Explaining AI Explainability: Vision, Reality and Regulation

The successes of AI often feel like magic, with many of us watching from the audience, having no insight into how or why it works. When the outputs of AI are correct or otherwise to our liking, we're pleased. But when the outputs seem wrong or affect us negatively, the "magic" of AI becomes a liability, not a charm.

In everyday cases, the failed magic of AI can be annoying:

Amazon, do you really think that after buying one mechanical lawn mower, I want to buy a second?

Spotify, why do you think that after I choose "Big Yellow Taxi" by Counting Crows, I always want to hear Jakob Dylan's "One Headlight"?

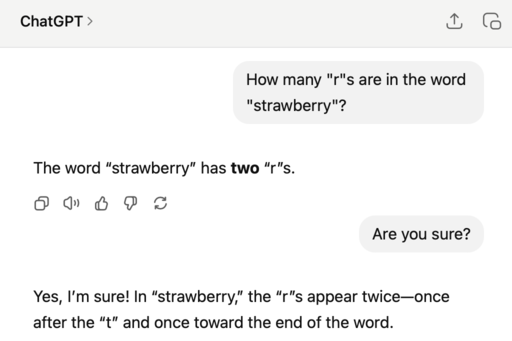

ChatGPT running GPT-4, are there really two "r"s in "strawberry" (see the screenshot)? ChatGPT running GPT-5, are there really three "b"s in "blueberry"?

But in high-risk cases that involve fundamental rights, health or safety, being satisfied with "AI as magic" would mean that

if an AI algorithm recommends a longer prison sentence, or

if you believe an AI insurance tool caused your insurance company to unfairly refuse your health costs, there is no explanation of the decision that you can challenge during an appeal, and

if an autonomous AI system is involved in traffic deaths, it cannot be determined whether the AI or the driver was at fault.

These scenarios are taken from real events. The first is based on the case of Eric Loomis, a Wisconsin man who claimed that the COMPAS risk-assessment AI unfairly led to a longer prison sentence. In 2016 the Wisconsin Supreme Court ruled against him, favoring the intellectual property rights of the company selling COMPAS to US courts over Loomis' due-process request for access to why his sentence was longer. In 2017 the US Supreme Court declined to hear his appeal, leaving that ruling in place.

In the second scenario, a 2023 class-action lawsuit against UnitedHealth Group over its use of AI to approve or deny cost reimbursement ("insurance claims") is ongoing at the time of writing.

An example of the third scenario is a jury verdict in Florida, USA, that Tesla bears partial responsibility for a crash involving a Tesla car running its AI-based Autopilot software. Tesla has been ordered to pay over 240 million USD in damages.

In the merely annoying examples of apparent AI failures, it would be useful, though not essential, to have some explanation of why ChatGPT miscounts the letters in words, why Amazon recommends you buy an extra lawn mower, or why Spotify thinks you always want to hear "One Headlight" by the Wallflowers. Rather than understanding this myself as a user, I'd actually prefer that the people developing the AI behind ChatGPT, or the recommendation AI powering Amazon and Spotify, understand why it misfires as it does, so they can make the product better.

For the more serious examples of possible AI mistakes leading to death, loss of liberty or bankruptcy, it's not enough for the developers of these AI systems to understand why (though that's key as well). End users, lawyers, regulators and judges need to be able to appreciate sufficiently why an AI system behaved a certain way, to ensure that key rights such as due process, the right to life and health, the right to education and the right to employment are upheld.

In this blog series, we guide you through the most important notions of explainable AI to help you understand where it can, and at present cannot, help your company's AI products.

In this first post, we explain what explainable AI is, and set out the vision for the research programs dedicated to explainable AI.

The second post will take a critical look at what the best research of the day tells us about explainable AI, with special attention to how and where current explainable AI can be used effectively. We also point out where explainable AI is still very much a work in progress. Unless your business is dedicated to selling explainable AI, you should be cautious about devoting many resources or much confidence to these work-in-progress areas of explainable AI.

Finally, we look at regulation related to explainable AI, including the EU General Data Protection Regulation (GDPR) and the AI Act. We also review some non-binding guidance from medical and financial regulators that gives insight into what future regulation might look like with respect to AI explainability.

Playing the "why" game to understand explainable AI

The topic of AI explainability is difficult for at least two reasons. The first is that the terms involved can take on very different meanings for different people and in different circumstances. When defining artificial intelligence, we've found in workshops and trainings that the metaphor of a zoo is more useful than a dictionary definition. Princeton academics Arvind Narayanan and Sayash Kapoor start their book "AI Snake Oil" with a thought experiment about a world in which all modes of transportation are referred to by a single word, "vehicle." AI is not one thing but many related things, so calling examples as different as computer vision, language generation and adaptive robots by the same name makes the already difficult task of understanding AI even more challenging.

We have already seen the second reason AI explainability is challenging in the examples above, which involve at least three different uses of asking "why". Different roles and different situations result in different requirements for an explanation to be satisfactory.

To see why the concept of explanation also requires unpacking, think back to your childhood and a game many (all?) of us played: the "why" game. The preschool years, ages 3 to 5, are often called the "why" phase, because at this age children begin (and rarely stop) asking why. Why is grass green? Why do people burp? Why can fish keep their eyes open under water?

At some point, when faced with questions beyond their knowledge, a parent might make up an answer just to get some peace. These white lies work against the child's goal of understanding the world, but they have the benefits of satisfying the child's curiosity itch and maintaining their faith in their parents' omniscience. Getting an explanation, even a fabricated one, gives the child a feeling of reassurance and can help reduce anxiety. These less-than-faithful answers to "why" questions can also increase the parent's net promoter score, at least temporarily :)

When the child enters school, "why" questions are also used to challenge decisions. I remember that in music class everyone wanted to be handed drums or cymbals. If you were last, you got only a dinky little triangle. Invariably, the student with the triangle would ask the teacher, "Why did I get the triangle?" As Professor Bob Duke pointed out during my PhD course in pedagogy ("learning how to teach"), this question isn't just a question but an expression that the asker is unhappy and wants a different outcome. The child asks "why" to challenge a decision that went against their interests.

Fast-forward to a university application interview, and the roles in the "why" game reverse. A grown child seeking admission might be asked, "Why are you a good fit for University X?" This "why" question is a form of informed consent: the university is deciding whether or not to give consent to your application (i.e. give you a university spot), and wants to base that decision on reliable, detailed information.

Finally, if the now-adult child's mother dies after a routine operation, the child and her widowed father justifiably ask "why" she was taken from them. If, as happened to family friends, the explanation is that a surgeon left surgical equipment in the mother's body, leading to an infection and death, this "why" question is used to determine liability: who is at fault when something goes wrong.

Through the "why" game, we see that there are several related yet distinct notions of explanation that matter in our lives. Each of these matters in the context of AI explainability, and each requires its own treatment.

"Why" to understand and debug the world. The early-language "why" game uses explanations as a way to understand the world around us, in order to function better and solve problems that arise ("debug"). This corresponds to the initial and still primary use of explainable AI techniques, whereby AI solution developers use explanations as a tool to debug and improve their work.

"Why" to feel reassured. A second function of the early-language "why" game is to establish trust, even if that trust is poorly placed. This occurs when a parent grows weary of playing the "why" game and begins fabricating answers to placate the child. On the child's side, these white-lie answers mean the child can keep trusting that parents know everything and that the world makes sense. In terms of AI, this kind of "explainability" is what makes end users feel more comfortable using AI solutions that come with explanations, even if the explanations are themselves questionable or even wrong.

"Why" to challenge a decision. In real life, parts of the US justice system use an AI algorithm to predict the likelihood that a convicted person will reoffend after being released from jail. This prediction is given to the judge to help determine how long the person remains in jail. In the Loomis case mentioned above, the Wisconsin Supreme Court ruled that the convicted person had no right to challenge the technical implementation of the AI algorithm that led to his longer jail sentence.

"Why" for informed consent. A real-life, high-stakes example of explainable AI comes from medicine, where the notion of "informed consent" means that a patient should be given intelligible reasons for a health recommendation in order to make a decision. This form of explanation can and does break down for AI applications, as seen in the case of Black patient Frederick Taylor. In 2019, he visited a hospital with heart attack symptoms but was sent home, due in part to an AI algorithm predicting his heart attack risk to be minimal. When he suffered a heart attack six months later, he learned that the predictive algorithm was biased towards white patients (here, "biased" means that it was most accurate for white patients; see Chapter 8 of Hidden in White Sight: How AI Empowers and Deepens Systemic Racism).

"Why" to assign liability. In 2022, an Air Canada AI chatbot advised a traveller incorrectly on how to obtain a discount on his flight. The British Columbia Civil Resolution Tribunal decided in favor of the traveller, awarding him about 800 Canadian dollars in damages for the AI mistake. As AI becomes embedded even further in our cars, toys, medical devices (and decisions), not to mention the running of police forces, border security, military and the judicial system, courts will need to decide who is responsible for mistakes made by AI.

The vision of explainable AI: faithfully winning the "why" game for all questions

The feeling of "magic" we have when AI works well, these "How did they do that?!?!" moments, are why businesses are excited about AI as a way to offer services previously only dreamed about and solve previously unsolvable problems. In the case of machine learning ("ML"), the dominant form of AI in which a computer algorithm is "trained" on historical data, the non-technical explanation of how ML can achieve better-than-human performance on a range of tasks often mentions how ML can detect previously hidden patterns in the historical data used to train it.

The vision of explainable AI is, on the one hand, to achieve human-level (or better) performance "just" by feeding in historical data (in contrast to non-AI software, which is programmed line by line) and, on the other hand, to expose whatever pattern the ML model found so that we humans can appreciate and evaluate why the AI system gave the output it did.
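The easiest case of this vision can be sketched in a few lines of Python, under strong simplifying assumptions: the "ML" is a plain linear model fitted by ordinary least squares, and the "historical data" is a hypothetical toy dataset generated from a hidden rule. For such a model, the learned weights are the pattern, and splitting a prediction into weight × value per feature is an exact, faithful explanation. The feature names, the data and the helper functions `fit_linear` and `explain` are all invented for illustration; modern deep learning models offer no such direct readout, which is precisely why the vision is hard to realize.

```python
from typing import Dict, List, Tuple


def fit_linear(X: List[List[float]], y: List[float]) -> List[float]:
    """Fit ordinary least squares by solving the normal equations (X^T X) w = X^T y.

    Returns [intercept, w_1, ..., w_k]. A toy solver for small, well-conditioned data.
    """
    rows = [[1.0] + [float(v) for v in x] for x in X]  # prepend an intercept column
    n = len(rows[0])
    # Augmented matrix [X^T X | X^T y]
    A = [
        [sum(r[i] * r[j] for r in rows) for j in range(n)]
        + [sum(r[i] * t for r, t in zip(rows, y))]
        for i in range(n)
    ]
    # Gauss-Jordan elimination with partial pivoting
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        p = A[col][col]
        A[col] = [v / p for v in A[col]]
        for r in range(n):
            if r != col:
                factor = A[r][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[i][n] for i in range(n)]


def explain(weights: List[float], names: List[str], x: List[float]) -> Tuple[float, Dict[str, float]]:
    """Split one prediction into per-feature contributions (weight * value).

    For a linear model this decomposition is exact, i.e. a faithful explanation.
    """
    contributions = {name: w * v for name, w, v in zip(names, weights[1:], x)}
    prediction = weights[0] + sum(contributions.values())
    return prediction, contributions


# Hypothetical toy "historical data": plant height generated by the hidden rule
# height = 1.0 + 2.0 * hours_of_sun + 0.5 * litres_of_water
names = ["hours_of_sun", "litres_of_water"]
X = [[1, 2], [2, 1], [3, 4], [4, 3], [5, 5]]
y = [1.0 + 2.0 * sun + 0.5 * water for sun, water in X]

weights = fit_linear(X, y)
prediction, contributions = explain(weights, names, [3, 2])
print("learned pattern:", [round(w, 3) for w in weights])
print("prediction:", round(prediction, 3))
print("why:", {k: round(v, 3) for k, v in contributions.items()})
```

Run as-is, the sketch recovers the hidden rule (weights of about 1.0, 2.0 and 0.5) and attributes 6.0 of the predicted 8.0 to sunlight and 1.0 to water: a small-scale "Move 37 explained", where the human can check the pattern directly.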

This, then, is the dream of explainable AI: something like DeepMind's Go-playing AI, AlphaGo, and its famous "Move 37", but for all AI use cases. In the epic 2016 Go match between Google DeepMind's AlphaGo and Go master Lee Sedol, game two featured a pivotal move by AlphaGo, Move 37, that at first seemed to be a mistake and required a full 15 minutes of thinking before Lee formulated his next move. This strange Move 37, however, turned the course of the game in the AI's favor. After time to reflect on this magical Move 37, European Go champion Fan Hui came to recognize its beauty.

Moving beyond AI that plays board games, the outcome of an AI system may not be something a human would have come up with (there's some magic to it), but when presented with the pattern behind the "why", we humans should be able to recognize its correctness, perhaps even brilliance.

This same ideal AI explanation can also be seen in the pre-computer age, in Arthur Conan Doyle's detective character Sherlock Holmes. In the first of the Holmes stories, A Study in Scarlet, when Holmes first meets his future partner, Dr. John Watson, he deduces (more precisely, "abductively reasons") that Watson has just returned from the war in Afghanistan. This deduction seems magical, or at least inexplicable, to Watson. Later in the story, Watson presses Holmes, who explains that Watson's bearing marked him as both a medical man and a soldier, hence an army doctor. His tanned complexion meant he had been in the tropics, while his exhausted expression and injured left arm narrowed the possible locations to one place: Afghanistan.

What began as a magical deduction becomes, in Watson's words, "simple enough as you explain it." We'll define this type of explanation as follows.

Sherlock Explanation: An explanation of a seemingly magical AI output that, when provided to an affected stakeholder, makes the outcome "simple enough."

Note a few aspects of a Sherlock Explanation that we'll come back to in the rest of this blog series.

First, the explanation is relative to an affected stakeholder, and what is important to him or her.

Second, the explanation is "simple enough" with respect to this stakeholder.

Third, though not explicit in our definition, the explanation is faithful, meaning it is an accurate representation of the process by which Sherlock (or an AI) came to its conclusion.

So if the vision of explainable AI were realized, Sherlock Explanations would mean that

a developer of AI systems could use explainable AI techniques to figure out why a model made a mistake, and thereby improve its performance (development and debugging),

an end user of an AI system could use explainable AI to reduce their anxiety about the new technology and trust its outputs (fostering adoption),

a graduate student expelled for allegedly using ChatGPT to write his application material could use explainable AI to show that the AI-detection algorithm used by his university made a mistake in his case (decision challenge),

a patient being recommended a novel surgery based on an AI recommendation could examine the output of explainable AI to correctly decide whether or not to consent to the surgery (informed consent), and

a jury in a wrongful death case involving autonomous driving could use explainable AI outputs for the car's behavior to determine whether the fault lay with the AI or some other factor (liability).

Looking to popular culture, the BBC's rendering of Sherlock Holmes gives a useful test for whether or not explainable AI succeeds. On several occasions in the series, Holmes explains one of his seemingly magical deductions, after which the person in question replies, "Oh, I see. That's not as impressive as I thought." In these scenes, the recipient of the explanation is able to independently, faithfully and conclusively evaluate an output that previously seemed magical.

Article Series "Explaining AI Explainability"

- Explaining AI Explainability: Vision, Reality and Regulation

- Explaining AI Explainability: The Current Reality for Businesses