Is AWS AppRunner the Worst Way to Run Containers?

Let’s talk about deploying applications to AWS.

AWS famously has more than 17 ways to run containers. This is what happens when you are the biggest and one of the oldest cloud providers. The way AWS usually fixes this issue is by releasing even more services to run containers, probably in the hope that the new way will become the way, at least for some kinds of workloads.

Containers, as you know, solve the packaging issue for your application. Once packaged as a container image, you can deploy it anywhere. Ideally, you want to just say “run this container image behind this domain” and call it a day.

In reality, you always have to take a few more steps - you need a load balancer, you need autoscaling, you need to connect your containers to the database and so on. In the case of Kubernetes, you need even more than that, with lots of moving pieces, an Ingress Controller and so on - but let’s not talk about Kubernetes any more today.

The easiest, most flexible and “serverless” way to run containers on AWS is ECS, together with Fargate. It has just a handful of simple concepts, and to expose your application you only need to provision an ALB and connect it to your ECS Service. It’s not too complicated, but it’s also not ideal.

Google Cloud does it a bit better - they have a service called Cloud Run, which just takes a container image, gives you a URL and that’s it. It scales your application automatically, the way serverless functions do. No load balancer to handle, everything is nice and easy. We are using Cloud Run for our product Claimora - it’s simple, easy-to-use timesheet software, built for European businesses that need to comply with digital time tracking regulations, and it works great both for independent freelancers and for companies.

Enter AWS AppRunner, Amazon’s answer to Cloud Run. Finally, we don’t need to mess around with VPC, with ALB, with anything, really. We can just take a container image and run it on AWS, and pay only for containers themselves. Or is it really that simple? Let’s find out.

Some time ago, we released an open source tool, “voice-to-gpt”. It allows you to talk to OpenAI’s GPT - and it will talk back to you. It’s basically a voice assistant, powered by GPT. We already have container images built and publicly available for anyone, thanks to GitHub Actions and GitHub Package Registry. Let’s try to deploy it using AppRunner.

I am going to do that directly in the AWS Console, doing some shameful ClickOps. At the end of the article, I will tell you how to do this properly.

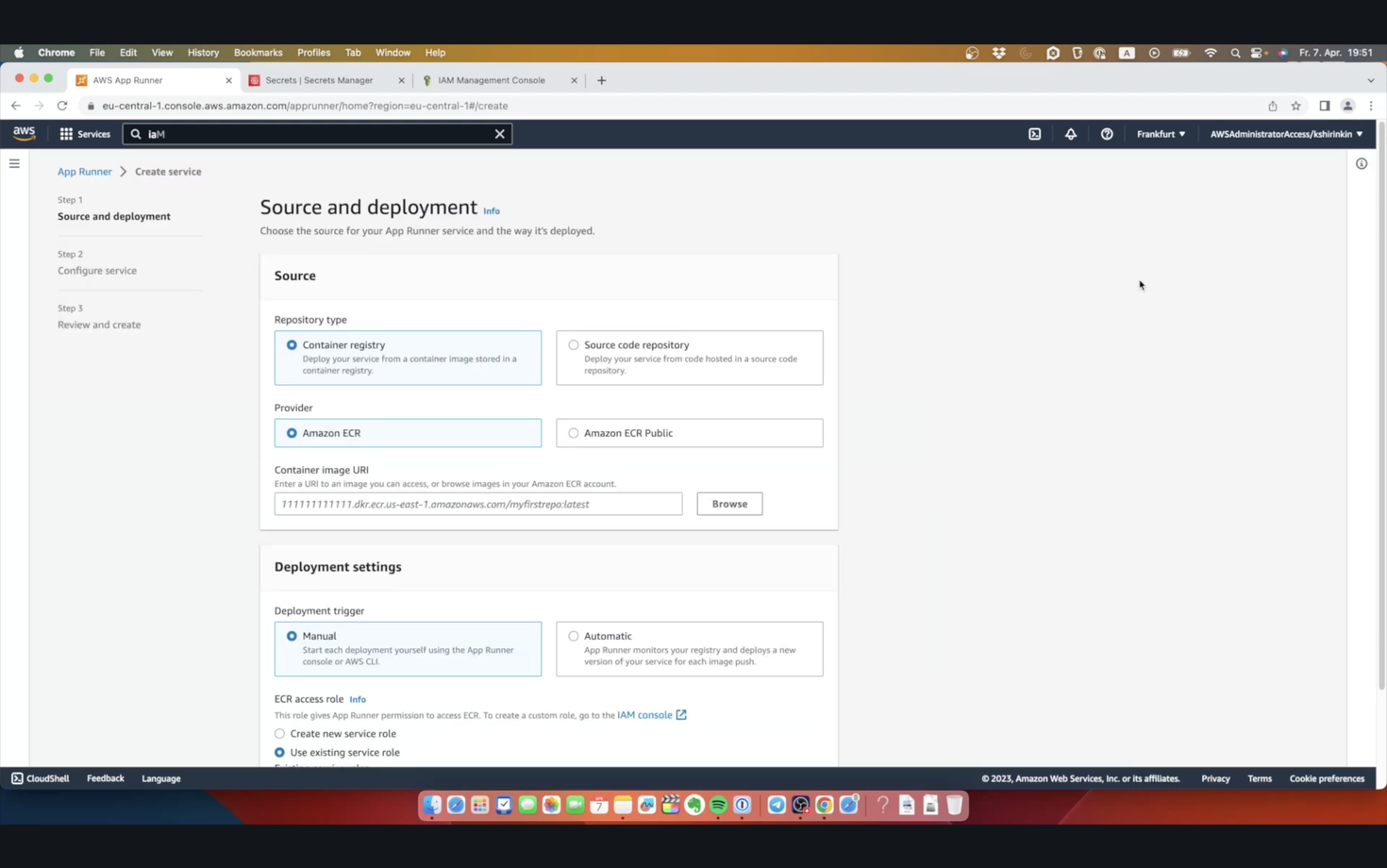

Applications are called Services in AppRunner. Let’s create a new one. Already on this screen, we see a problem: we can either use container images from AWS ECR, or we can connect our GitHub.com repository. So if you are using any other container registry, or any other source code management system, you are out of luck.

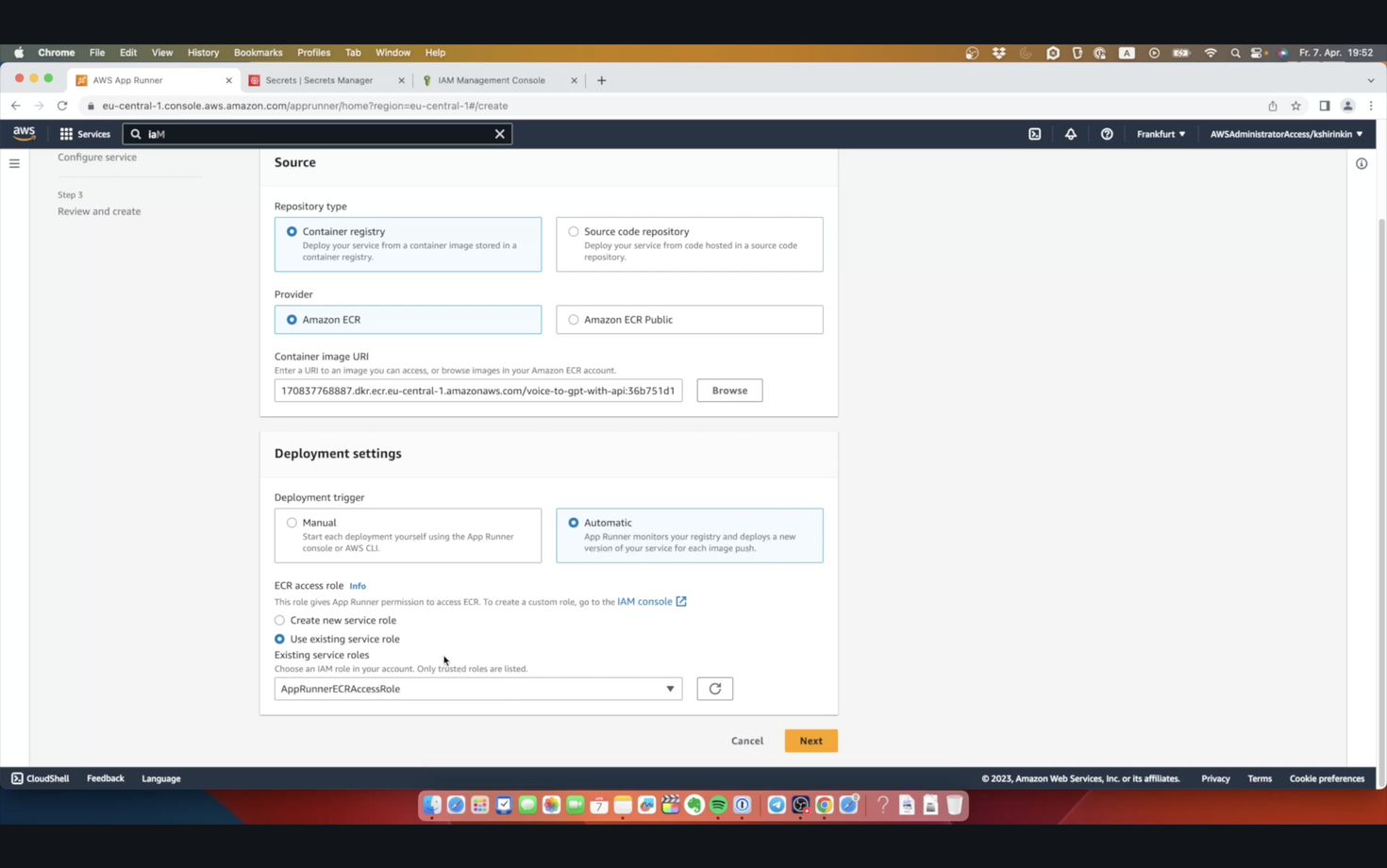

Let me choose the image and the tag. We can enable automatic deployments whenever there is a new image tag, which is nice, as long as it’s working. We also need to create a new service role so that AppRunner can access ECR.

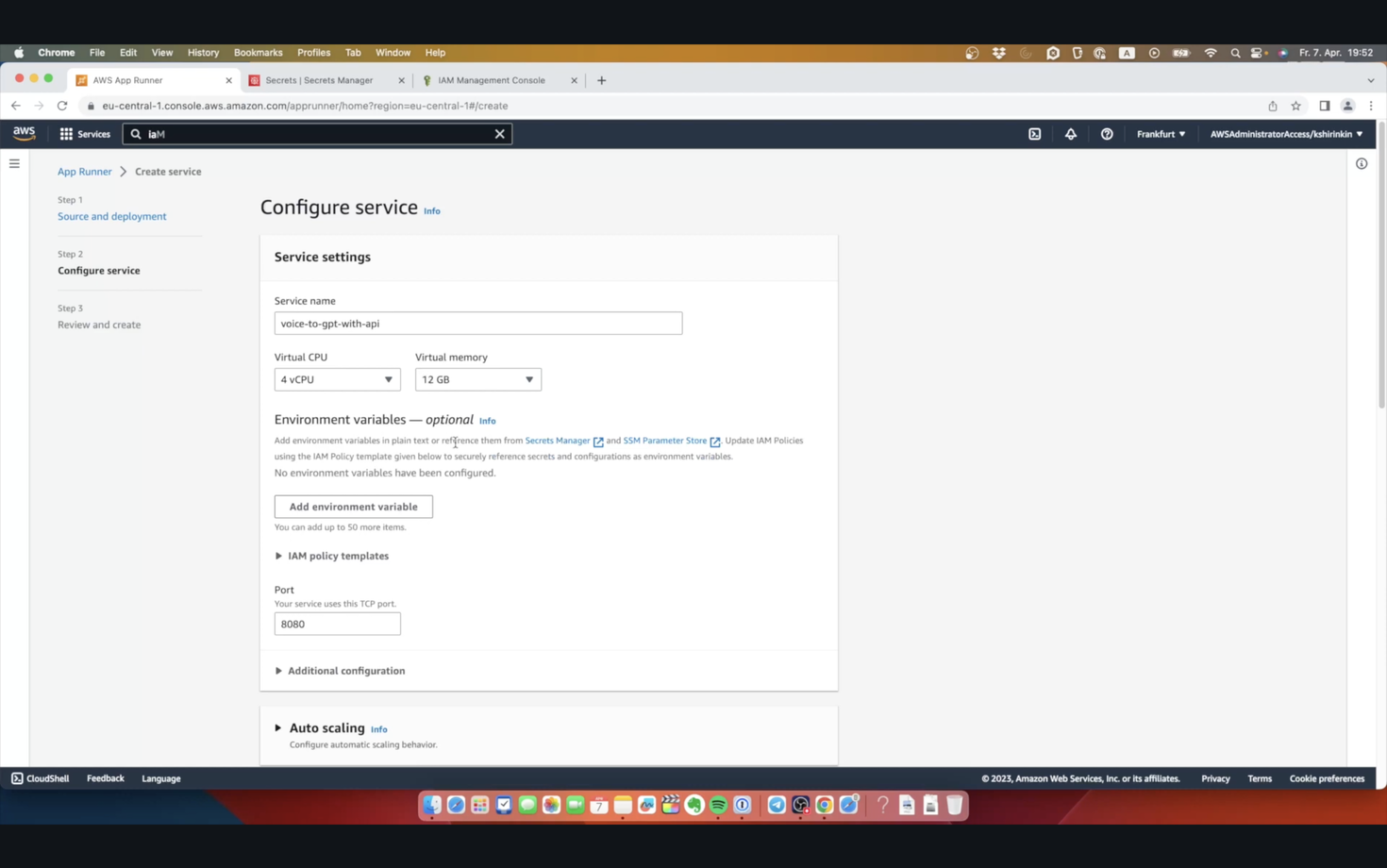

On the next screen, I will fill in the service name as “voice-to-gpt-with-api”. Next, we see another AppRunner limitation - our containers can only have a maximum of 4 vCPU and 12 GB of RAM. This is not a huge deal, because that’s a limit per container - AppRunner scales the number of containers automatically for you, and it’s actually better to have many smaller containers than a few huge ones.

The next part is environment variables. I need to fill in an OpenAI API key here, so that the app is able to access the GPT and Whisper APIs. I have the key in AWS Secrets Manager, so I can just reference its ARN.

Next, I need to change the port to 5000, the default Python Flask port.
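For reference, the image, secret and port settings from these screens map onto the `SourceConfiguration` structure of the App Runner `CreateService` API. The following is a minimal sketch that only builds that payload and does not call AWS. The account ID, region, image URI, role ARN, secret ARN and the `OPENAI_API_KEY` variable name are all placeholders chosen for illustration, not values from this deployment.

```python
import json

# All identifiers below are hypothetical placeholders.
IMAGE_URI = "123456789012.dkr.ecr.eu-central-1.amazonaws.com/voice-to-gpt:latest"
ACCESS_ROLE_ARN = "arn:aws:iam::123456789012:role/AppRunnerECRAccessRole"
OPENAI_SECRET_ARN = (
    "arn:aws:secretsmanager:eu-central-1:123456789012:secret:openai-api-key-AbCdEf"
)


def build_source_configuration(image_uri, access_role_arn, secret_arn, port=5000):
    """Return a SourceConfiguration dict for an image stored in ECR."""
    return {
        "ImageRepository": {
            "ImageIdentifier": image_uri,
            "ImageRepositoryType": "ECR",
            "ImageConfiguration": {
                # The API expects the port as a string.
                "Port": str(port),
                # Maps an environment variable name (assumed here) to the
                # ARN of a Secrets Manager secret.
                "RuntimeEnvironmentSecrets": {"OPENAI_API_KEY": secret_arn},
            },
        },
        # Redeploy automatically when a new image is pushed.
        "AutoDeploymentsEnabled": True,
        # Role that lets App Runner pull the image from ECR.
        "AuthenticationConfiguration": {"AccessRoleArn": access_role_arn},
    }


source_configuration = build_source_configuration(
    IMAGE_URI, ACCESS_ROLE_ARN, OPENAI_SECRET_ARN
)
print(json.dumps(source_configuration, indent=2))
```

With boto3, a dict like this would be passed as the `SourceConfiguration` argument of `boto3.client("apprunner").create_service(...)`, next to `ServiceName` and `InstanceConfiguration`.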

The auto-scaling part is probably the most interesting here. We can tell AppRunner how many instances it may run at the same time, and we can set the concurrency. Instances are containers, each with the vCPU and RAM we set earlier. Concurrency here means how many concurrent requests a single container can process. So if my Python app can handle at most 5 requests at a time, we would set 5 here. As soon as a container reaches this limit, AppRunner will create more instances.

It’s a curious concept, because auto-scaling in AppRunner is always bound to how your application works, and not to CPU or memory consumption. You need to know how your app processes requests in order to be able to configure this autoscaling, otherwise you might end up with performance issues, or you will overpay for too many instances. In other words, you need to do your homework to be able to configure AppRunner correctly. As for this service, I will just use the default configuration.
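To get a feel for how the concurrency setting translates into instance counts, here is a rough back-of-the-envelope model. It is a simplification and not AppRunner’s actual scaling algorithm: it assumes every instance serves exactly `max_concurrency` requests at once, and the `min_size` and `max_size` defaults are illustrative bounds, not values taken from this service.

```python
import math


def instances_needed(concurrent_requests, max_concurrency, min_size=1, max_size=25):
    """Estimate the instance count for a given number of concurrent requests.

    Simplified model: each instance serves up to max_concurrency requests
    at once, and the result is clamped to the range [min_size, max_size].
    """
    if max_concurrency < 1:
        raise ValueError("max_concurrency must be at least 1")
    wanted = math.ceil(concurrent_requests / max_concurrency)
    return max(min_size, min(wanted, max_size))


# An app that handles at most 5 requests at a time, under different loads.
for load in (0, 5, 6, 40, 500):
    print(load, "concurrent requests ->", instances_needed(load, max_concurrency=5))
```

Setting the concurrency too high in this model means fewer instances and overloaded containers, while setting it too low means paying for instances that sit mostly idle.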

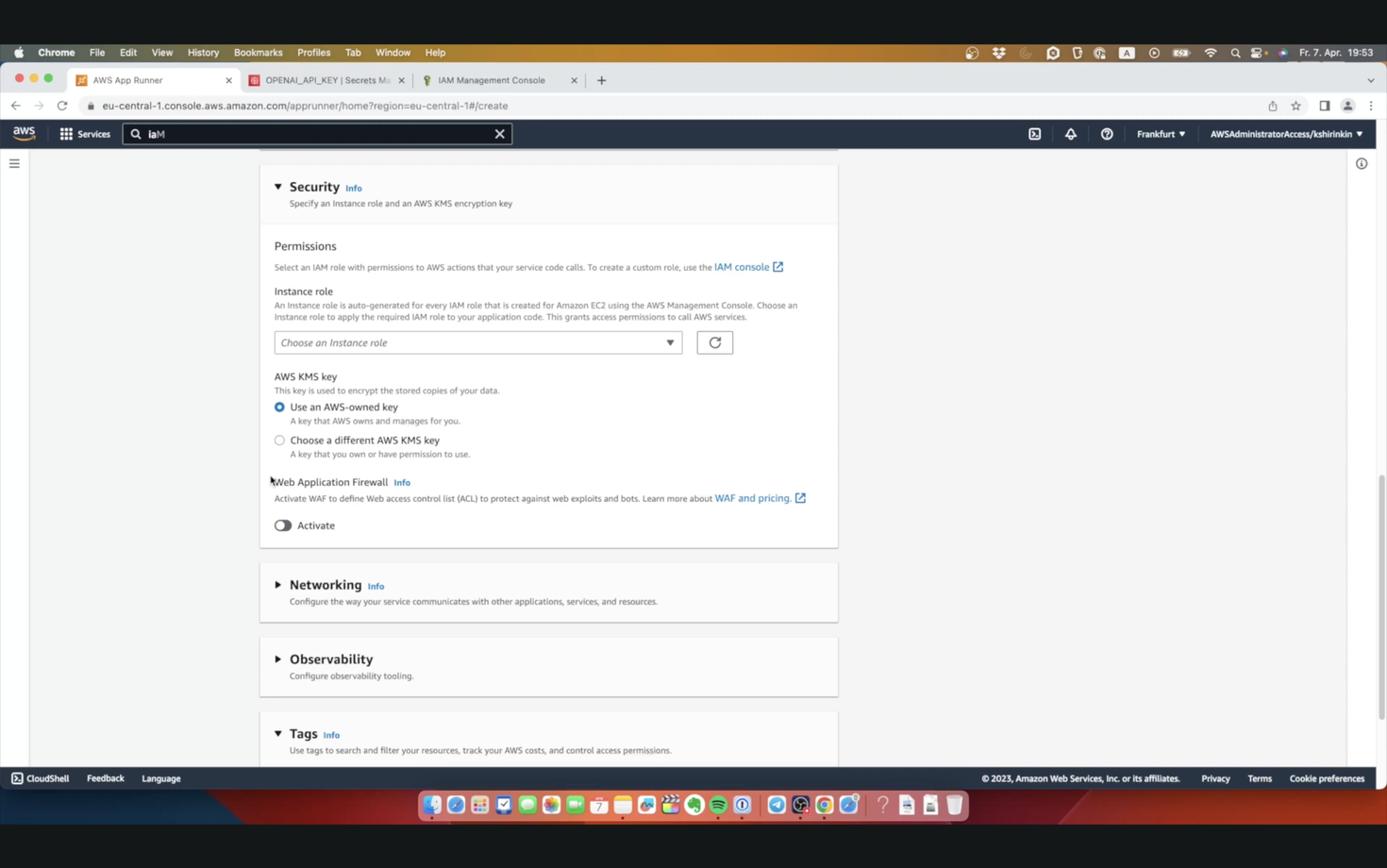

Next we need to configure an instance role, an IAM Role that our instances will use. It’s impossible to skip this step, which means you have to know how IAM works. I’ve already created the role in advance and will just pick it from the dropdown.
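If you have not created such a role before, its key ingredient is the trust policy that lets AppRunner instances assume it. The sketch below only builds and prints that policy document. The service principal `tasks.apprunner.amazonaws.com` is the one AWS documents for instance roles; the function name is just an illustrative helper.

```python
import json


def apprunner_instance_trust_policy():
    """Trust policy that allows App Runner instances to assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "tasks.apprunner.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


print(json.dumps(apprunner_instance_trust_policy(), indent=2))
```

The printed JSON can be pasted into the “Custom trust policy” editor when creating the role in IAM.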

We can also enable Web Application Firewall for our app, enable tracing and connect this service to a VPC, if we have a database running there, for example. I will skip all of those options, because I really want to see this service up and running!

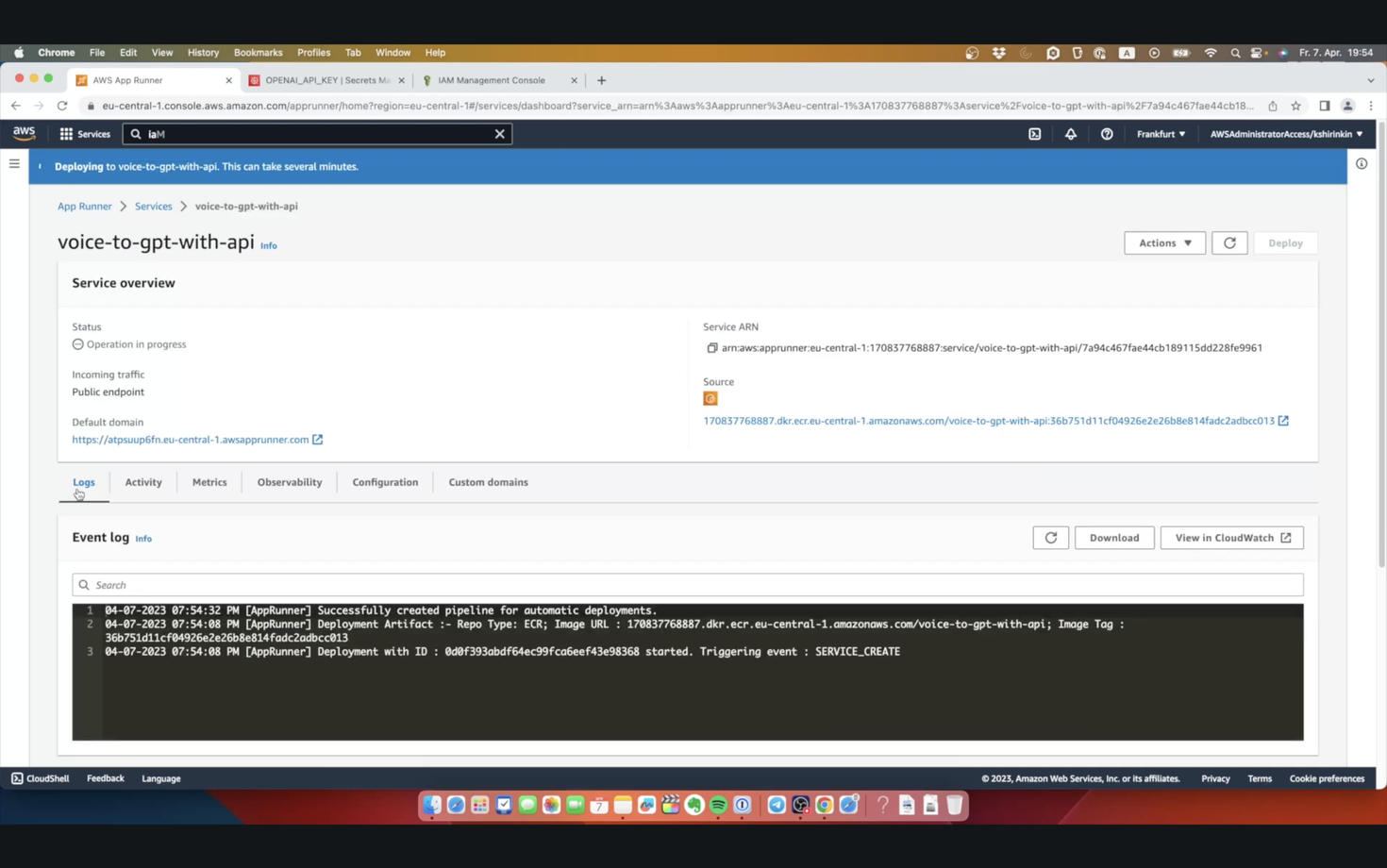

Finally, I can review my configuration and deploy the app!

Here we can see a reasonably nice interface, with logs, metrics and various service metadata. We can see the default domain assigned to this Service - we could also use our custom domain, of course. While the service is being created, let’s check the metrics. Here at the bottom, we can see a metric for concurrency, which can be extremely useful for properly tuning the auto-scaling configuration of this service.

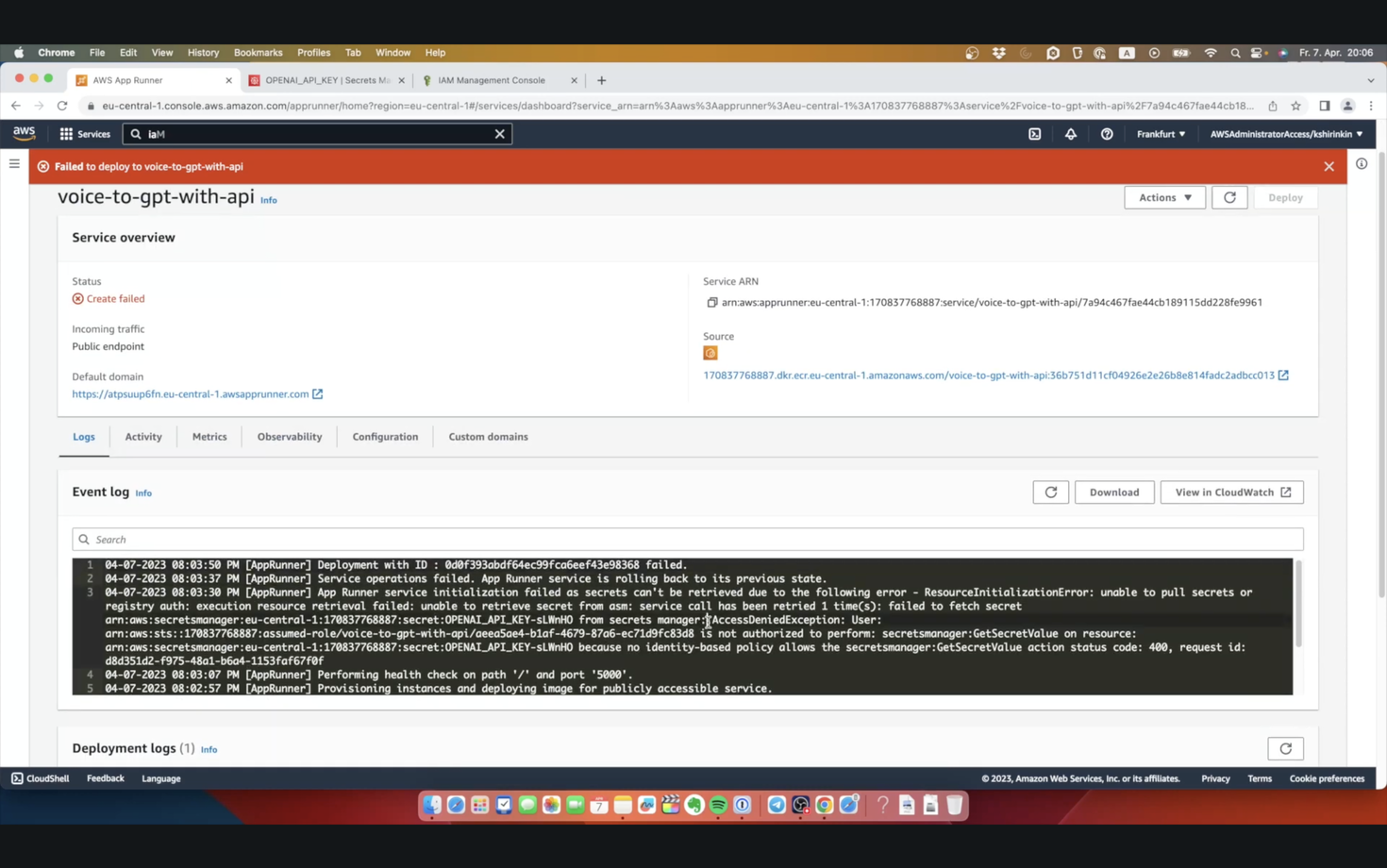

If we wait long enough, we will see an error message telling us that the IAM role can’t access the secret. That teaches us about two more issues with AppRunner:

It does not try to make our lives easier in terms of using AWS. We went through this kind of user-friendly UI, and this UI never gave us any hint that to use this secret, we need to adjust the role!

We cannot simply modify the configuration of the service if it failed the initial deployment. We have to delete it and re-create it from scratch. Yes.

So, I guess, let’s do everything from the beginning.

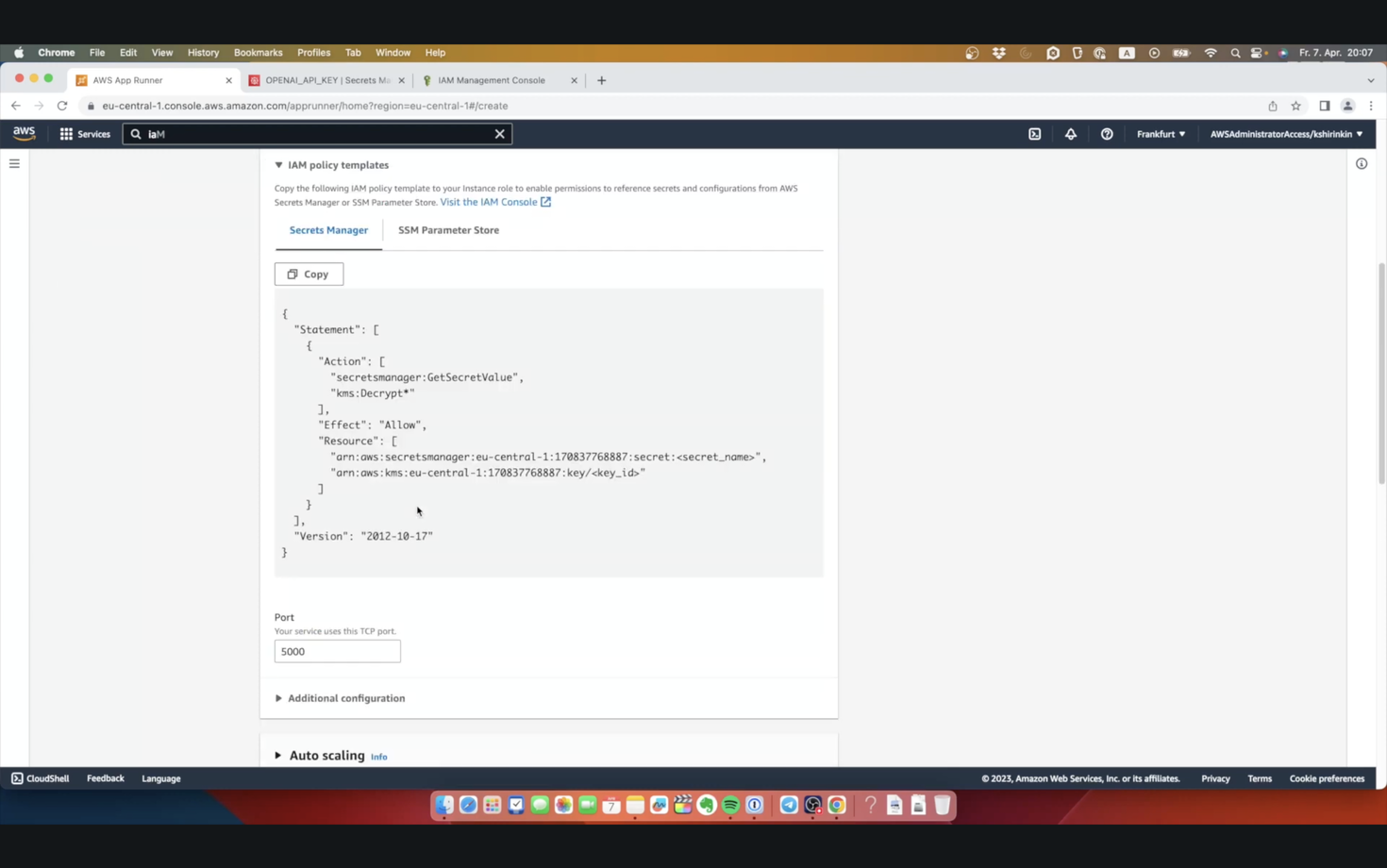

At this step, if we pay attention this time, AppRunner offers us a policy template. It does not have our exact secret filled in, but hey, it’s something! I can copy this and adjust it in the role’s inline policy.
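The adjusted policy boils down to allowing the instance role to read one specific secret. Here is a minimal sketch of such an inline policy, built in Python so the ARN can be swapped in easily. The secret ARN is a placeholder, and the exact template shown in the console may differ from this.

```python
import json

# Hypothetical placeholder; use the ARN of your own secret.
SECRET_ARN = (
    "arn:aws:secretsmanager:eu-central-1:123456789012:secret:openai-api-key-AbCdEf"
)


def secret_read_policy(secret_arn):
    """Inline policy that allows reading a single Secrets Manager secret."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["secretsmanager:GetSecretValue"],
                "Resource": [secret_arn],
            }
        ],
    }


print(json.dumps(secret_read_policy(SECRET_ARN), indent=2))
```

If the secret is encrypted with a customer managed KMS key, the role most likely also needs `kms:Decrypt` on that key.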

Now let me fill in everything again and deploy one more time.

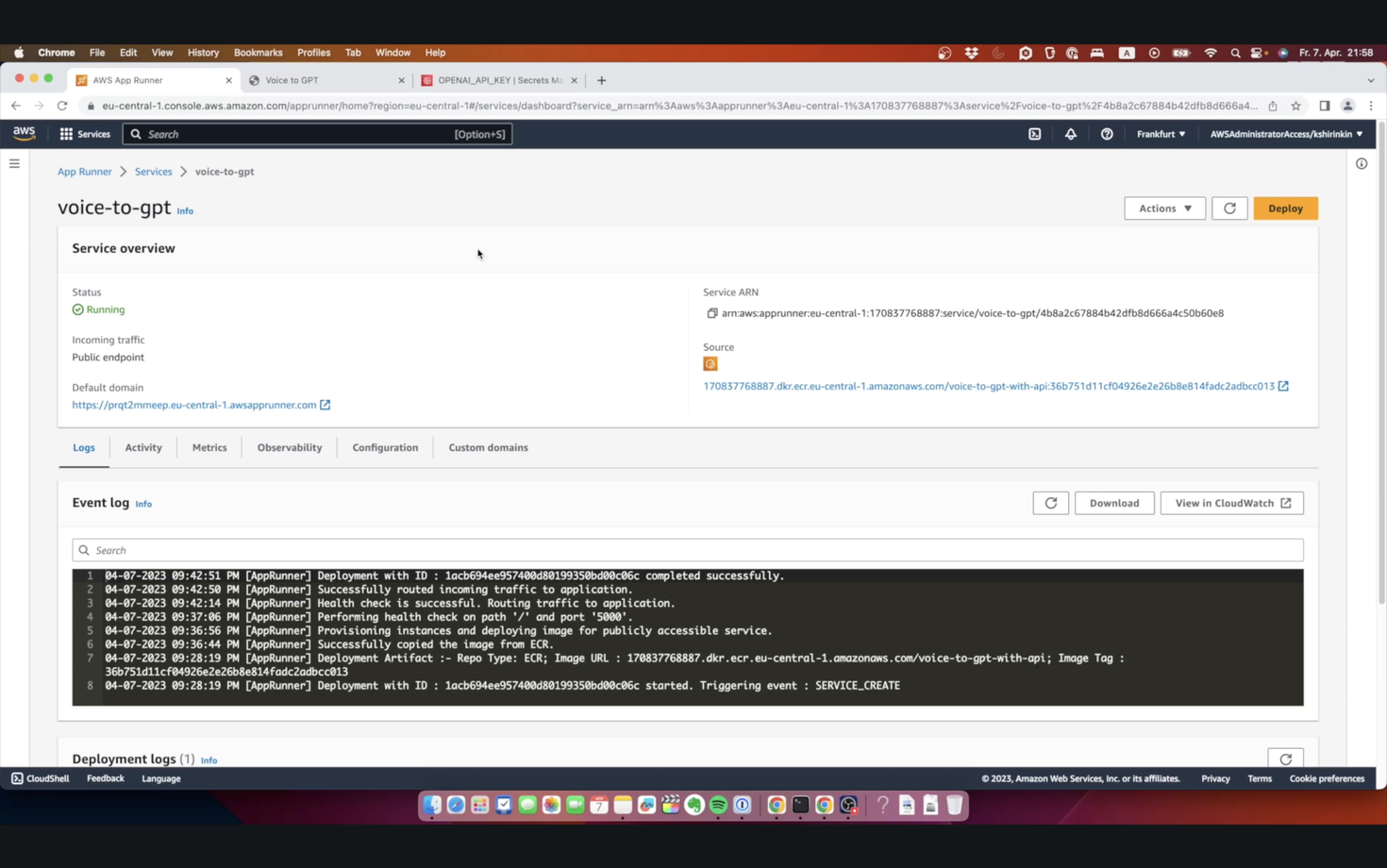

Finally, we can access the application. As a reminder, it’s a voice assistant based on GPT - you talk to it, and it talks back to you. It’s only an initial version, and we will improve it in the future. Check out our channel for a video from Pablo with more details on how it works, and also listen to DevOps Accents, episode 8, where Leo and Pablo discuss this tool.

Now that we have an application up and running with AppRunner, we can draw some conclusions about this service. I know that from the article it seems simple - in the end, it only took us a few minutes to deploy the app. In reality, I had to spend a ridiculous amount of time to get to this point. I showed you one example - a wrong IAM policy requires re-creating everything. But there is more. For example:

What if you make a typo in a secret name?

What if you misconfigure a health check?

What if you didn’t get the VPC config right?

When you start using AppRunner, every tiny mistake forces you to re-create the whole thing from scratch. That’s far from being developer- or operator-friendly. On top of that, I personally encountered a couple of annoying bugs. For example, AppRunner was telling me that the health check was failing, while at the same time I could see correct responses to AppRunner health check requests in the application logs. Only another re-creation of the Service fixed the issue, further reducing my confidence in the production-readiness of AppRunner.

Another problem is that AppRunner doesn’t make anything easier for you. You still need to know how to use VPC, IAM, Secrets Manager, CloudWatch and all the other tools. You even still need to think about properly configuring the auto-scaling, making it somewhat... less serverless, I guess?

And honestly, it should not be in such a state - it’s been two years since the initial release, and it’s still hard to find any significant benefits. The only win I found here is that I don’t have to set up an ALB and ACM to make my app available on the Internet. But in return, I get something like a beta version of GCP’s Cloud Run, with frustrating bugs and a minimum price of $30 a month - more or less the same as an ALB with an ECS Fargate Spot setup, and way more than a similar AWS Lambda based infrastructure.

So, I’ve mentioned that I would tell you how to do this deployment properly, with automation, without any ClickOps. Our recommended way to do that is to use the AWS Copilot CLI. It’s an open source tool from AWS that was built to make it as easy as possible to deploy containerized applications, both to ECS and AppRunner. You can easily use it inside a CI/CD pipeline, and it automates everything in a proper way, using CloudFormation - and at this time, it has enough features to handle most of your needs. We have a video about AWS Copilot.
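As a rough idea of what that looks like, the sketch below assembles a `copilot init` command for an AppRunner-backed service (Copilot calls this type “Request-Driven Web Service”). It only builds and prints the command and does not run it. The application name, service name and image URI are placeholders, and the flags are the ones I know from the Copilot CLI, so double-check them against `copilot init --help` for your version.

```python
import shlex


def copilot_init_command(app, service, image, port=5000):
    """Build the argument list for a one-shot `copilot init` deployment."""
    return [
        "copilot", "init",
        "--app", app,
        "--name", service,
        # Copilot's service type that is backed by App Runner.
        "--type", "Request-Driven Web Service",
        # Use an existing image instead of building from a Dockerfile.
        "--image", image,
        "--port", str(port),
        # Deploy to a test environment right away.
        "--deploy",
    ]


# Hypothetical names and image URI, for illustration only.
command = copilot_init_command(
    app="voice-to-gpt",
    service="api",
    image="123456789012.dkr.ecr.eu-central-1.amazonaws.com/voice-to-gpt:latest",
)
print(shlex.join(command))
```

In a CI/CD job, the same list could be handed to `subprocess.run(command, check=True)`. Further settings live in the service manifest that Copilot generates in the repository.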