Job Execution Systems: What is the difference between Jenkins, Rundeck, Airflow, GitLab CI and others?

Let's say you have a job to run.

This could be patching a server, making a database backup, validating configuration files, processing some data or whatever else you have to do.

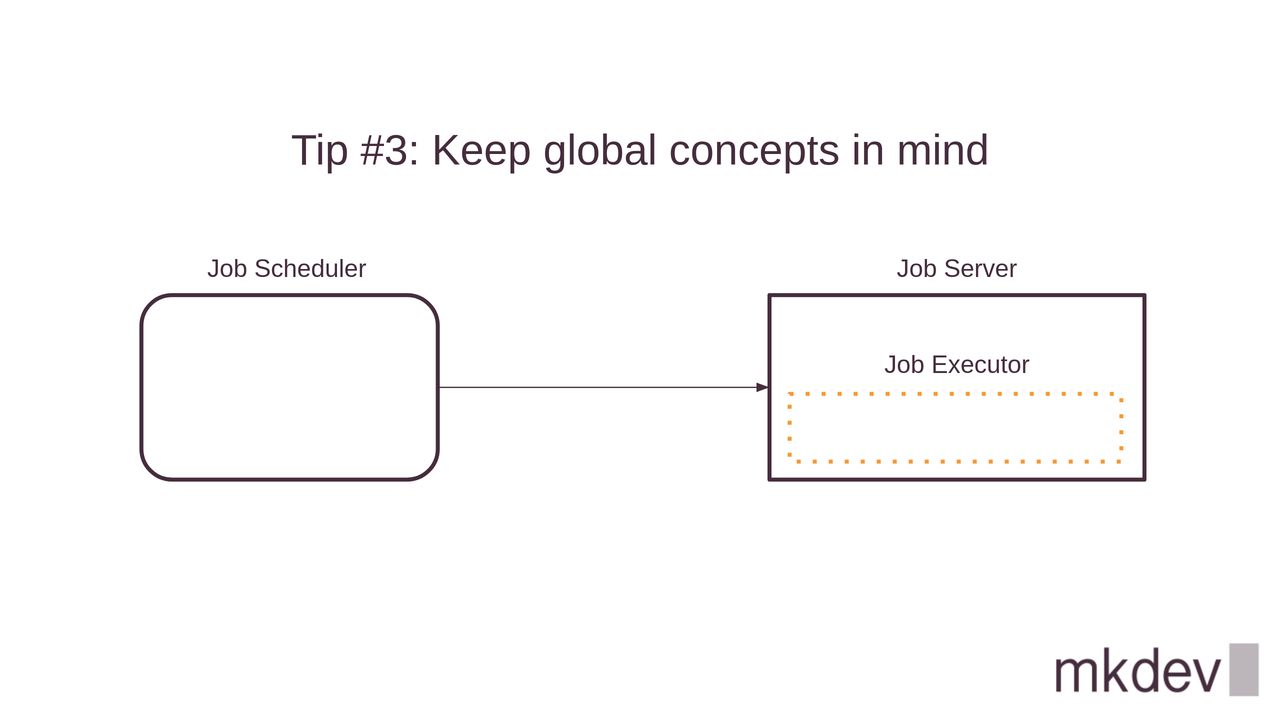

The first decision to make is where to run this job. Let's call such a place a "job server".

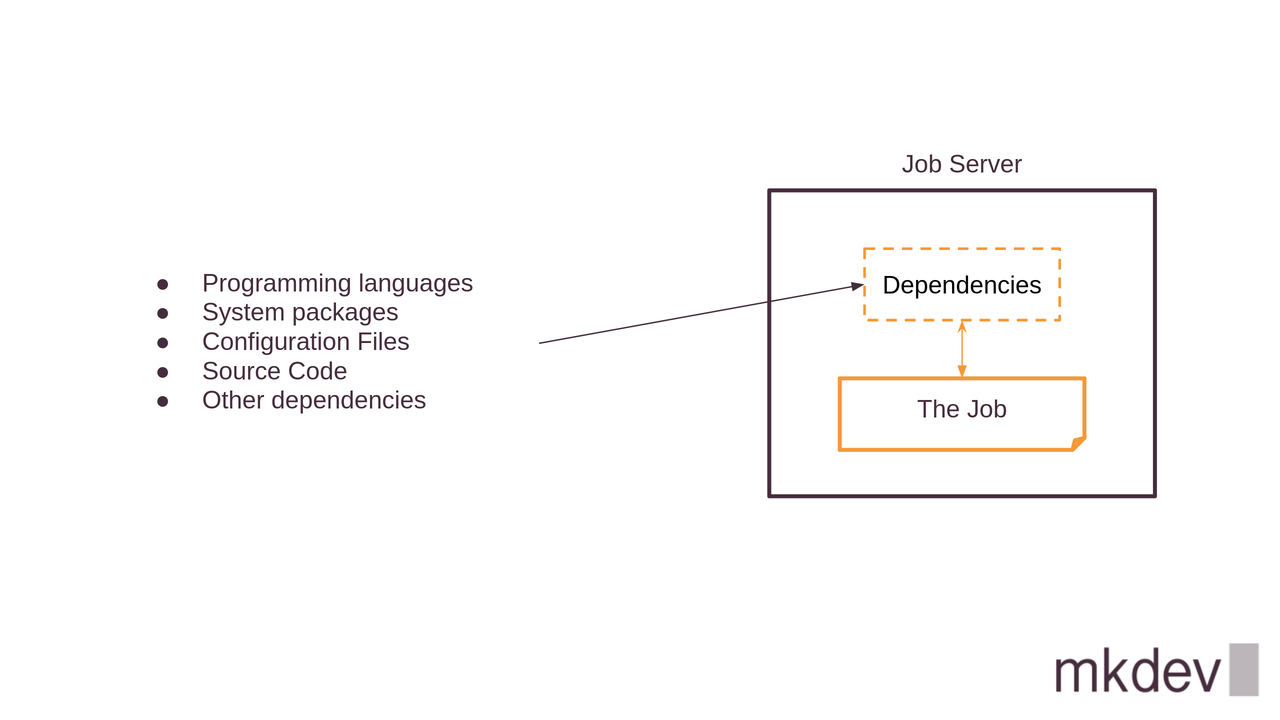

Your job relies on certain programming languages, system packages and source code. The "job server" has all of them available.

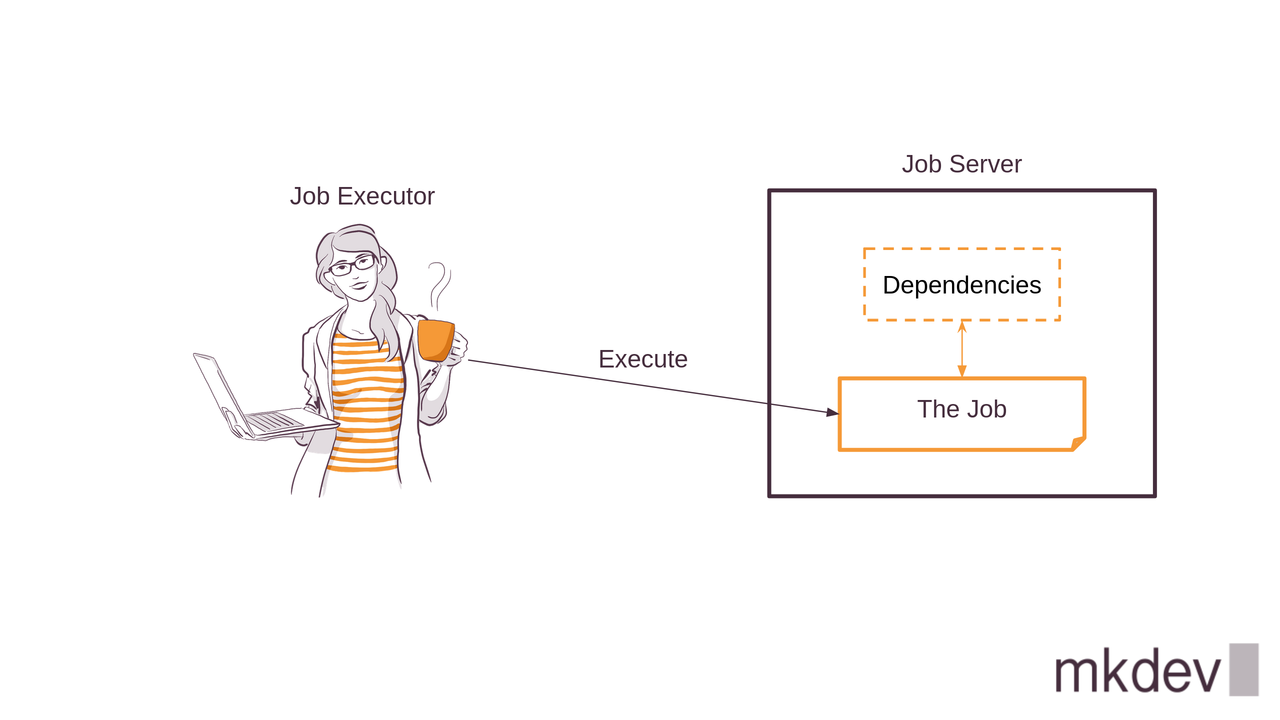

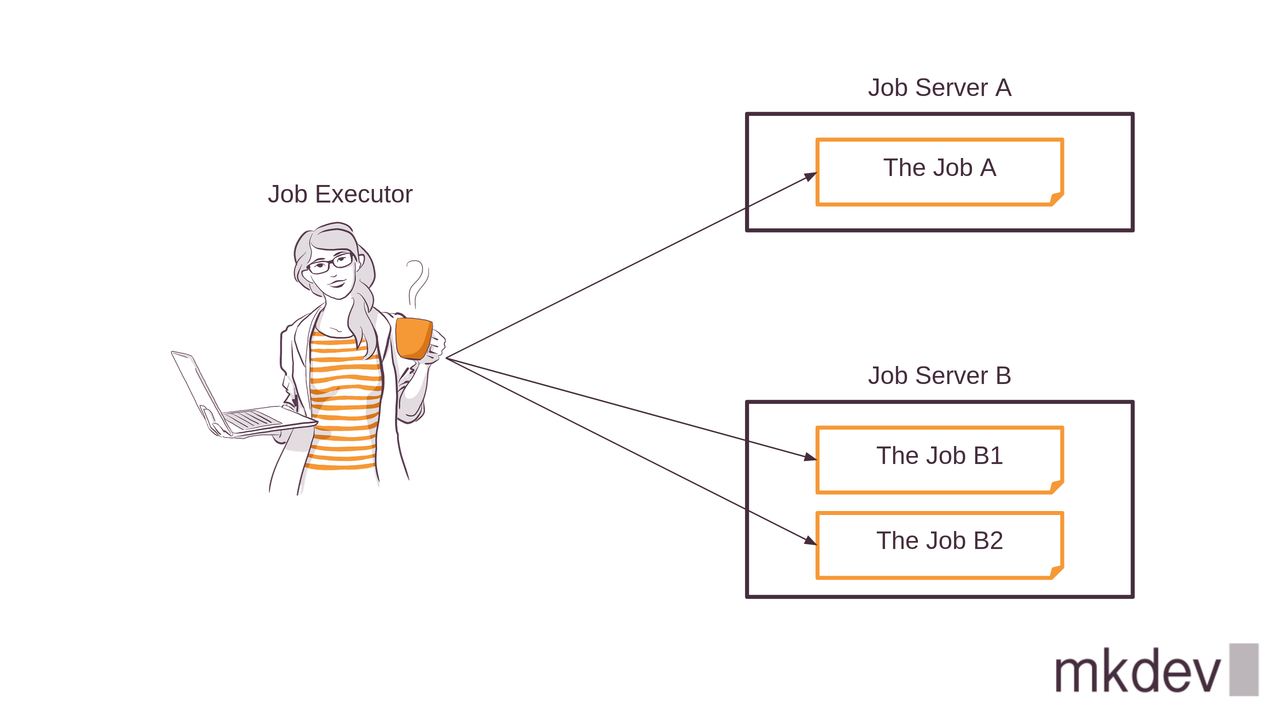

The next decision to make is who exactly is running this job. Let's call it a "job executor". At this point, you are the "job executor" - you go to your "job server" and just run the job by hand.

But then you have to run another job, which needs another "job server". And then another one. As time goes by, you end up with lots of different jobs and many different "job servers" to run them on.

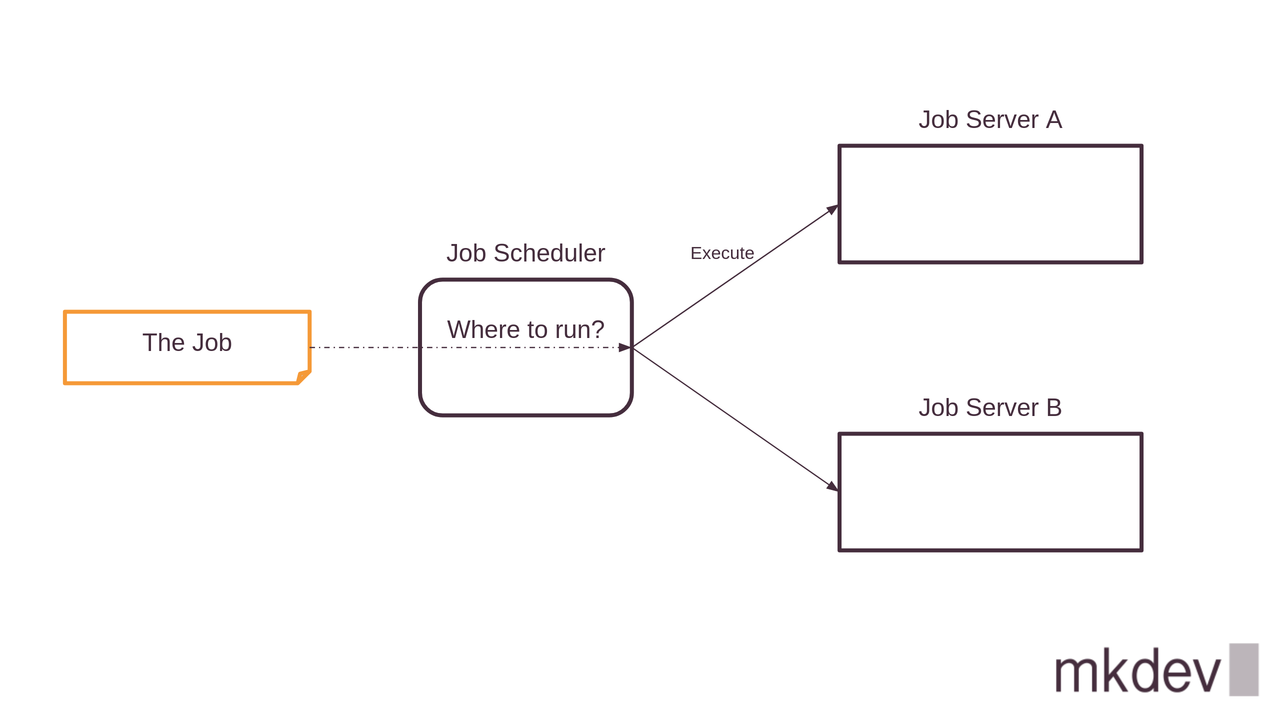

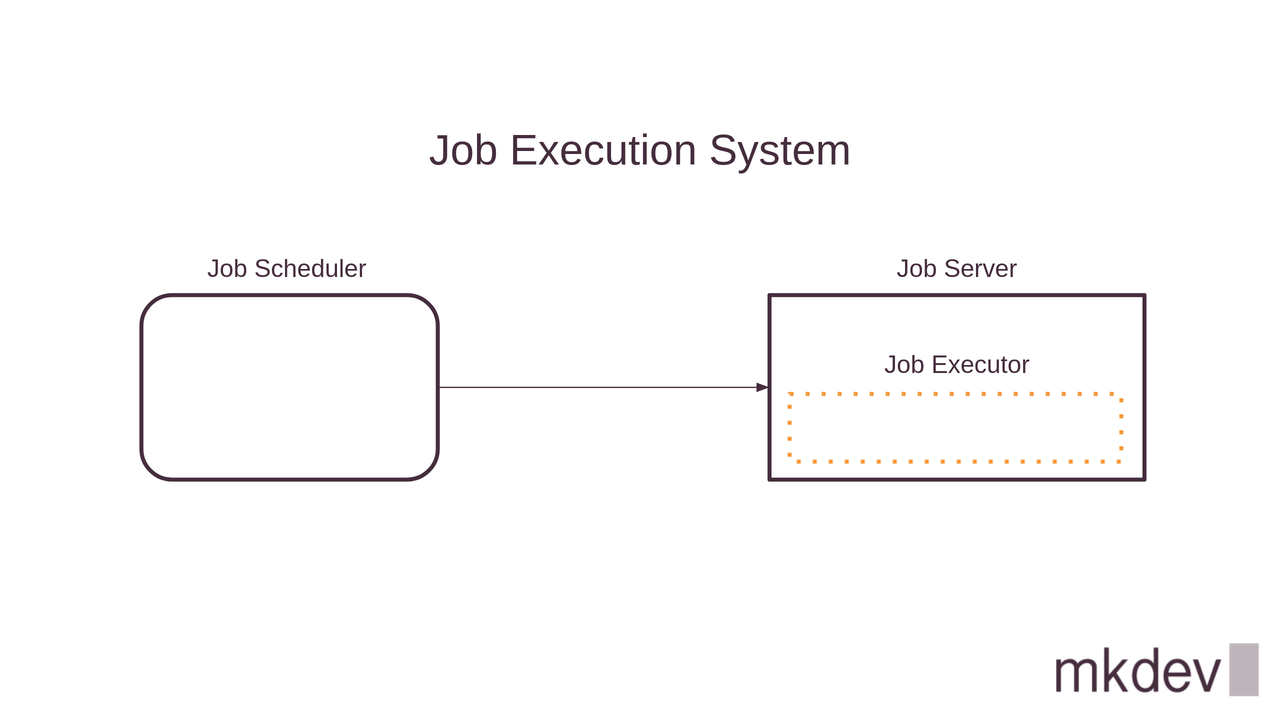

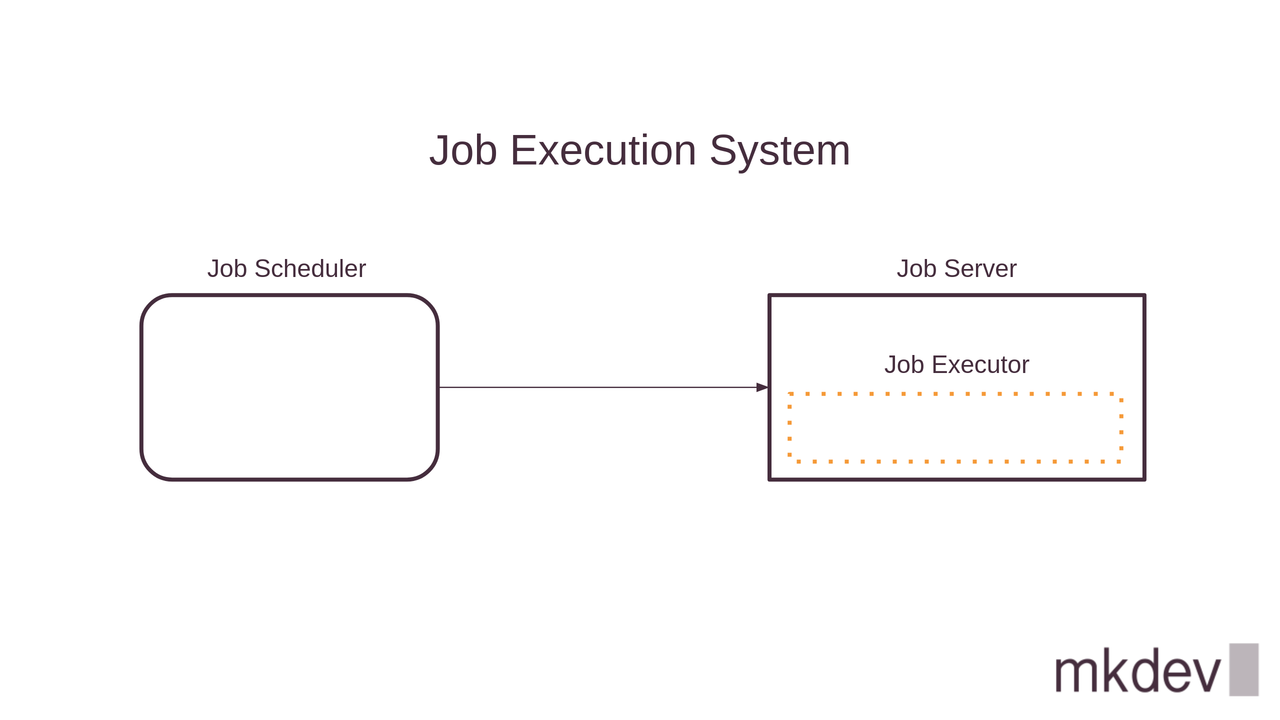

You don't want to be the "job executor" anymore. So you need another part of the system, which we will call a "job scheduler".

The most basic feature a "job scheduler" needs is the ability to decide which particular "job server" a given job should run on.

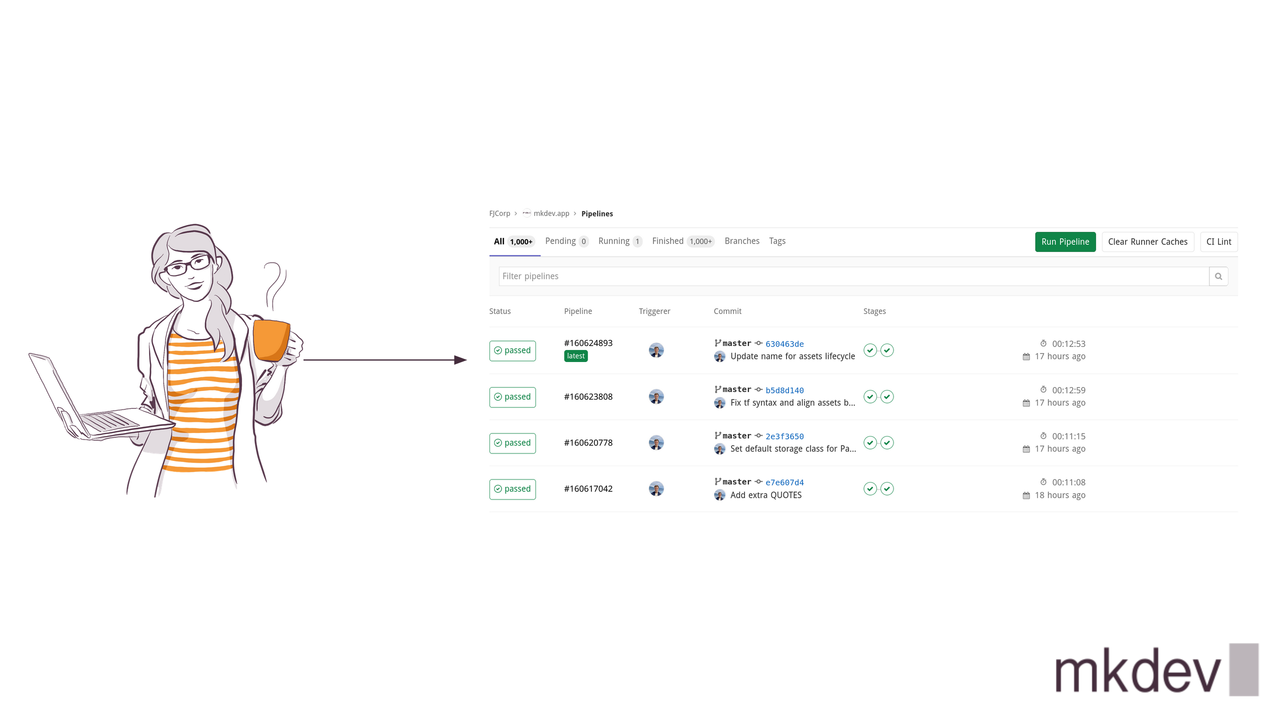
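As a rough illustration of that decision, here is a minimal Python sketch. It assumes each "job server" advertises a set of capabilities and each job lists what it requires; the names `JobServer`, `Job`, `pick_server` and the example servers are all hypothetical and not taken from any real tool.

```python
from dataclasses import dataclass, field


@dataclass
class JobServer:
    name: str
    # Languages, packages and tools installed on this server (assumed model).
    capabilities: set[str] = field(default_factory=set)


@dataclass
class Job:
    name: str
    # What the job needs in order to run.
    requires: set[str] = field(default_factory=set)


def pick_server(job: Job, servers: list[JobServer]) -> JobServer:
    """Return the first server whose capabilities cover the job's requirements."""
    for server in servers:
        if job.requires <= server.capabilities:
            return server
    raise LookupError(f"no job server can run {job.name!r}")


servers = [
    JobServer("build-box", {"python3", "git", "docker"}),
    JobServer("db-box", {"postgresql-client", "bash"}),
]
backup = Job("database-backup", {"postgresql-client"})
chosen = pick_server(backup, servers)
print(f"{backup.name} runs on {chosen.name}")
```

Real schedulers usually express the same idea with labels or tags on agents and runners, but the matching logic is conceptually similar.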

In addition, it would be very convenient if this "job scheduler" also had some kind of an interface, where you can see the history of your job executions and from which you can trigger your jobs.

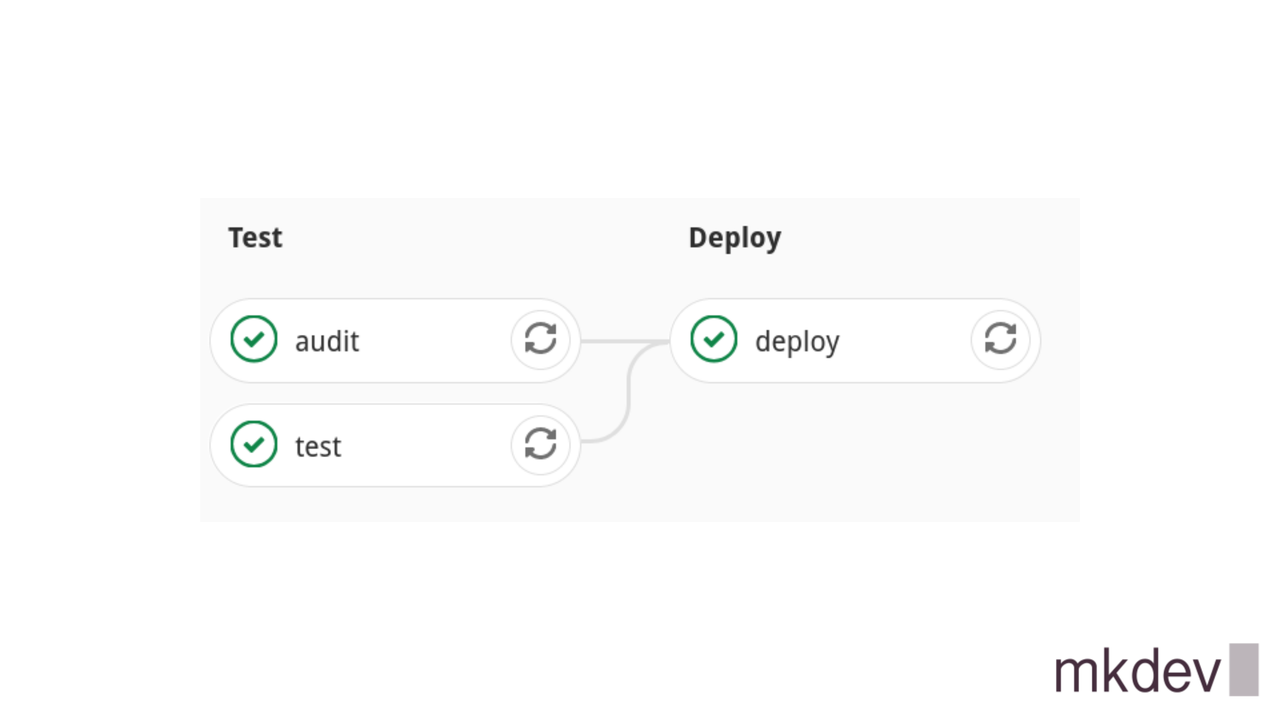
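To make the "history" part concrete, here is a small Python sketch under simplifying assumptions: a job is just a Python callable, and the history is an in-memory list. `RunRecord`, `history` and `trigger` are hypothetical names chosen for this example only.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable


@dataclass
class RunRecord:
    job_name: str
    started_at: datetime
    status: str   # "success" or "failed"
    output: str


# In-memory history; a real scheduler would persist this somewhere.
history: list[RunRecord] = []


def trigger(job_name: str, job: Callable[[], str]) -> RunRecord:
    """Run the job once and record the outcome in the history."""
    started = datetime.now(timezone.utc)
    try:
        output = job()
        status = "success"
    except Exception as exc:  # record the failure instead of crashing the scheduler
        output = str(exc)
        status = "failed"
    record = RunRecord(job_name, started, status, output)
    history.append(record)
    return record


def broken_job() -> str:
    raise RuntimeError("disk full")


trigger("validate-config", lambda: "all files valid")
trigger("database-backup", broken_job)

for record in history:
    print(record.job_name, record.status, record.output)
```

A web interface is then mostly a view over such records plus a button that calls something like `trigger`.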

A really good "job scheduler" would also be capable of chaining your jobs together, allowing you to build complex workflows and pipelines.
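Chaining can be pictured as a dependency graph that is executed in order. The sketch below uses `graphlib.TopologicalSorter` from the Python standard library (Python 3.9+); the `pipeline` contents and the `run_pipeline` helper are made up for illustration and do not mirror how any particular tool defines pipelines.

```python
from graphlib import TopologicalSorter
from typing import Callable

# Hypothetical pipeline: each job maps to the set of jobs that must finish first.
pipeline = {
    "build": set(),
    "test": {"build"},
    "deploy": {"test"},
}


def run_pipeline(graph: dict[str, set[str]], run_job: Callable[[str], None]) -> list[str]:
    """Run every job after its prerequisites and return the execution order."""
    executed = []
    for job_name in TopologicalSorter(graph).static_order():
        run_job(job_name)
        executed.append(job_name)
    return executed


order = run_pipeline(pipeline, lambda name: print(f"running {name}"))
```

With more branches in the graph, several jobs can become ready at the same time, which is where a scheduler can spread them across different "job servers".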

Ideally, by the way, you don't even start jobs yourself anymore. You let your automation do it for you, because your "job scheduler" provides a number of ways to trigger jobs, like an API or a scheduled execution.
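A scheduled trigger boils down to asking, at regular intervals, "which jobs are due now?". The following Python sketch assumes a simple fixed-interval schedule; `ScheduledJob` and `due_jobs` are hypothetical names, and real tools typically use cron expressions instead.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class ScheduledJob:
    name: str
    interval: timedelta   # how often the job should run
    last_run: datetime    # when it last ran


def due_jobs(jobs: list[ScheduledJob], now: datetime) -> list[str]:
    """Return the names of jobs whose interval has elapsed since the last run."""
    return [job.name for job in jobs if now - job.last_run >= job.interval]


jobs = [
    ScheduledJob("nightly-backup", timedelta(hours=24), datetime(2024, 1, 1, 2, 0)),
    ScheduledJob("config-check", timedelta(minutes=15), datetime(2024, 1, 2, 1, 50)),
]
now = datetime(2024, 1, 2, 2, 0)
due = due_jobs(jobs, now)
print(due)
```

An API trigger is the other common route: instead of a timer, an HTTP request from your automation asks the scheduler to start the job.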

Together, the job executors, the job servers and the job scheduler make up something we could call a "job execution system".

These simple concepts are hidden under different names inside numerous different systems. Each of those systems was built to solve a particular class of job execution problems. Let's take a look at some of them.

One of the most popular applications of a job execution system is to provide CI/CD for your applications.

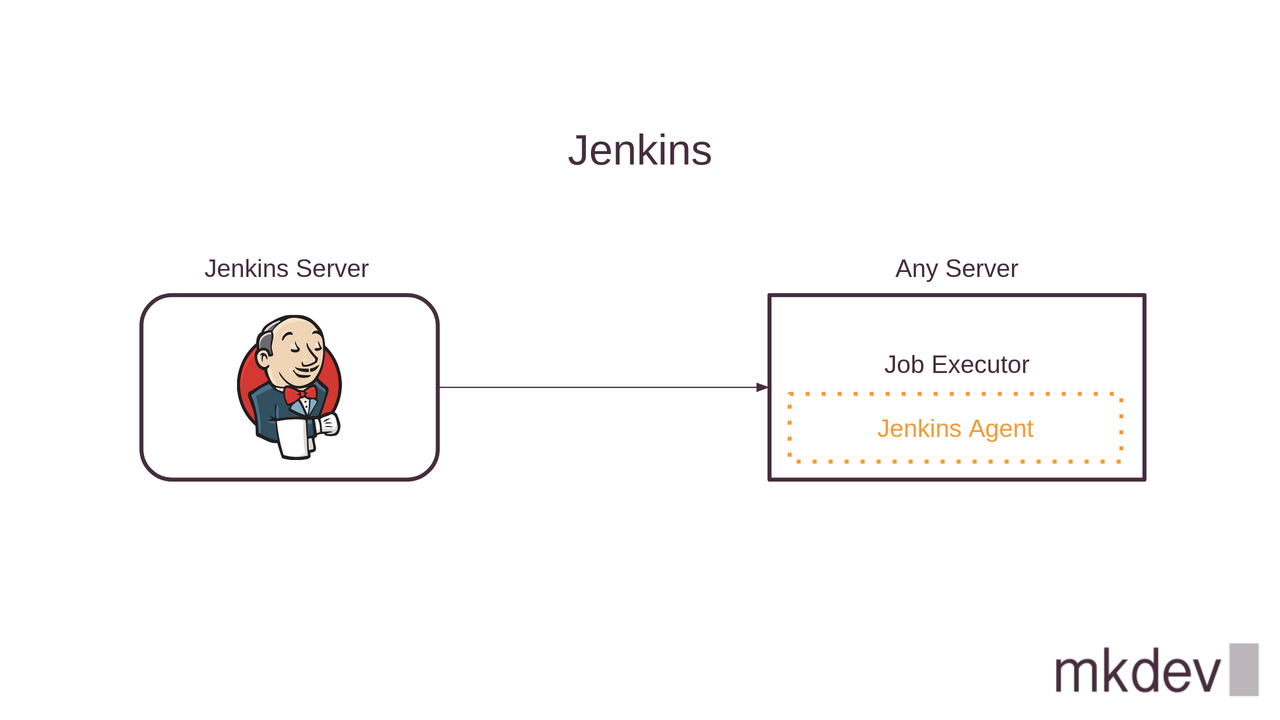

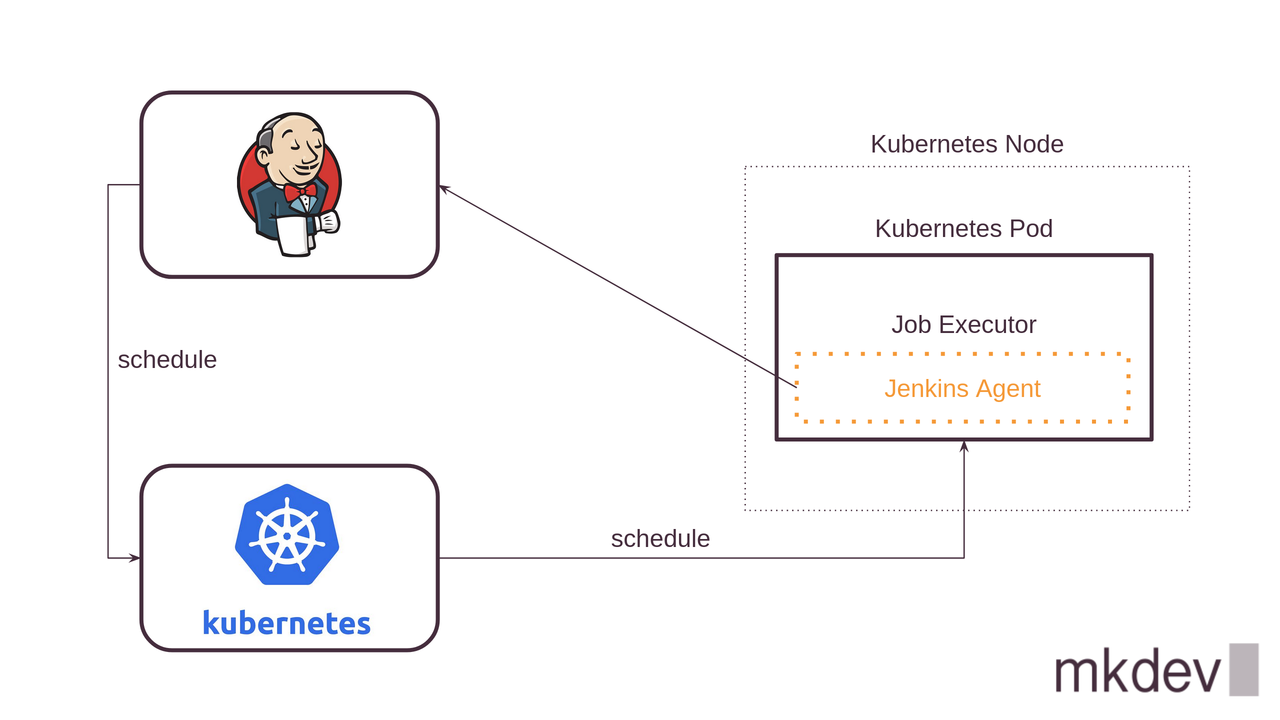

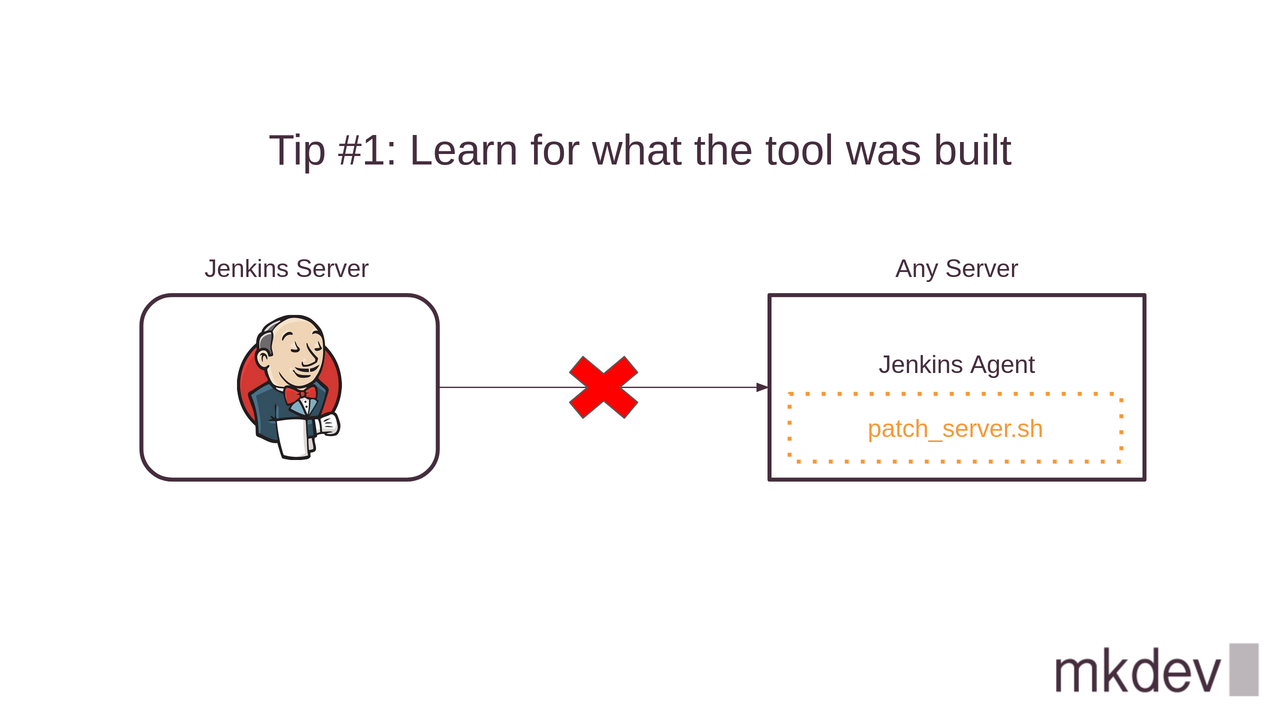

If we look at Jenkins, the Jenkins server is the "job scheduler", a "job server" is any server that has a "jenkins agent" installed, and the "jenkins agents" are your "job executors".

The typical jobs inside a Jenkins "job execution system" are building, testing and deploying your code. Jenkins has a huge number of features and third-party plugins that make running these kinds of jobs especially easy and convenient. Another "job execution system" for CI/CD is GitLab CI.

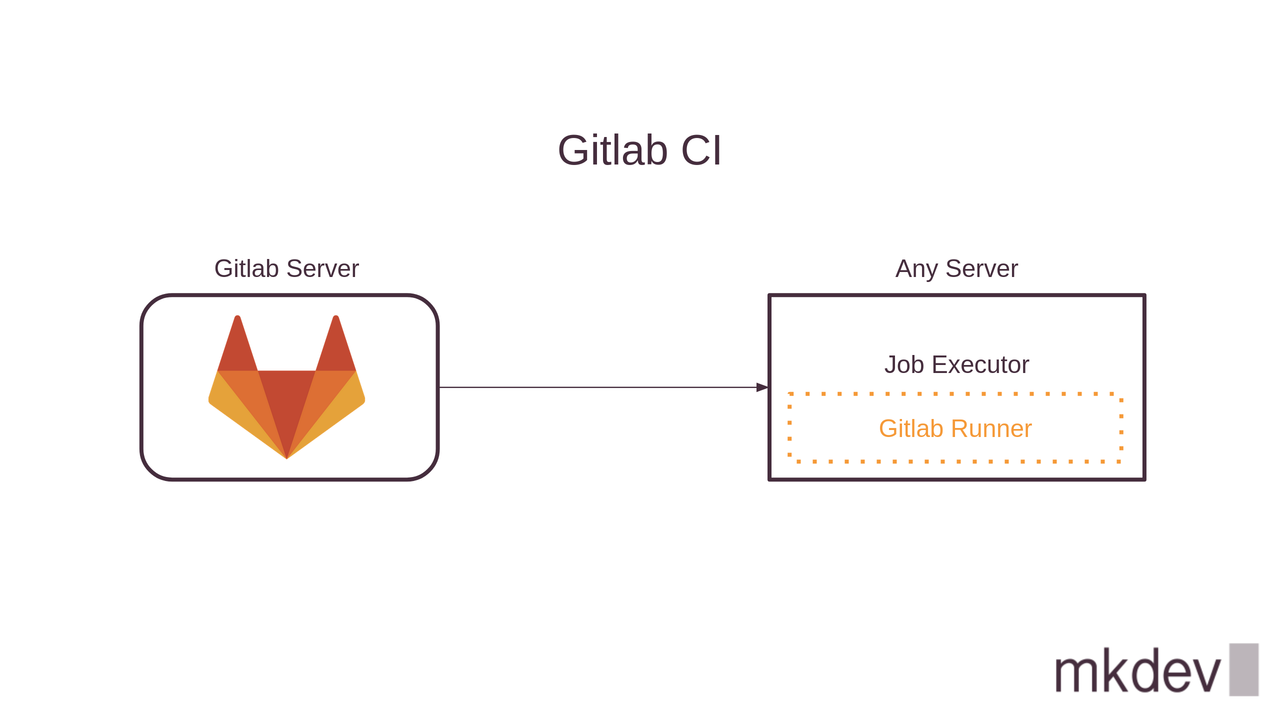

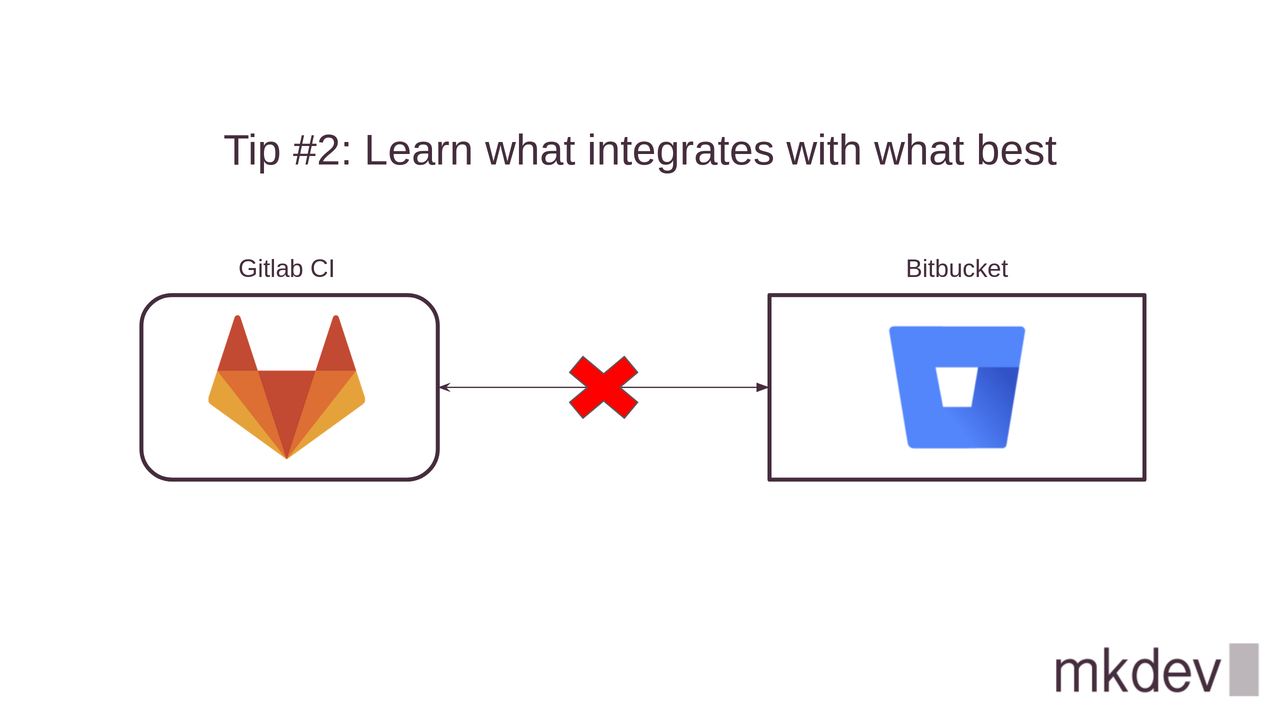

The GitLab server is the "job scheduler", a "job server" is any server that has a "gitlab runner" installed, and the "gitlab runner" is your "job executor". Like Jenkins, GitLab CI was purpose-built to build, test and deploy your code, so it's a great job execution system for this particular purpose.

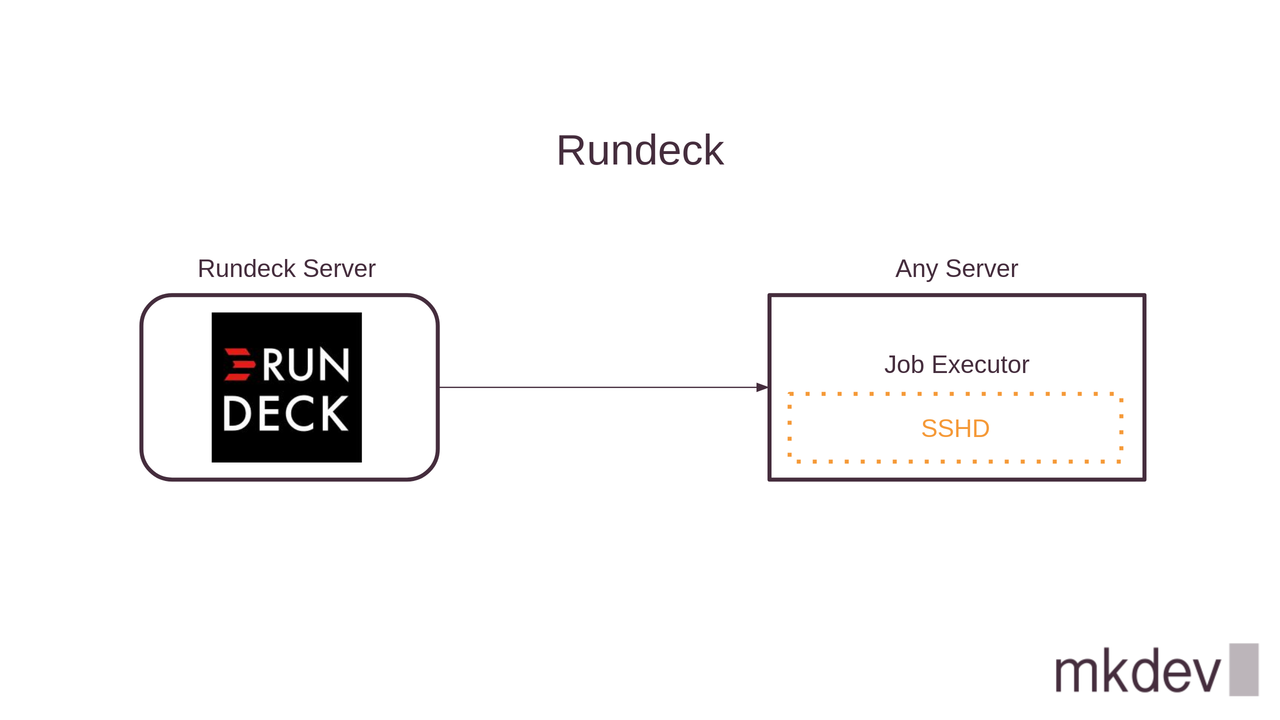

Rundeck, a popular automation tool, was not built with CI/CD in mind. It's a more generic system, in which the Rundeck server is the "job scheduler" and any other server that Rundeck can connect to is a "job server". Rundeck is perfect for various server automation tasks, like applying the latest patches. It's pretty flexible, which can be both an advantage and a disadvantage.

Some other examples of a "job execution system" are Apache Spark, which sits more on the big data, analytics and machine learning side, and Airflow, which is quite popular for really complex workflows and data processing.

It's sometimes a bit confusing to understand which tool to use to run jobs. When you start thinking about those "job execution systems", you begin to realize that beneath the different interfaces and slightly different sets of features, they all come down to the same simple concepts.

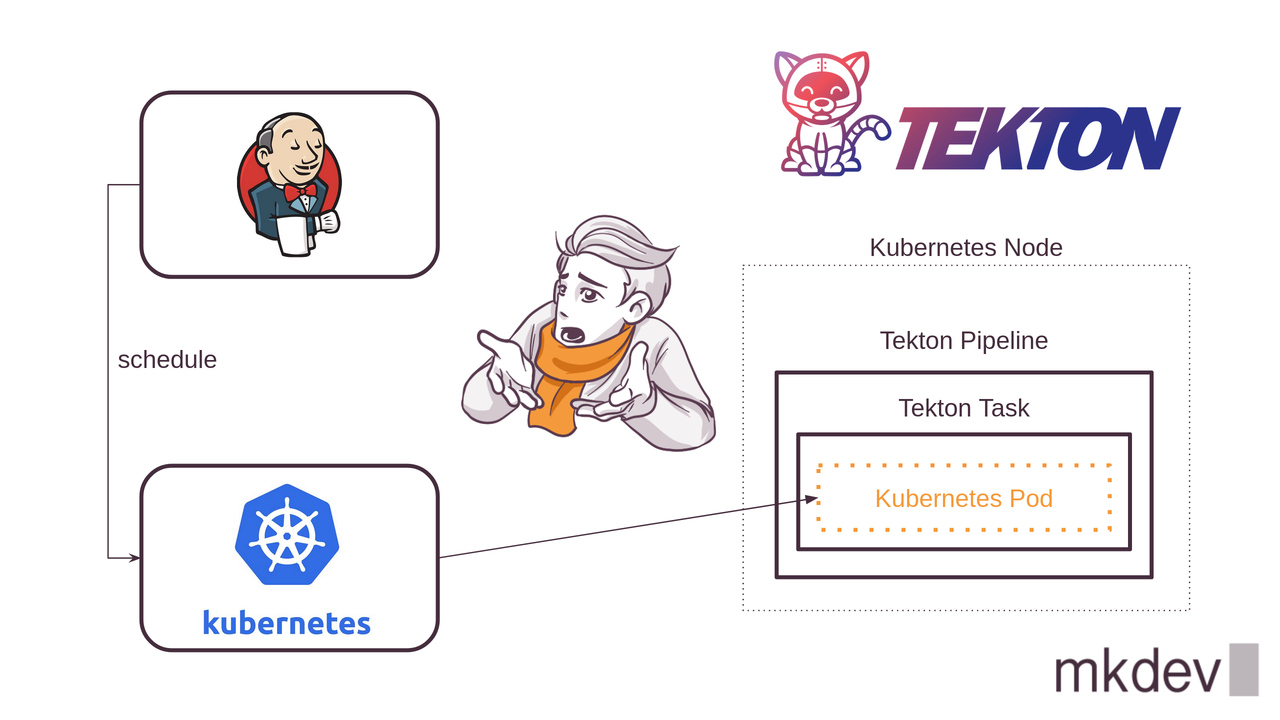

What doesn't help is the fact that one "job execution system" often hides another one underneath. For example, you can connect a Kubernetes cluster to a Jenkins server, so that Jenkins creates new temporary "jenkins agents" as Kubernetes pods.

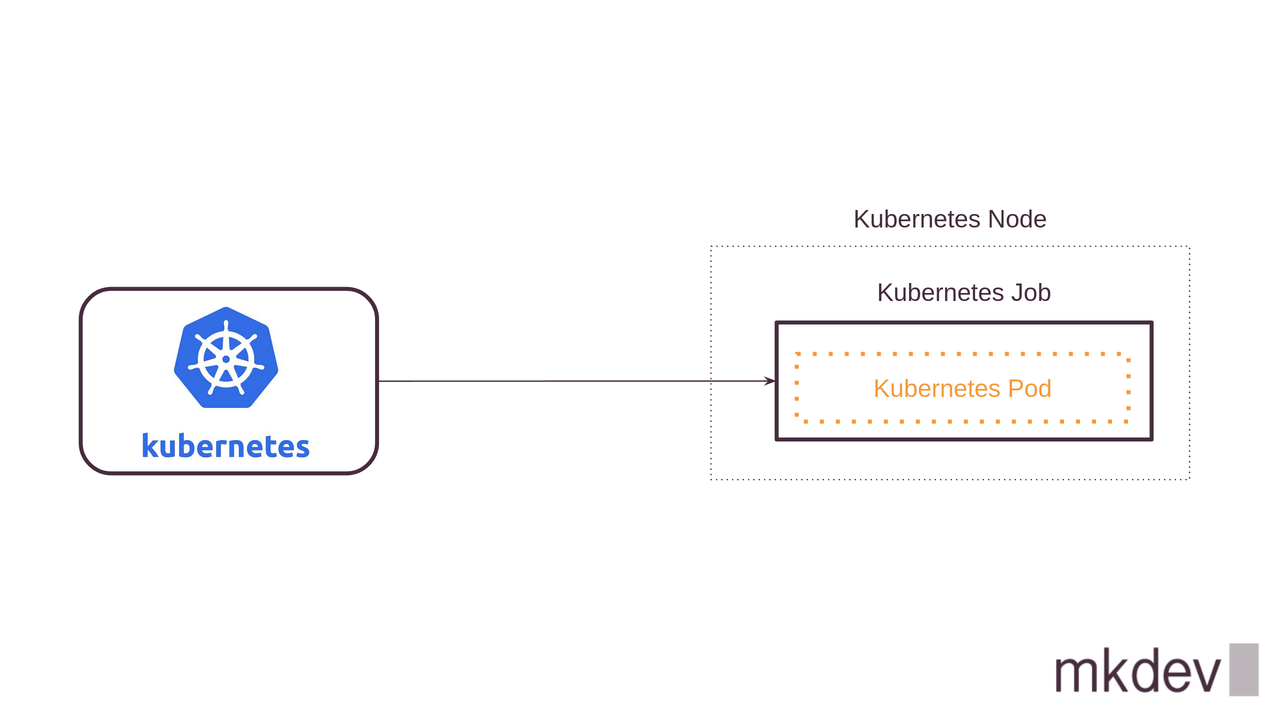

In such a case, the Jenkins "job execution system" hides another one underneath: the Kubernetes scheduler, which is responsible for scheduling "pods", which are basically "job executors", onto the Kubernetes nodes, which are basically "job servers".

And to make this particular case too confusing to handle, Kubernetes itself has a native concept of Jobs and CronJobs. And I did not even mention Tekton, which is a cloud native framework for building CI/CD systems.

To sum it up, there are many, many ways to run jobs these days. If I were to give a few tips for picking the right tool, they would be these:

First, try to understand the purpose each tool was created for. For example, you could totally run your data processing jobs inside Jenkins. You could also patch your servers with Airflow. But just because you can do it doesn't mean you should. Some tools are perfect for CI/CD, others for data processing or server automation.

Second, check which of the tools integrates best with your existing systems. You would probably not use GitLab CI if your primary source code repository is Bitbucket or GitHub. Similarly, if your operations team is efficient with Ansible, then check whether Ansible Tower makes more sense than Rundeck or Jenkins.

And third, always keep the global concepts in mind. Underneath all of those tools you will find something like a job server, a job executor and a job scheduler. The web interface might be different, integrations might vary and different terminology might be used. But in the end it all comes down to the same set of basic components. Don't let the plethora of tools and tool-specific features discourage you.
