Kubernetes Is Just an API: Let’s See It on a k3s Cluster Running on AWS Spot Fleet

Let's talk about Kubernetes.

What is Kubernetes?

Is it a complex system that interconnects dozens of different technologies, starting from an etcd cluster and requiring you to carefully pick the most appropriate networking plugin, storage layer, container runtime and many other things?

Or is it just a new standard runtime for your applications, with the implementation of this runtime being merely a technical detail?

One answer to this question is given by the Cloud Native Computing Foundation, the organization that, among many other things, runs the Certified Kubernetes Conformance Program.

According to CNCF, "Software conformance ensures that every vendor’s version of Kubernetes supports the required APIs, as do open source community versions."

Notice that they talk about APIs, not about the concrete technologies that provide these APIs.

As long as a distribution speaks and understands the same APIs, it is still Kubernetes.

Let's take k3s, the main hero of this article, as an example.

k3s, for one thing, does not need etcd.

The preferred way to run k3s is to use either the embedded SQLite database for single-server setups or an external datastore, such as a MySQL or PostgreSQL server, for highly available clusters.

Well, you can still use etcd, of course, if that's your preference, but that's not the main idea of k3s.

The main idea behind k3s is, in fact, to be the most lightweight and easiest-to-use Kubernetes distribution while still being certified by the CNCF.

The single-server control plane of k3s needs less than a gigabyte of RAM, so you can run it even on a Raspberry Pi. It was, indeed, created with the Internet of Things and edge computing in mind.

We gave k3s a try at mkdev and were pretty happy with the results.

Let me show exactly how we deploy our dirt-cheap, two-node k3s cluster.

Disclaimer: what you are about to see is not even close to a production Kubernetes cluster. This is the simplest possible k3s installation, which we only use for quick experiments and for running our CI jobs. We call it "paas" not because it is a real platform as a service, but because we can. If you plan to use k3s for production workloads, do not use this example as a reference architecture.

We are using Terraform to deploy everything.

If you are not familiar with Terraform, check out the free Terraform Lightning Course.

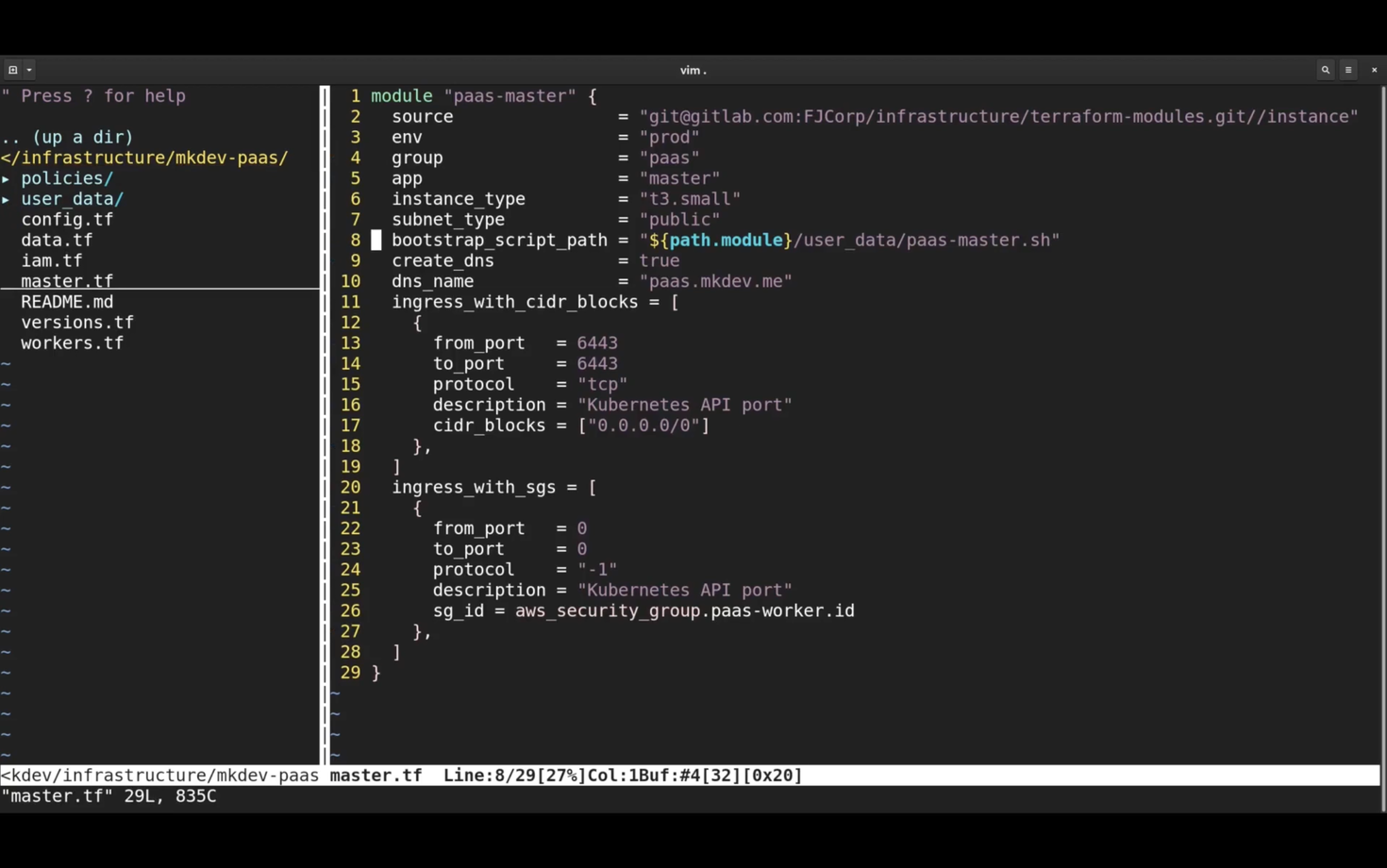

Let's look at master.tf first.

We are using our internal Terraform module to deploy EC2 machines.

This way we standardize and automate many things, from security group creation to IAM permissions and provisioning with AWS Session Manager.

The important part here is that we are using the t3.small instance type for the control plane instance. t3.small has just 2 gigabytes of RAM, but that is plenty for k3s.

According to the documentation, even half a gigabyte should be enough, but we found that, together with the embedded database, k3s can easily exceed this amount, at least on AWS with CentOS.

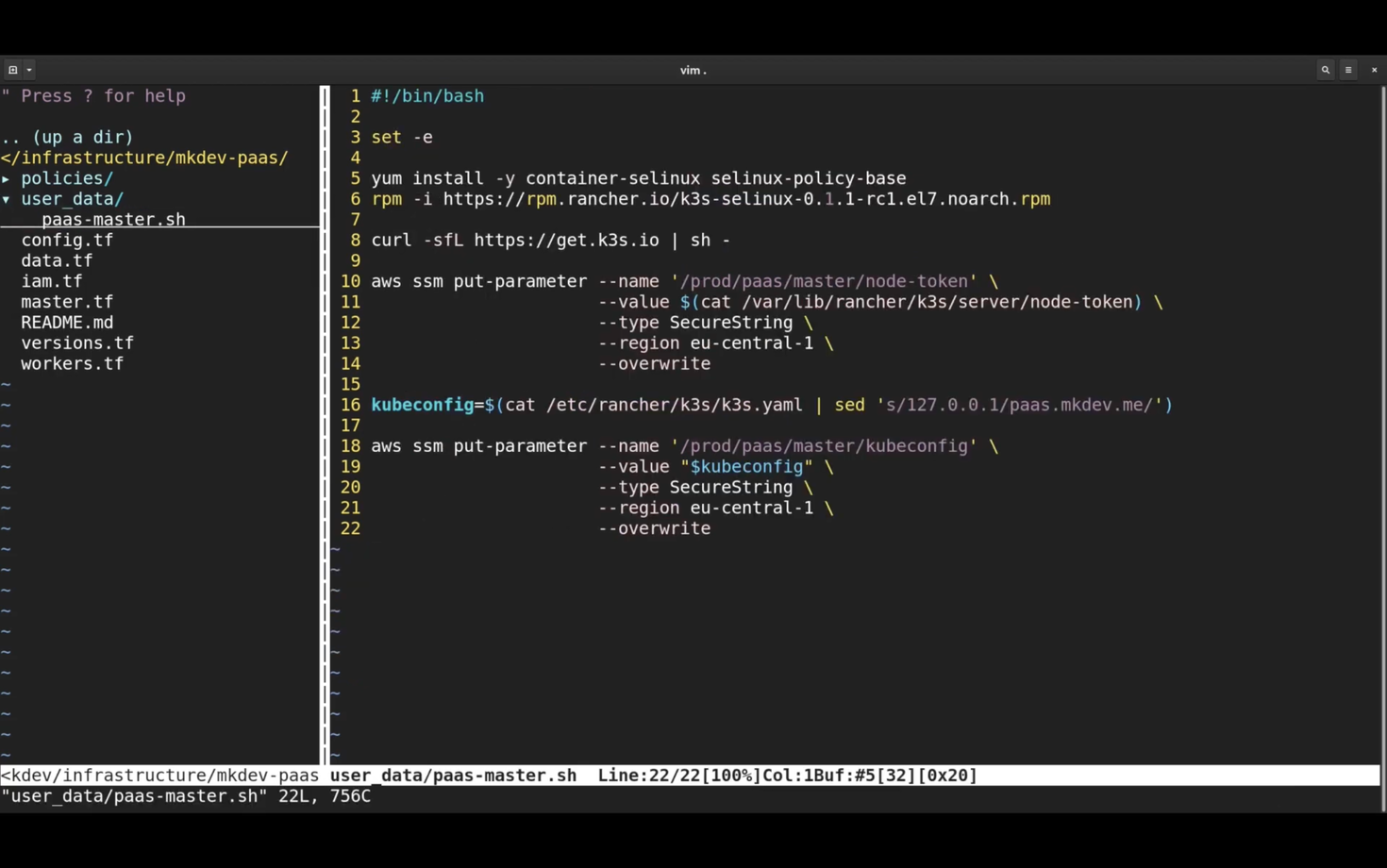

Now let's look at the paas-master.sh file.

k3s recommends Ubuntu, but we stick with CentOS. That's why the first lines of the script install additional SELinux policies.

After doing that, we run a one-liner that handles the complete installation of k3s.

We could supply a couple of environment variables to configure things like an external PostgreSQL database and so on.

We actually tested k3s with serverless AWS Aurora and it worked just great. Leave a comment if you want to see that setup in action.
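For readers who cannot make out the screenshot, here is a minimal sketch of what such a server bootstrap could look like. The install command is the documented k3s one-liner from get.k3s.io; the function name and the SELinux package list are assumptions, not the contents of the real paas-master.sh.

```shell
#!/bin/sh
# Hypothetical sketch of a k3s server bootstrap on CentOS;
# not the actual paas-master.sh from the article.

install_k3s_server() {
  # SELinux prerequisites (assumed package set; the k3s-selinux policy
  # package from the Rancher RPM repository may be needed as well).
  yum install -y container-selinux selinux-policy-base

  # The official installer: downloads the k3s binary and registers
  # a service that runs "k3s server".
  curl -sfL https://get.k3s.io | sh -
}

# Nothing runs until the function is called explicitly:
# install_k3s_server
```

Wrapping the steps in a function keeps the file safe to source and review before anything touches the machine.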
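As a rough illustration of that configuration, the sketch below builds a PostgreSQL endpoint and hands it to the installer through the K3S_DATASTORE_ENDPOINT environment variable, which k3s documents as the counterpart of its datastore endpoint option. The function names and every argument value are placeholders, not the setup we tested.

```shell
#!/bin/sh
# Hypothetical sketch; credentials, host and database are placeholders.

# Builds a PostgreSQL datastore endpoint in the form k3s expects.
# usage: datastore_endpoint USER PASSWORD HOST PORT DATABASE
# Note: a password with special characters would need URL-encoding first.
datastore_endpoint() {
  printf 'postgres://%s:%s@%s:%s/%s\n' "$1" "$2" "$3" "$4" "$5"
}

# Installs a k3s server that uses the given external datastore
# instead of the embedded SQLite database.
install_k3s_with_datastore() {
  K3S_DATASTORE_ENDPOINT="$1"
  export K3S_DATASTORE_ENDPOINT
  curl -sfL https://get.k3s.io | sh -
}

# Example:
# install_k3s_with_datastore "$(datastore_endpoint k3s secret db.example.com 5432 k3s)"
```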

After the installation is done, we save two values to SSM as secure parameters.

One is a node-token - this token is needed to register k3s worker nodes, which we will do in a bit.

The second one is a kubeconfig - we need it to be able to connect to the cluster.

Also note the sed one-liner: we need the kubeconfig to point to the externally available endpoint, not to localhost.
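These two steps could be sketched roughly as follows. The file locations are the k3s defaults; the parameter names (/paas/node-token, /paas/kubeconfig) and the function names are made up for illustration, and the sed expression is one possible way to do the rewrite, not necessarily the one in our script.

```shell
#!/bin/sh
# Hypothetical sketch; SSM parameter names are placeholders.

# Reads a kubeconfig on stdin and points it at an external host
# instead of the default 127.0.0.1.
rewrite_kubeconfig_server() {
  sed "s/127\.0\.0\.1/$1/"
}

# usage: save_cluster_secrets EXTERNAL_HOST
save_cluster_secrets() {
  external_host="$1"

  # Token that agents present when joining the cluster.
  aws ssm put-parameter --overwrite --type SecureString \
    --name "/paas/node-token" \
    --value "$(cat /var/lib/rancher/k3s/server/node-token)"

  # Admin kubeconfig, rewritten to use the externally reachable endpoint.
  aws ssm put-parameter --overwrite --type SecureString \
    --name "/paas/kubeconfig" \
    --value "$(rewrite_kubeconfig_server "$external_host" < /etc/rancher/k3s/k3s.yaml)"
}
```

For example, `rewrite_kubeconfig_server k3s.example.com < /etc/rancher/k3s/k3s.yaml` prints the kubeconfig with the server line pointing at k3s.example.com.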

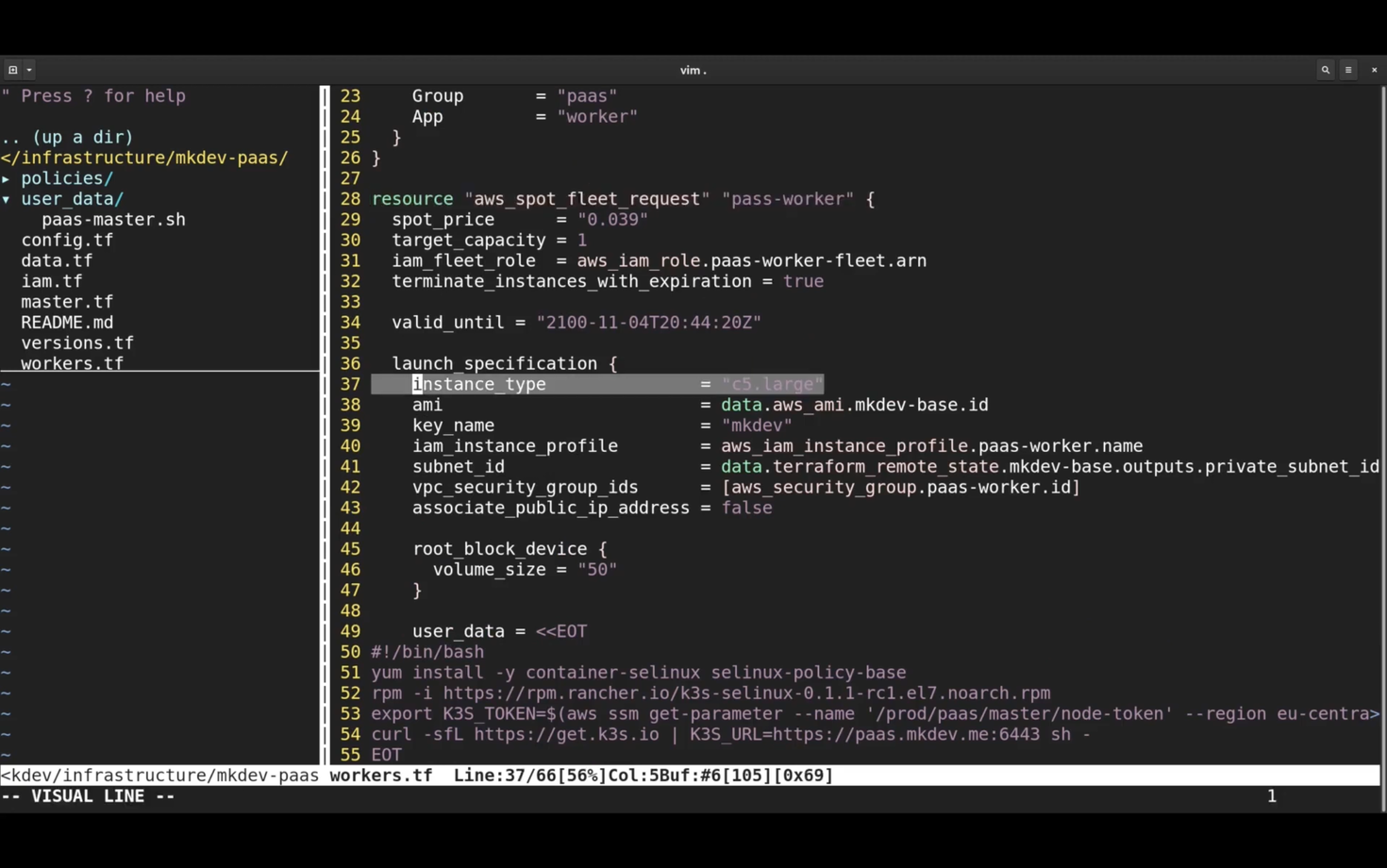

Now let's check out workers.tf.

For workers, we are using Spot Fleet, which allows us to run powerful instance types at a very low price.

As with the master, we need to install SELinux policies for k3s.

After that, we are pulling the node token from SSM and then we use this token to install k3s.

It's exactly the same installation script, but because we set the K3S_URL and K3S_TOKEN environment variables, this script will configure a k3s agent rather than a k3s server.

The rest of the Terraform templates define the required IAM permissions, so that workers are able to pull the node token from SSM.
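A minimal sketch of that worker bootstrap, assuming the token lives in a hypothetical /paas/node-token parameter and the control plane is reachable on the default API port 6443. K3S_URL and K3S_TOKEN are the variables the k3s installer documents; everything else here is a placeholder.

```shell
#!/bin/sh
# Hypothetical sketch of a worker bootstrap; names are placeholders.

# The k3s API server listens on port 6443 by default.
server_url() {
  printf 'https://%s:6443\n' "$1"
}

# usage: join_cluster SERVER_HOST
join_cluster() {
  server_host="$1"

  # --with-decryption returns the plaintext of a SecureString parameter.
  K3S_TOKEN="$(aws ssm get-parameter --with-decryption \
    --name "/paas/node-token" \
    --query Parameter.Value --output text)"
  K3S_URL="$(server_url "$server_host")"
  export K3S_TOKEN K3S_URL

  # Same installer as on the server; with K3S_URL set it installs an agent.
  curl -sfL https://get.k3s.io | sh -
}
```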

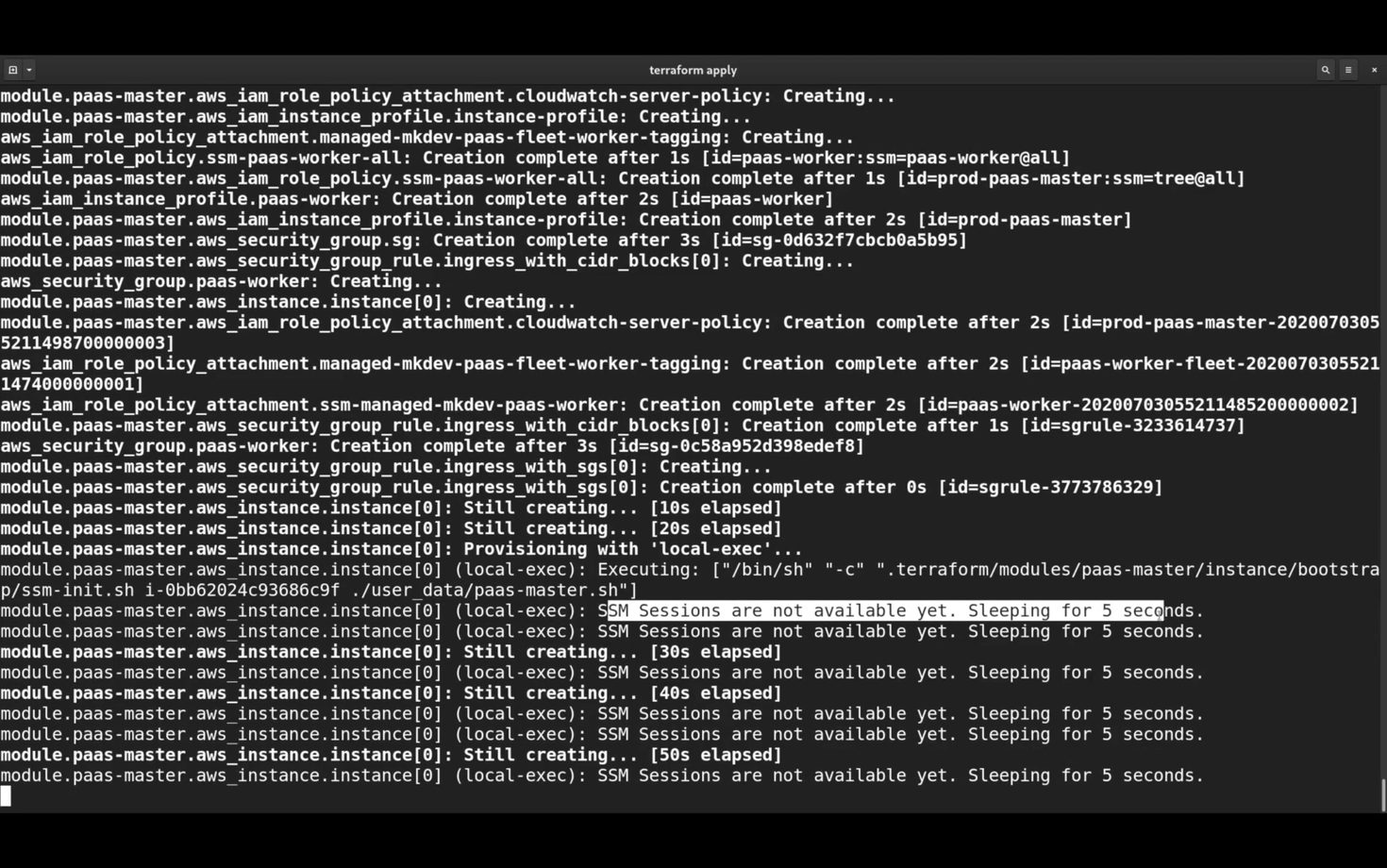

Let's give it a try and apply these templates.

This is the installation process of k3s. We are using AWS Session Manager when we need to bootstrap machines synchronously. There is a separate article about Session Manager on our website, if you want to learn more.

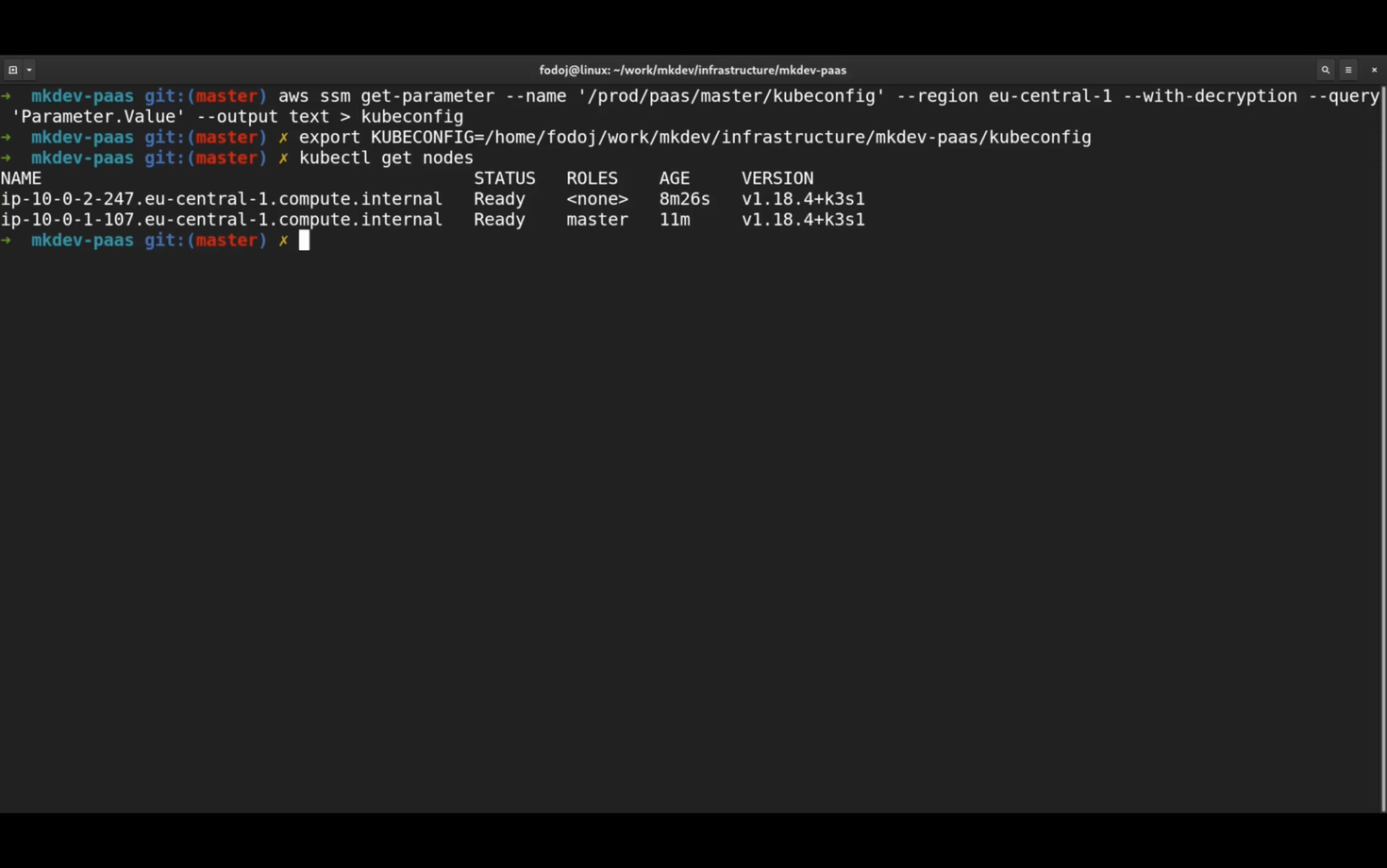

Now let me pull the kubeconfig from SSM. Note the usage of SecureString - do not use regular String Parameters for secrets.

Then I will run the kubectl get nodes command.
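In shell terms, these two steps might look like the sketch below. The parameter name and the output path are placeholders; the aws and kubectl invocations are standard ones.

```shell
#!/bin/sh
# Hypothetical sketch; the parameter name and file path are placeholders.

# usage: fetch_kubeconfig PARAMETER_NAME DESTINATION_FILE
fetch_kubeconfig() {
  aws ssm get-parameter --with-decryption \
    --name "$1" \
    --query Parameter.Value --output text > "$2"
  # The file holds cluster admin credentials, so keep it private.
  chmod 600 "$2"
}

# Example (requires AWS credentials and network access to the cluster):
# fetch_kubeconfig /paas/kubeconfig ./paas.kubeconfig
# KUBECONFIG=./paas.kubeconfig kubectl get nodes
```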

Seems like the new two-node Kubernetes cluster is up and running.

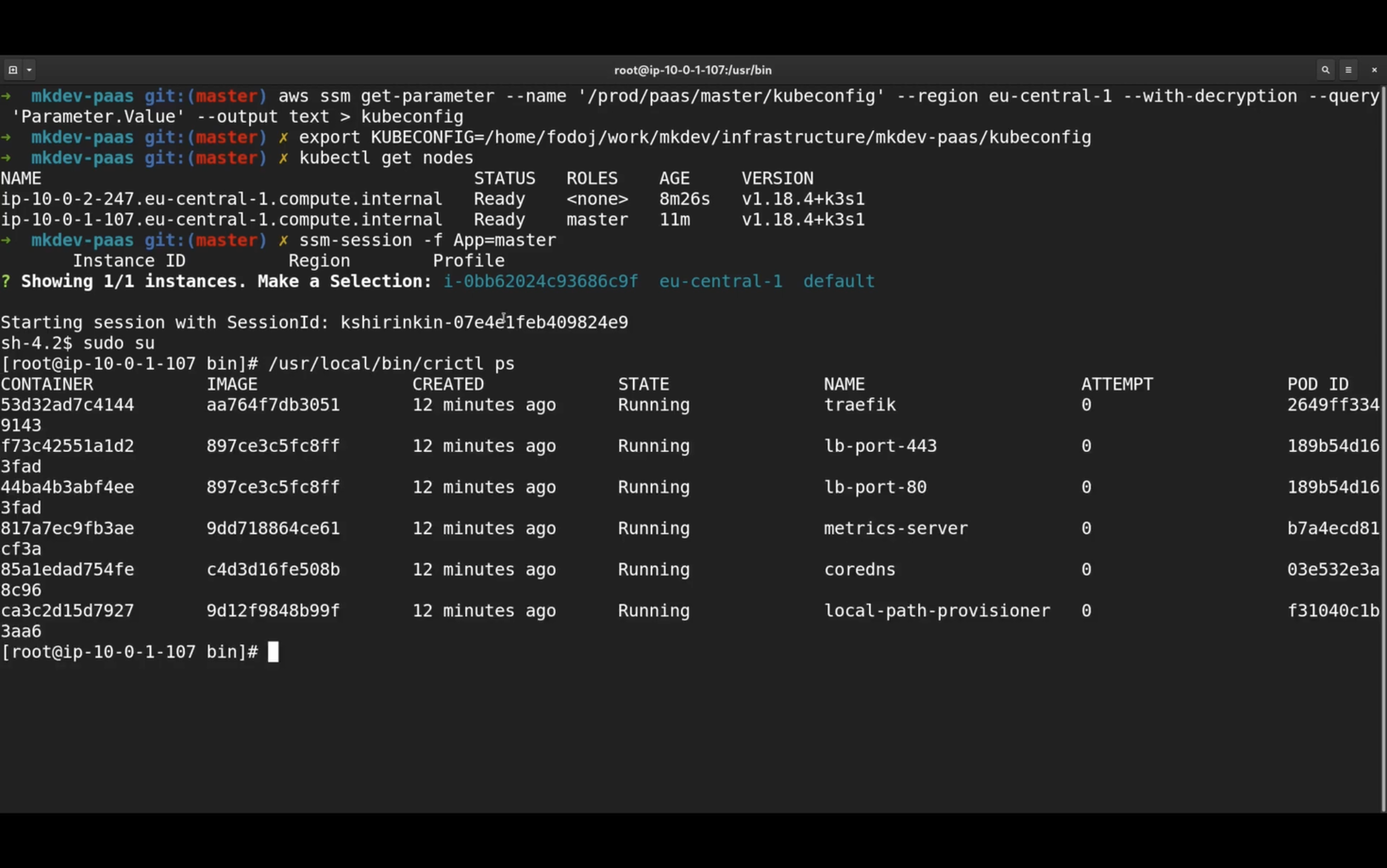

Let's log in to the control plane and see what's happening there.

I am using the ssm-session tool, which simplifies logging into instances via Session Manager.

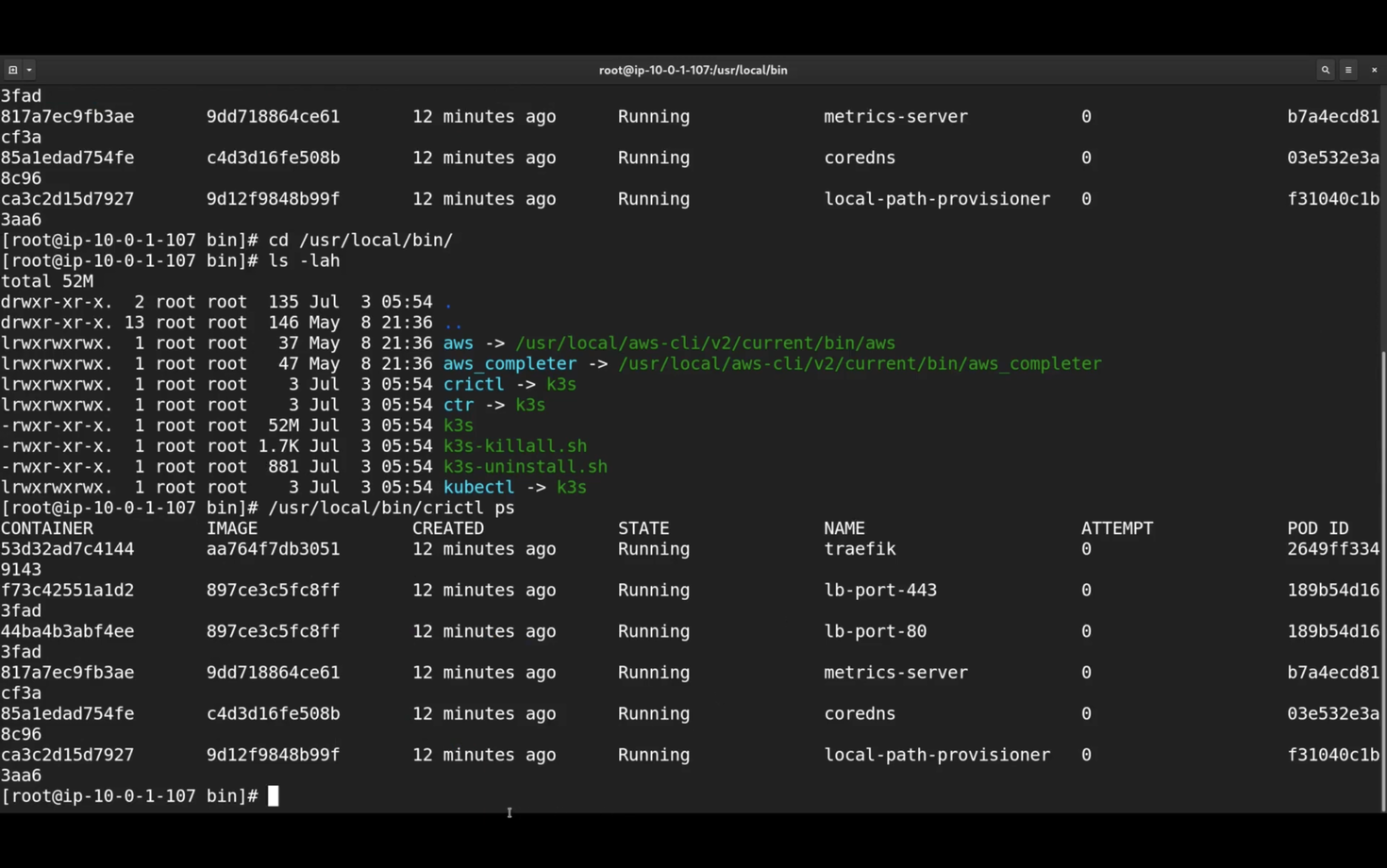

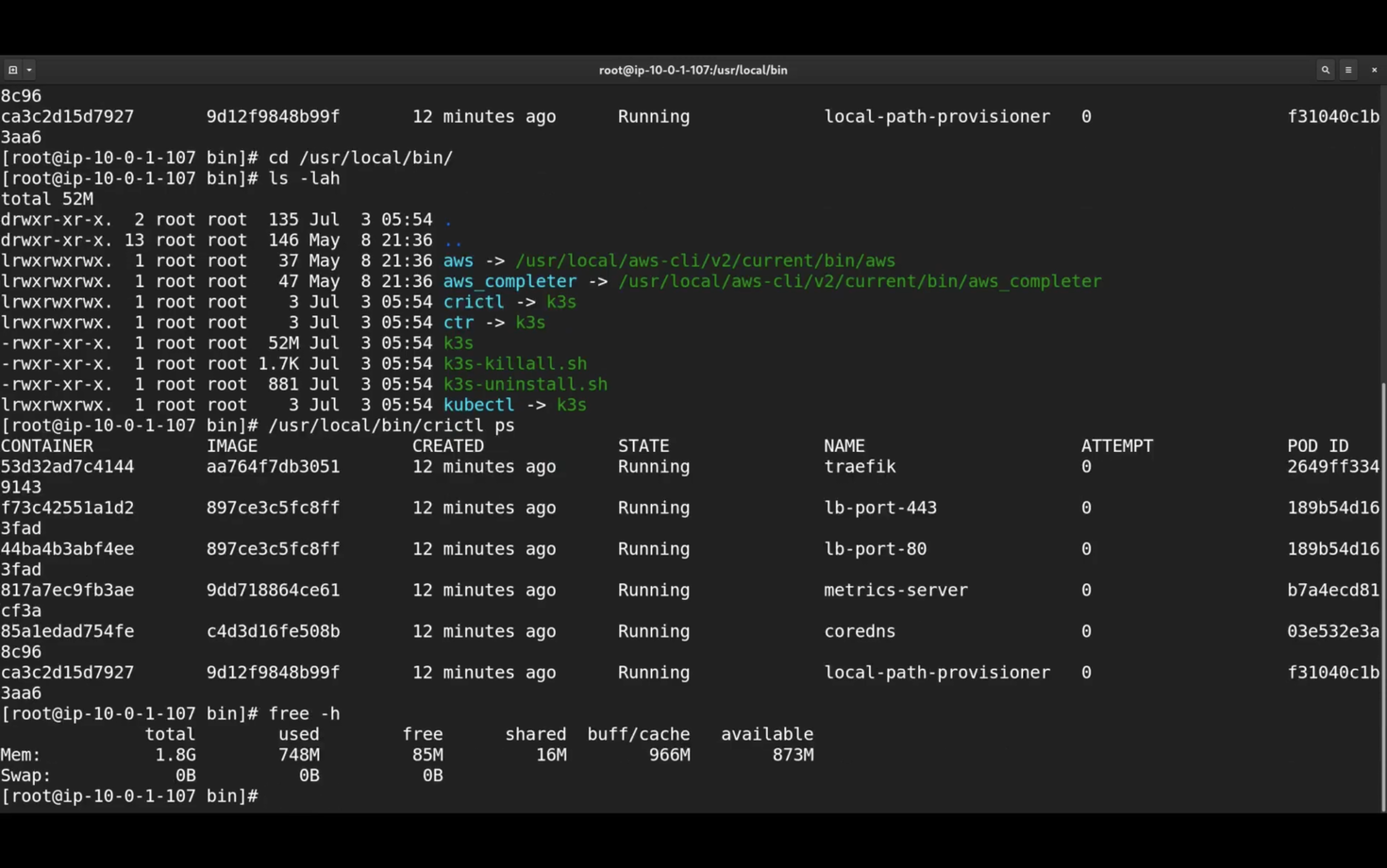

I can run "crictl ps" to see running containers. But the interesting part is that there is no dedicated crictl binary. If we check the /usr/local/bin directory, we will see that crictl is a symlink to k3s, because the complete k3s is just a single binary.
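One way to check this yourself is a small helper like the one below. The function name is made up; it only relies on readlink, and the expected result assumes the default k3s install layout.

```shell
#!/bin/sh
# Prints what a path points to if it is a symlink,
# or the path itself otherwise.
link_target() {
  if [ -L "$1" ]; then
    readlink "$1"
  else
    printf '%s\n' "$1"
  fi
}

# On a k3s node with the default layout, these would be expected
# to resolve to the k3s binary:
# for tool in crictl ctr; do link_target "/usr/local/bin/$tool"; done
```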

Let's run /usr/local/bin/crictl ps:

k3s comes pre-packaged with CoreDNS, a local storage provisioner and Traefik. There is not much else running here. Everything k3s needs, like flannel and containerd, is pre-packaged inside this single binary, which makes it really easy to deploy and update.

Now let's check memory consumption.

Not bad, less than a gigabyte of RAM is used. Of course, this cluster does absolutely nothing, but it's still impressive how lightweight k3s is.
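If you want to capture that number in a script rather than read it off the screen, a tiny filter over the output of free -m is enough. The helper name is hypothetical.

```shell
#!/bin/sh
# Reads `free -m` output on stdin and prints the "used" column
# of the Mem: row, in MiB.
used_mib() {
  awk '/^Mem:/ { print $3 }'
}

# Example:
# free -m | used_mib
```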

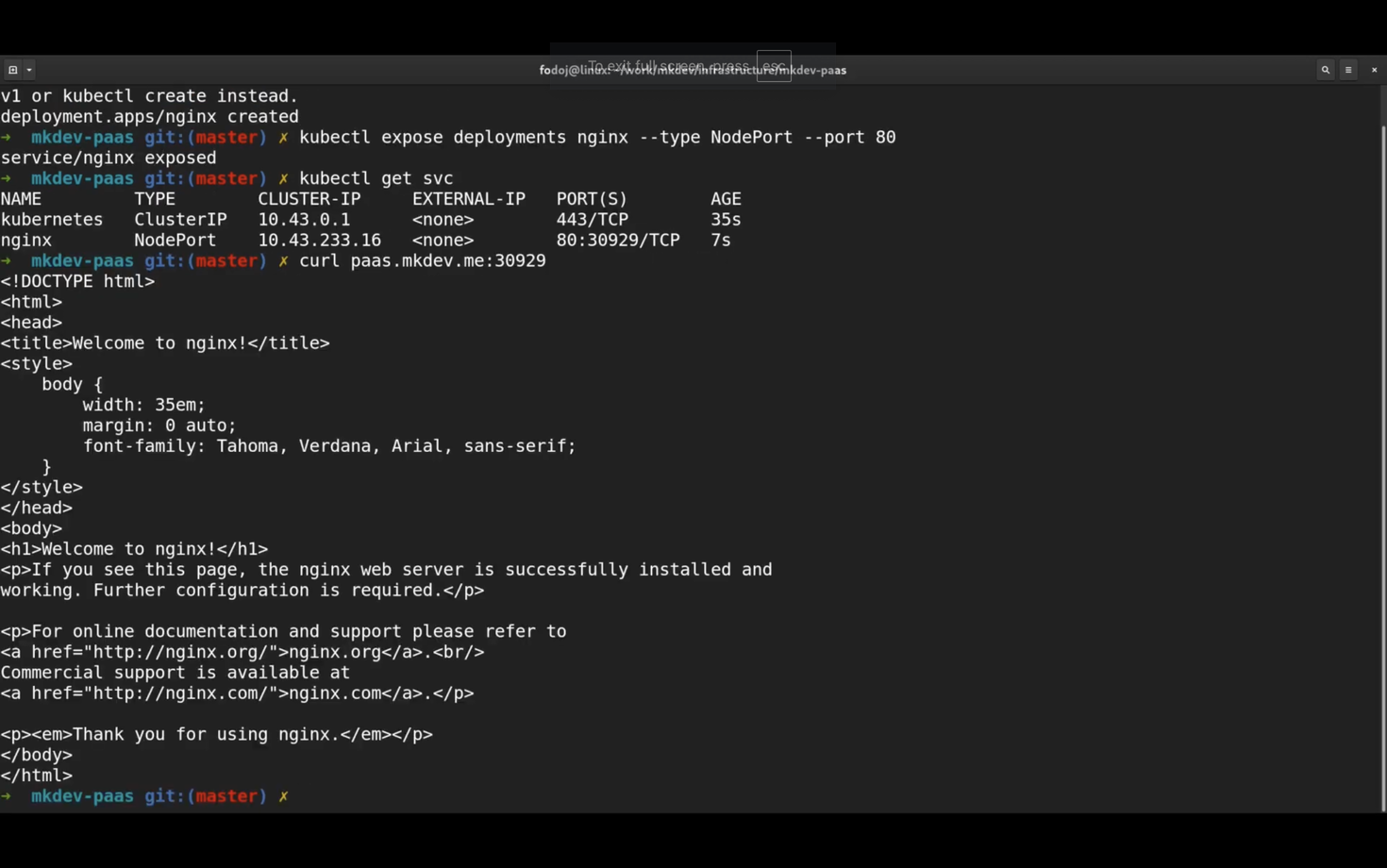

Finally, let me run a simple application there, just a small nginx deployment with a NodePort service:
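A minimal sketch of such a deployment, written as a shell function that prints a standard manifest. The resource names, labels and image tag are assumptions and not necessarily what is used in the video.

```shell
#!/bin/sh
# Prints a minimal nginx Deployment and a NodePort Service.
# Names, labels and the image tag are placeholders.
nginx_manifest() {
  cat <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:stable
          ports:
            - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx
spec:
  type: NodePort
  selector:
    app: nginx
  ports:
    - port: 80
      targetPort: 80
EOF
}

# Example:
# nginx_manifest | kubectl apply -f -
# kubectl get service nginx   # shows the node port that was assigned
```

Since no nodePort is specified, Kubernetes assigns one from its NodePort range, and the instance security group has to allow traffic on that port for the browser test to work.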

Now let's access it in the browser.

And it works as expected.

k3s is a real, certified Kubernetes distribution.

It provides the same APIs, and you can run your applications just like you would on a regular Kubernetes cluster.

Of course, k3s was built with certain use cases in mind, like the Internet of Things and edge computing, and to fit these use cases it might at some point lack some specific drivers and features. It is an opinionated distribution, not a general-purpose one.

But for the majority of applications, as well as local development and quick experiments, k3s is just perfect.

And if you add an external datastore and scale up the number of control plane nodes, k3s can serve as a real production Kubernetes cluster.

Here's the same article in video form for your convenience: