My company is using ChatGPT. Does the AI Act Literacy requirement apply to us?

On February 2nd, 2025, the first two chapters of the EU's AI Act became applicable, which means businesses must now prioritize AI compliance and governance to meet the new regulatory standards. Besides targeted bans on prohibited AI practices (such as subliminal techniques, social scoring and several other practices), a rather broad "AI Literacy" requirement also became law.

Understanding the AI Literacy Requirement in the EU AI Act

What does this AI Literacy requirement mean for your business? AI literacy is now a core part of AI governance and a compliance obligation in its own right. If your staff or services involve any AI system, the AI Act requires your business to

ensure, to their best extent, a sufficient level of AI literacy of their staff and other persons dealing with the operation and use of AI systems on their behalf ...

Source: REGULATION (EU) 2024/1689, Article 4

"We're only using ChatGPT (or Gemini or Le Chat or Perplexity or ...) to help us be more efficient at work," you may object. "Surely that's not enough to make us subject to the AI Act?!"

Not so fast! The AI Act provides a legal framework that defines what qualifies as an AI system under EU law. A literal reading of what counts as an AI system, and of which uses of AI systems are in scope, seems pretty clear. Article 3(1) of the AI Act states:

‘AI system’ means a machine-based system that is designed to operate with varying levels of autonomy and that may exhibit adaptiveness after deployment, and that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments;

This definition implies that an AI system under the EU AI Act has the following key attributes:

- Operates with varying levels of autonomy

- May exhibit adaptiveness after deployment (the definition says "may," so this attribute is not strictly required)

- Infers from its input how to generate predictions, content, recommendations, or decisions

- Functions based on explicit or implicit objectives

- Produces outputs that can influence physical or virtual environments

Let’s go through these attributes one by one to see whether AI chatbots meet the AI Act definition.

Do AI Chatbots operate with autonomy?

Providing additional context often helps, but users do not need to give step-by-step instructions, as they would in a programming language, for AI chatbots to work. That criterion: check.

Do AI Chatbots exhibit adaptiveness after deployment?

Also check: near-constant updates to AI chatbots, in the form of more recent training data, user feedback mechanisms, and access to near-real-time web sources, provide a level of adaptiveness unheard of in standard, non-AI software systems.

Do AI chatbots generate content, recommendations, decisions or predictions?

Evidently yes: you can verify this by simply opening your ChatGPT client and sending a prompt. Another easy "check." But what about the explicit or implicit objectives?

What are the Explicit and Implicit Objectives of AI Chatbots?

In contrast to standard software, modern chatbots are trained to satisfy a mix of implicit and explicit objectives.

First, the basic machine learning task of the large language models (LLMs) that power AI chatbots is to accurately predict the next word (more precisely, the next token) of any text in their training data, be it Wikipedia entries, research articles, blog posts, or computer code. This description applies to causal LLM architectures; masked language models use a slight variant of this task.

Second, chatbots also include a large set of explicit text instructions, the so-called "system prompt," on what to answer, what not to answer, and how to phrase answers. Lastly, most modern chatbots are further trained on which of several possible responses humans prefer, using a technique called "reinforcement learning from human feedback" (RLHF).

This means that AI chatbots clearly operate based on both explicit and implicit objectives. Their training, system prompts, and feedback mechanisms shape their responses, so chatbots meet this part of the AI Act’s definition as well.

Can AI chatbots' outputs influence physical or virtual environments?

Here AI chatbots differ from many other AI systems in that they run in "copilot mode": a human constantly supervises the outputs, so the only way for those outputs to leave the chatbot and affect the outside virtual or physical world is through the human user. The definition, however, only requires that outputs can influence an environment, and chatbot outputs do exactly that whenever a user pastes generated text into an email, a report, or a codebase. Yes, people are already integrating LLMs directly into other IT systems, but such usage is out of scope for this article, as it may make your company responsible for much more than just the AI Act's AI Literacy requirement.

So AI chatbots used in a business, even in copilot mode, seem clearly to be "AI systems." But I, too, shared your potential skepticism. Isn't ChatGPT (or Le Chat, Gemini, or Perplexity) becoming as widespread as Google? In fact, Google, Bing and DuckDuckGo all give you the option of getting ChatGPT-like answers rather than the usual list of relevant websites.

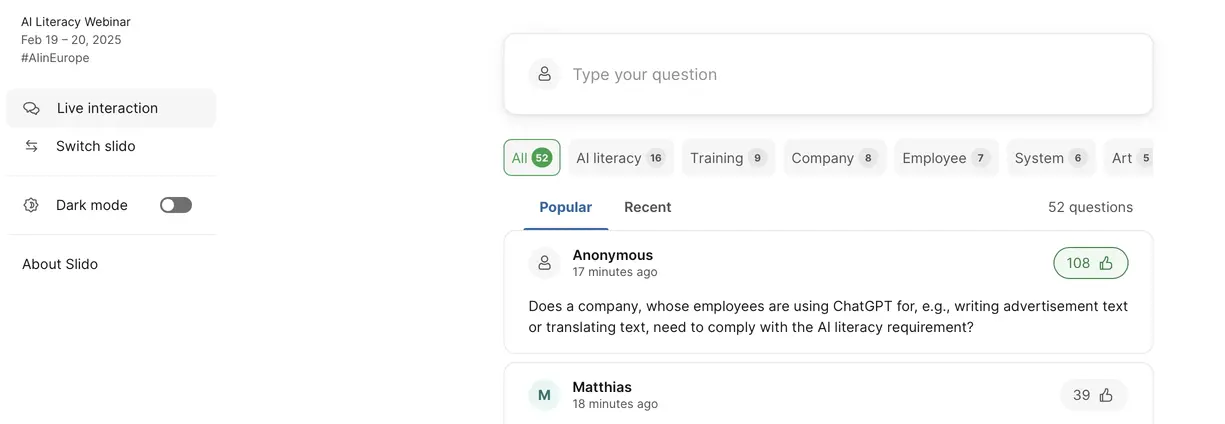

I can share a direct answer to this question, straight from the EU's AI Office. During a webinar on the AI Literacy requirement, the most upvoted question was precisely ours:

The answer from Irina Orssich, Head of Sector AI Policy at the European Commission?

Absolutely.

There we have it. Both a close reading of the AI Act and a direct answer from the Head of Sector AI Policy at the European Commission confirm that, as of February 2nd, 2025:

Using ChatGPT or any other AI chatbot for work purposes means your company is required to satisfy the AI Act’s AI Literacy requirement.

But Ms. Orssich didn't stop there, and neither should businesses. The AI Literacy requirement does indeed represent a "stick," but it comes with "carrots" (if the "carrot-and-stick" metaphor isn't familiar to you and you dislike carrots, then imagine you are a rabbit instead).

In our next article, we’ll guide you and your company step by step through the AI Literacy requirement, ensuring AI compliance while strengthening corporate AI responsibility. This will help you reap even more benefits from AI chatbots while better managing the associated risks, such as data leaks and unreliable answers.

But if you're tired of reading, we already deliver AI Literacy Training for EU AI Act Compliance that does exactly what the EU AI Act demands: clear, role-specific, and tailored to your tools and workflows. Skip the theory and get your team compliant today.

Article Series "EU AI Act Explained by Paul Larsen"

- What is the EU AI Act, and why does it matter?

- When does the EU AI Act come into force, and what does this mean for your business?

- My company is using ChatGPT. Does the AI Act Literacy requirement apply to us?

- The carrot and stick of the EU AI Act's literacy requirements: Benefits, Compliance, and Risks