When does the EU AI Act come into force, and what does this mean for your business?

The EU's AI Act entered into force on August 1st, 2024, but its first obligations, covering prohibited uses of AI and AI literacy for in-scope businesses, only come into effect on February 2nd, 2025.

In this article, we'll help you figure out whether and how your business is in scope for the AI Act. By the end, you'll understand how the AI Act is relevant for your business, and what you need to do, and by when, to be compliant. The penalties for non-compliance can be significant: for the most serious violations, fines can reach 35 million EUR or 7% of a business's global annual revenue, whichever is larger.
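The maximum fine is simple arithmetic: the larger of a percentage of revenue and a fixed amount. The sketch below assumes the 7% / 35 million EUR cap for the most serious violations and ignores the separate rule for SMEs, where the lower of the two amounts applies. The function name is purely illustrative.

```python
def max_fine_eur(global_annual_revenue_eur: int) -> int:
    """Upper bound on the fine for the most serious violations (illustrative).

    Returns whichever is larger: 7% of global annual revenue or 35 million EUR.
    Integer arithmetic avoids floating-point rounding on large amounts.
    """
    percentage_cap = global_annual_revenue_eur * 7 // 100
    fixed_cap = 35_000_000
    return max(percentage_cap, fixed_cap)


for revenue in (100_000_000, 500_000_000, 2_000_000_000):
    print(f"revenue {revenue:>13,} EUR -> max fine {max_fine_eur(revenue):>11,} EUR")
```

Since 35 million EUR is 7% of 500 million EUR, the percentage only becomes the binding cap for businesses with more than 500 million EUR in global annual revenue.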

What is AI?

I've argued in workshops that artificial intelligence isn't one thing; it's many things. The AI Act takes the same approach, referring to AI as a "family of technologies."

Still, we need a definition of what's being regulated, which isn't AI in general, but "AI systems." Article 3(1) defines an AI system as

a machine-based system that is designed to operate with varying levels of autonomy and that may exhibit adaptiveness after deployment, and that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments.

Let's break down its key terms one by one (autonomy, inferring outputs, and being machine-based), contrasting them along the way with normal, non-AI computer systems.

The autonomy characteristic by itself does not distinguish AI from other software. Having computers perform tasks without human intervention is the reason businesses have invested in software and hardware for decades. Nevertheless, AI systems are capable of higher degrees of autonomy than regular software because they "infer" outputs, the second key term in the definition above.

The mention of AI systems "inferring" outputs from inputs is a key characteristic separating AI from normal software. Normal software can also be extremely complicated, but unlike AI, each operation can be broken into explicit steps of input and output, with explicit rules for how to create output from input. As a consequence, AI systems can map a wider variety of inputs to what they consider the correct output than normal software can.
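To make the difference between explicit rules and inference concrete, here is a toy spam filter written two ways. It illustrates the intuition only and is not a legal test of what counts as an AI system; all function names and the training data are made up. The first function applies a rule a person wrote; the second derives word scores from labelled examples and infers its output from them. The Act's recitals draw a similar line, stating that systems based on rules defined solely by natural persons to automatically execute operations are not meant to be covered.

```python
from collections import Counter


def rule_based_is_spam(email: str) -> bool:
    # Explicit, hand-written rule: every step from input to output is spelled out.
    return "free money" in email.lower()


def train_word_scores(examples: list[tuple[str, bool]]) -> dict[str, int]:
    # No rule is written by hand: each word's score is derived from labelled examples
    # (+1 for every spam email it appears in, -1 for every non-spam email).
    scores: Counter[str] = Counter()
    for text, is_spam in examples:
        for word in set(text.lower().split()):
            scores[word] += 1 if is_spam else -1
    return dict(scores)


def learned_is_spam(email: str, scores: dict[str, int]) -> bool:
    # The decision is inferred from the learned scores, so it can flag emails
    # that match no hand-written rule but share words with past spam.
    return sum(scores.get(word, 0) for word in set(email.lower().split())) > 0


training_data = [
    ("claim your prize now", True),
    ("win a prize today", True),
    ("meeting notes for today", False),
    ("lunch meeting tomorrow", False),
]
scores = train_word_scores(training_data)
new_email = "Claim your prize"
print(rule_based_is_spam(new_email), learned_is_spam(new_email, scores))
```

On the new email, the hand-written rule misses it, while the learned scores flag it because "claim", "your" and "prize" all appeared in past spam.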

If you're a sci-fi fan, you may appreciate the explicit wording "machine-based system," which rules out cases like the mutated humans ("precogs") in Minority Report who predict crimes before they are committed. By the way, if this task were carried out by a machine, it would be classified as "prohibited" AI, as we'll see below.

What businesses are in scope for the AI Act?

Let's start with which businesses are excluded. If your business is neither providing nor using AI in any sense, then you can safely ignore the AI Act. What's less safe, however, is being certain that your business isn't providing or using AI, as the broad definition of AI systems in the previous section shows.

An easier exclusion is natural persons (as opposed to legal persons such as corporations) who use AI in a non-professional capacity. So there's no need to register your Christmas-card-writing OpenAI Assistant or your self-developed AI bot that reminds you to do yoga with any regulatory agency.

Another easy one is pure research: AI developed solely for scientific research and development is out of scope for the AI Act. With some caveats, this exclusion also holds for businesses developing AI before they place it on the external market or put it into internal service; testing in real-world conditions, for example, is not covered by the exclusion.

The final excluded group was the source of significant debate and changes between the first draft and the final text: AI systems used exclusively for military, defense or national security purposes.

These exclusions show how the AI Act aims to follow a "risk-based" approach that enables (and in some ways encourages) innovation while managing the downside when AI goes wrong. Pure research and personal use of AI tools generally don't pose a risk to health, safety or fundamental rights.

Let's now set out what types of businesses or government agencies are potentially in scope.

Providers of AI are companies that develop AI systems and make them available. It doesn't matter whether the AI system provided is used internally within a company or by external customers.

Deployers of AI systems are companies that use AI within their business's processes or products. If a deployer substantially modifies the way the provided AI system functions, it can become responsible for meeting the provider requirements for those changes.

In this article, we'll skip over the other types of business activity in scope for the AI Act, namely distributing and importing AI systems.

Before getting into the details of which AI systems are regulated and how, what role does geography play? With some caveats, your business is geographically in scope for the AI Act if it is based in the EU, or if your AI systems or their outputs are used in the EU.
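The scope questions from this section can be sketched as a first-pass screen. The `Organisation` fields and `possibly_in_scope` function are hypothetical simplifications of the exclusions discussed above; every exclusion has caveats this sketch ignores, so treat a `True` result as "look closer", not as a legal conclusion.

```python
from dataclasses import dataclass


@dataclass
class Organisation:
    # Hypothetical yes/no answers to the questions raised in this section.
    provides_or_uses_ai: bool
    personal_use_only: bool         # natural person using AI in a non-professional capacity
    research_only_pre_market: bool  # R&D before the AI is placed on the market or put into service
    exclusively_military: bool      # military, defense or national security use only
    eu_nexus: bool                  # based in the EU, or AI systems/outputs used in the EU


def possibly_in_scope(org: Organisation) -> bool:
    """Rough first-pass screen; every exclusion has caveats this sketch ignores."""
    if not org.provides_or_uses_ai:
        return False
    if org.personal_use_only:
        return False
    if org.research_only_pre_market or org.exclusively_military:
        return False
    return org.eu_nexus


# A hypothetical non-EU company whose chatbot is used by customers in the EU.
chatbot_vendor = Organisation(
    provides_or_uses_ai=True,
    personal_use_only=False,
    research_only_pre_market=False,
    exclusively_military=False,
    eu_nexus=True,
)
print(possibly_in_scope(chatbot_vendor))
```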

My business is in scope for the AI Act. Now what?

The AI Act entered into force on August 1st, 2024, but its requirements for businesses and government agencies go into effect in batches. The next batch, on February 2nd, 2025, covers prohibited AI systems and the rather broad AI Literacy requirement.

The AI Literacy requirement casts a wide net

Unlike most of the AI Act, the AI Literacy requirement applies to all businesses providing or deploying AI. It's tempting to think that merely using an AI service doesn't make your company a "deployer" of AI, yet the definition in Article 3(4) leaves little doubt that any use of AI in a professional capacity makes your business a "deployer," and hence required by Article 4 to provide some form of AI Literacy training to employees.

The AI Act does not specify the precise form of training. Instead, the guidance is to ensure, to the best of your company's ability, that the training fits the context in which you use AI and your employees' backgrounds.

The AI Act's risk-based approach

To figure out which actions beyond AI Literacy your business needs to take, and when, it helps to first understand the "risk-based" approach mentioned above.

The risk classifications of the AI Act fall into two main areas, which we can loosely call the "what" risks and the "how" risks. The "what" risks relate to the task or tasks the AI system is set to do, while the "how" risks concern how the AI system interacts with its end users (transparency risk) or how general the AI's capabilities are (systemic, general purpose AI risk).

The "what" risk buckets of the EU AI Act

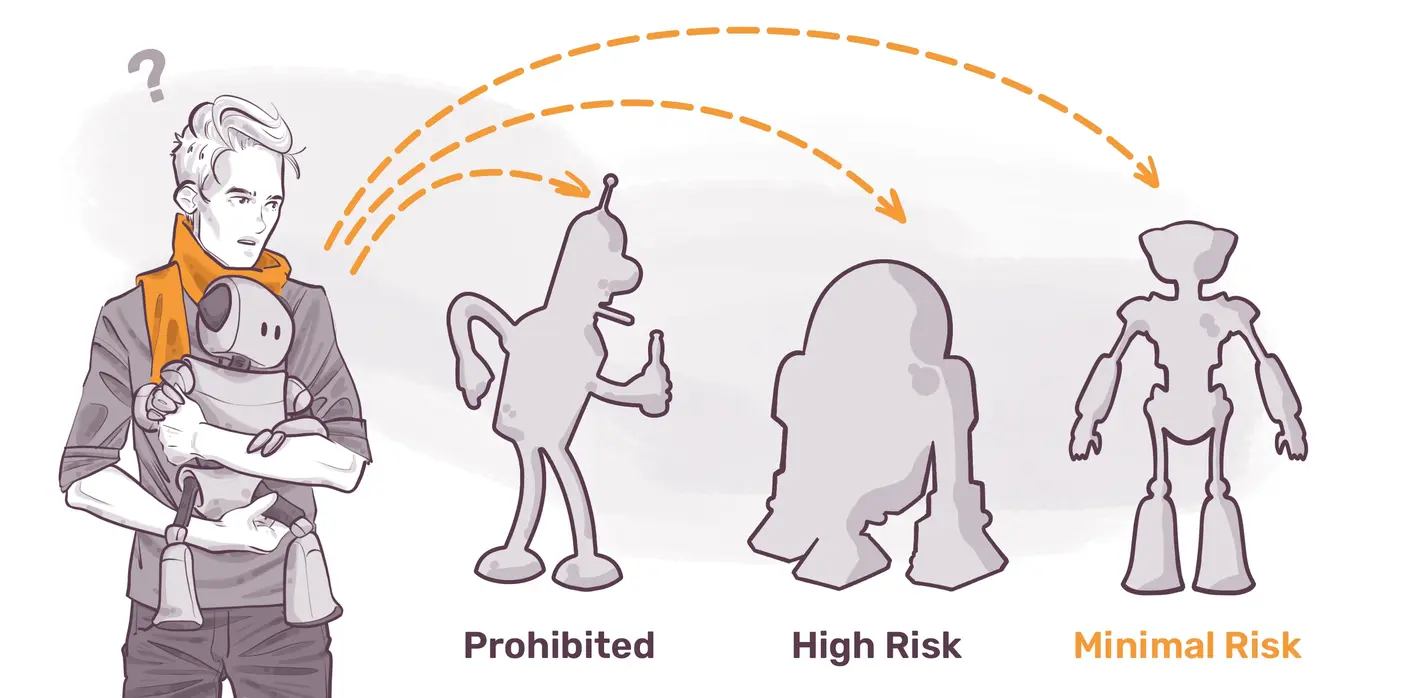

The "what" risk categories are split according to how bad it would be for health, safety or fundamental rights if an AI system went wrong. The categories are "prohibited", "high-risk" and "minimal-risk".

We'll give an overview of each of these "what" risk categories below, but bear in mind that the process of determining which AI system fits into which risk bucket can be challenging.

Prohibited AI systems are ones that are deemed incompatible with the health, safety or fundamental rights of EU residents. Examples include

social scoring outside of the context in which the data was gathered, like Kreditech using Facebook feeds to grant or deny bank loans,

manipulative AI, such as generating fake reviews on Amazon or employing AI to create subliminal messages (you'll have to find links yourself, as I don't want to advertise such services here),

predictive crime AI, like Palantir's predictive policing AI system used in New Orleans, and

real-time remote biometric identification of people in publicly accessible spaces for law enforcement, except in certain narrowly defined situations.

High-risk AI systems are those doing jobs that have recognized potential both to improve life for EU residents and to pose risks to health, safety or fundamental rights. Examples of high-risk AI include

AI bank loan decisions, as access to finance is a protected right,

AI decisions on access to health or life insurance,

using AI for employment decisions such as hiring or promotions, and

AI used as a safety component in products or critical infrastructure.

The final category based on what the AI system is doing is minimal-risk, which means that the potential downsides of using the AI system are sufficiently small that regulating these would stifle innovation without providing sufficient additional protection to EU residents.

Examples of minimal-risk AI systems are

email spam filters,

fraud detection, and

narrow, procedural AI applications, like extracting structured data from free text.

Why does this "what" risk classification matter? Because the actions your business must take differ depending on whether your AI system is prohibited, high-risk or minimal-risk. High-risk AI systems carry the majority of required actions. Prohibited AI systems must be taken off the market by February 2nd, 2025, and minimal-risk AI systems accordingly require minimal business action.

The "how" risk buckets of AI Act: transparency and general-purpose AI

The "how" risks fall into two groups: transparency risk and systemic risk from general purpose AI, of which generative AI (GenAI) is the prime example.

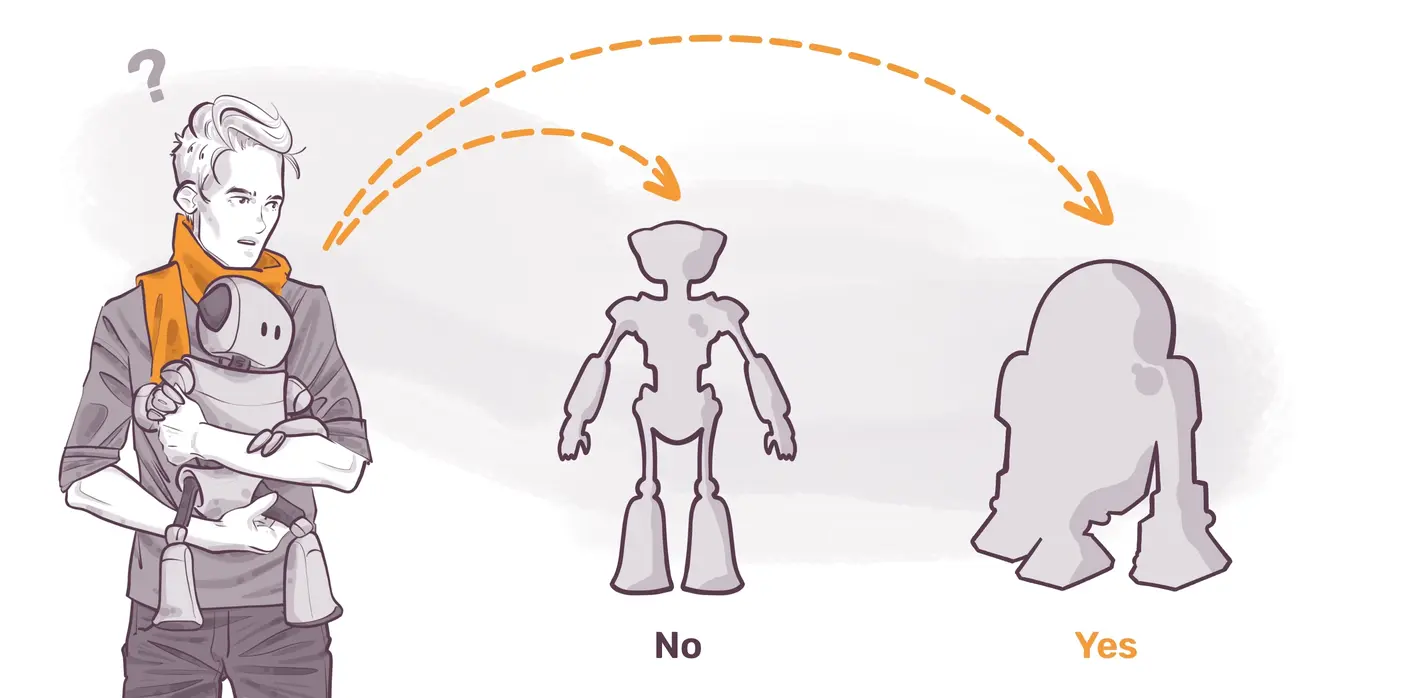

The transparency "how" risk is perhaps easier to understand. Independently of what the AI system is tasked to do, is the mode of interaction with end users such that they could mistake AI-produced output for human output? If users could reasonably mistake your business's AI output for human-created output, then this AI system is subject to the transparency requirements.

If your AI system does indeed have transparency risk, then the AI Act sets out measures for you to take by August 2nd, 2026 to ensure compliance.

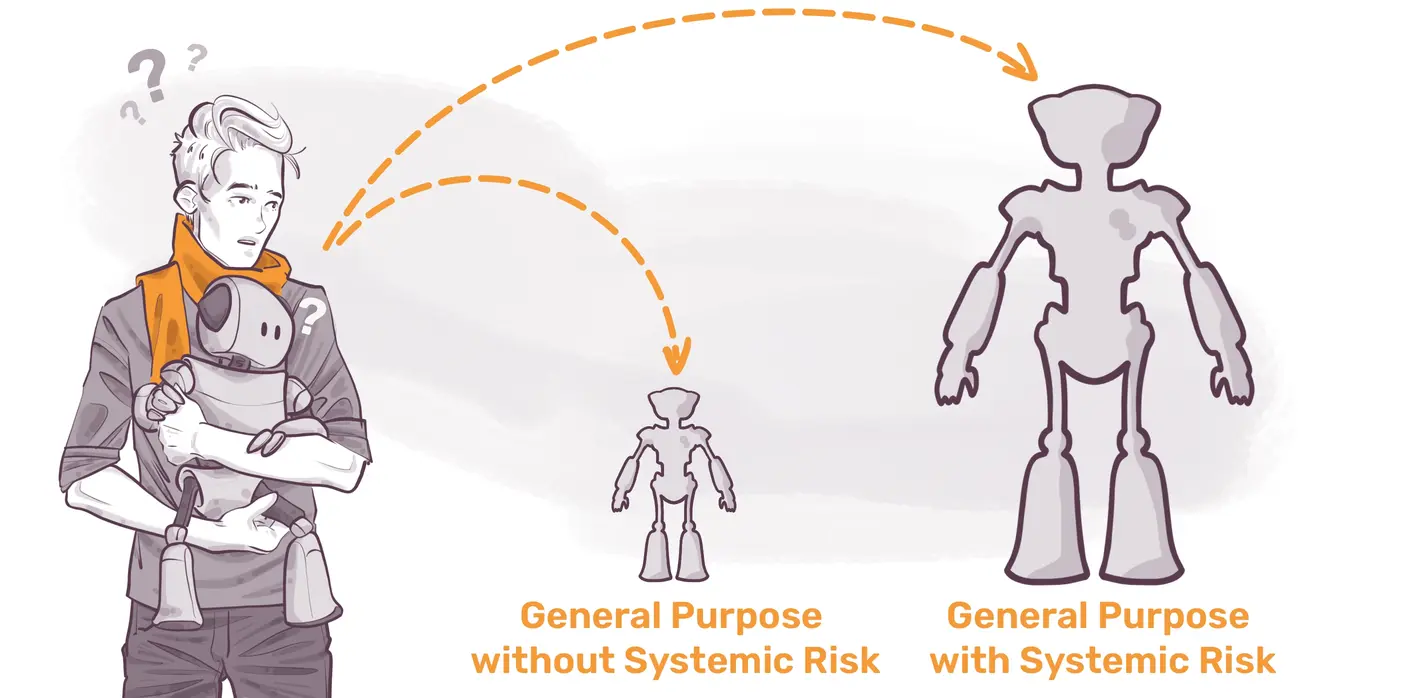

Provisions on general purpose AI were missing from the draft of the AI Act published in 2021, but after the viral spread of ChatGPT and its generative AI cousins in late 2022, this flavor of AI now has its own set of requirements.

The novelty compared to other AI is that general purpose AI can solve numerous different tasks, often better than human level, without requiring task-specific adaptations such as fine-tuning on task-specific data. This power could, however, present a systemic risk, as the failure modes of a single model can propagate to a wide variety of domains. Maybe it's unimportant that one of the best large language models in 2024 couldn't count the number of "r"s in the word "strawberry", but it's not hard to imagine how critical errors could cascade across multiple systems.

It's an area of active research to better understand how current generative AI models can multitask so well, so not surprisingly it is difficult to determine whether such a model poses systemic risk. The AI Act's main approach is to use the cumulative computation needed to train the model as a proxy. If training required more than 10^25 floating point operations (FLOPs), then by Article 51(2) the model is presumed to have high-impact capabilities, and hence systemic risk.
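To get a feel for the 10^25 threshold, a common back-of-the-envelope estimate for training dense transformer models is about 6 FLOPs per parameter per training token. That rule of thumb comes from the scaling-law literature and is an assumption here, not a method prescribed by the AI Act; the model sizes below are hypothetical.

```python
# Cumulative training compute above which systemic risk is presumed.
SYSTEMIC_RISK_THRESHOLD_FLOP = 1e25


def estimate_training_flop(n_parameters: float, n_training_tokens: float) -> float:
    # Rule of thumb for dense transformers: ~6 FLOPs per parameter per token
    # (roughly 2 for the forward pass and 4 for the backward pass).
    # An approximation only; the Act does not prescribe how to estimate compute.
    return 6 * n_parameters * n_training_tokens


def presumed_systemic_risk(training_flop: float) -> bool:
    # The presumption applies when training compute is greater than 10^25 FLOPs.
    return training_flop > SYSTEMIC_RISK_THRESHOLD_FLOP


# Hypothetical models: (parameters, training tokens)
for params, tokens in [(7e10, 1.5e13), (4e11, 1.5e13)]:
    flop = estimate_training_flop(params, tokens)
    print(f"{params:.1e} params, {tokens:.1e} tokens -> {flop:.1e} FLOPs, "
          f"systemic risk presumed: {presumed_systemic_risk(flop)}")
```

Under this estimate, a 70-billion-parameter model trained on 15 trillion tokens lands at about 6.3 × 10^24 FLOPs, just under the threshold, while a 400-billion-parameter model on the same data lands at about 3.6 × 10^25, above it.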

General purpose AI models with and without systemic risk both have compliance actions to take starting August 2nd, 2025, but the greater burden is on those with systemic risk.
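Because the "what" and "how" risks are independent, one AI system can carry several of them at once. The sketch below models that as a small data structure; `RiskProfile` and `requirement_areas` are hypothetical names, and the output lists only the broad requirement areas described in this article, not a legal checklist.

```python
from dataclasses import dataclass
from enum import Enum


class WhatRisk(Enum):
    PROHIBITED = "prohibited"
    HIGH = "high-risk"
    MINIMAL = "minimal-risk"


@dataclass
class RiskProfile:
    # One "what" bucket per AI system; the "how" flags are independent of it.
    what: WhatRisk
    transparency_risk: bool      # users could mistake its output for human-created output
    provides_gpai_model: bool    # the business provides a general purpose AI model
    systemic_risk: bool = False  # e.g. training compute above 10^25 FLOPs


def requirement_areas(profile: RiskProfile) -> list[str]:
    """Broad areas of obligations as described in this article (illustrative)."""
    if profile.what is WhatRisk.PROHIBITED:
        return ["take the system off the EU market"]
    areas = ["AI Literacy"]  # applies to all providers and deployers
    if profile.what is WhatRisk.HIGH:
        areas.append("high-risk requirements")
    if profile.transparency_risk:
        areas.append("transparency requirements")
    if profile.provides_gpai_model:
        areas.append("general purpose AI requirements")
        if profile.systemic_risk:
            areas.append("additional systemic-risk requirements")
    return areas


# Hypothetical: a chatbot that talks to job applicants and screens their CVs.
cv_screening_bot = RiskProfile(WhatRisk.HIGH, transparency_risk=True, provides_gpai_model=False)
print(requirement_areas(cv_screening_bot))
```

Keeping the "how" risks as separate flags, rather than extra buckets, mirrors the point that they apply regardless of what the AI system is tasked to do.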

What your business needs to do when: a summary

Now that you have an overview of the most important definitions and concepts in the EU's AI Act, let's summarize what your business has to do, and when:

February 2nd, 2025: The rather broad AI Literacy and prohibited AI systems requirements go into effect.

August 2nd, 2025: Requirements for general purpose AI go into effect.

August 2nd, 2026: All remaining requirements, including those for high-risk (with one exception, below) and transparency-risk AI systems, go into effect.

August 2nd, 2027: Requirements for AI systems that are high-risk because they are embedded in already regulated products go into effect.
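If you track several AI systems, it can help to encode these milestones once and look up what applies on a given date. A minimal sketch, with dates taken from Article 113 of the AI Act; the `MILESTONES` list and `requirements_in_effect` function are hypothetical names.

```python
from datetime import date

# Application dates of each batch of requirements (Article 113 of the AI Act).
MILESTONES: list[tuple[date, str]] = [
    (date(2025, 2, 2), "AI Literacy and prohibited AI systems"),
    (date(2025, 8, 2), "general purpose AI"),
    (date(2026, 8, 2), "remaining requirements, incl. high-risk and transparency-risk AI"),
    (date(2027, 8, 2), "high-risk AI embedded in already regulated products"),
]


def requirements_in_effect(on: date) -> list[str]:
    """Return the requirement batches that already apply on the given date."""
    return [label for start, label in MILESTONES if start <= on]


print(requirements_in_effect(date(2025, 9, 1)))
```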

Dr. Paul Larsen, the Head of AI + Data at mkdev, is a data scientist with more than 10 years of experience in large corporates (Allianz Insurance, Deutsche Bank) and startups (Translucent). He is the creator of trainings for industry and academia on risk management and AI, and has worked directly with national regulators.

We are covering the entire scope of the AI Act in a series of articles and training sessions, and can make sure your AI systems are compliant and future-proof. Book a call with our Head of AI today to get a tailored compliance assessment and stay ahead of the AI Act.

Article Series "EU AI Act Explained by Paul Larsen"

- What is the EU AI Act, and why does it matter?

- When does the EU AI Act come into force, and what does this mean for your business?

- My company is using ChatGPT. Does the AI Act Literacy requirement apply to us?

- The carrot and stick of the EU AI Act's literacy requirements: Benefits, Compliance, and Risks