The tool that really runs your containers: deep dive into runc and OCI specifications

There are many ways to run containers, all with good use cases.

The best known one is, no doubt, Docker, which sky-rocketed container adoption. There are also Podman and Buildah, which I explained in a dedicated Dockerless series, showed in the Dockerless video, and now teach in the Dockerless course.


Once you are serious about running your containers in production, you have multiple other choices, containerd and CRI-O being two examples. These tools are not the most developer-friendly ones. Instead, they focus on other areas, like being the best container manager for Kubernetes, or simply being a fully featured runtime that can be further adapted to a specific platform.

It's easy to get lost with so many tools solving seemingly similar problems. The naming we have for these tools doesn't make it any better. What is a container runtime? What is a container manager? What are a container orchestrator and a container engine? Does a container orchestrator need a container manager? Where is the line between a container runtime and a container manager?

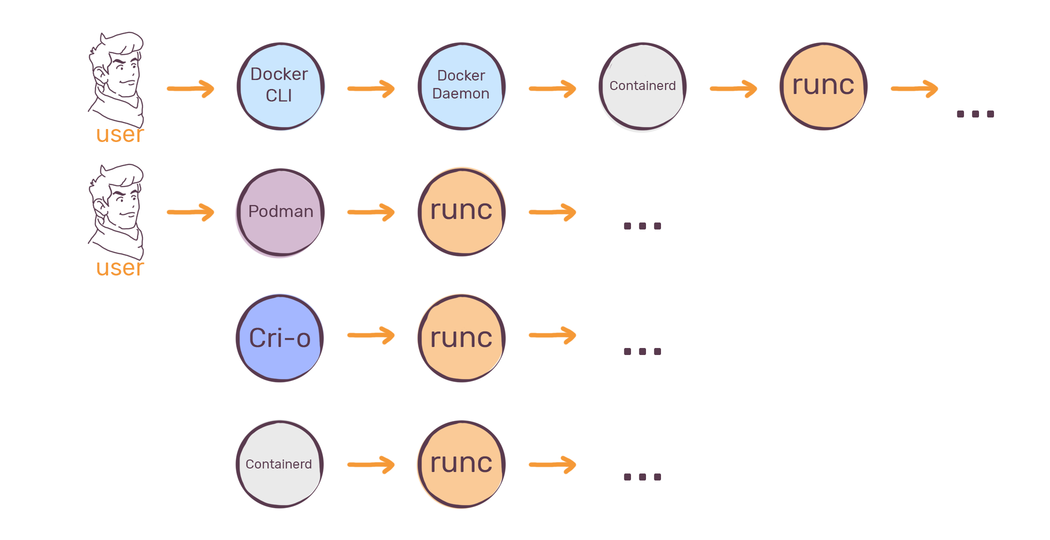

To clear things up a little, let's talk about the tool that is at the core of Docker, Podman, CRI-O and containerd: runc.

The original container runtime

If we were to draw the chain from the end user down to the actual container process, it could look like this:

As we see, regardless of whether you are using Docker, Podman or CRI-O, you are, most likely, using runc. Why the "most likely" part? Let's check man runc:

runc is a command line client for running applications packaged according to the Open Container Initiative (OCI) format and is a compliant implementation of the Open Container Initiative specification

As I explained in Dockerless, part 1: Which tools to replace Docker with and why, there is the Open Container Initiative (OCI), with specifications for how to run containers and manage container images. runc is compliant with these specifications, but it is not the only OCI-compliant runtime. You can even run OCI-compliant virtual machines.

But the topic for today is runc. Let's see what it is capable of.

Using runc for fun and learning

There is no reason to use runc daily. It's one of those small internal utilities that are not meant to be used directly. But we are going to use it anyway, just to understand a bit better how containers work.

I am running all commands in a clean CentOS 8 installation:

```
# cat /etc/redhat-release
CentOS Linux release 8.1.1911 (Core)
# uname -r
4.18.0-147.el8.x86_64
```

To install runc, just run yum install runc -y.

runc doesn't have a concept of "images" like Podman or Docker do. You cannot just execute runc run nginx:latest. Instead, runc expects you to provide an "OCI bundle", which is basically a root filesystem and a config.json file.

runc is OCI-compliant (to be precise, it implements the runtime-spec), which means it can take an OCI bundle and run a container out of it. It is worth repeating that these bundles are not "container images"; they are much simpler. Layers, tags, container registries and repositories are not part of the OCI bundle, or even of the runtime-spec. A separate OCI specification, the image-spec, defines images.

A filesystem bundle is what you get when you download a container image and unpack it. So it goes like this:

OCI Image -> OCI Runtime Bundle -> OCI Runtime

And in our example it means:

Container image -> Root filesystem and config.json -> runc
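Because a runtime bundle is nothing more than a directory, its basic shape can be checked before handing it to runc. Here is a minimal shell sketch; check_bundle is a hypothetical helper, and it assumes the bundle uses the default root.path value rootfs (a config.json can point elsewhere, which this check ignores).

```shell
# Hypothetical helper: sanity-check the layout of an OCI runtime bundle.
# Assumes root.path in config.json is the default "rootfs"; a bundle that
# points root.path elsewhere will be reported as incomplete.
check_bundle() {
    local dir="${1:-.}"
    if [ ! -f "$dir/config.json" ]; then
        echo "check_bundle: $dir/config.json is missing" >&2
        return 1
    fi
    if [ ! -d "$dir/rootfs" ]; then
        echo "check_bundle: $dir/rootfs is missing" >&2
        return 1
    fi
    echo "ok: $dir looks like an OCI bundle"
}
```

Running check_bundle my-bundle right after runc spec reports the missing rootfs, the same problem runc complains about when you try to run such a bundle.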

Let's build an application bundle. We can start with the config.json file, as this part is very easy:

```shell
mkdir my-bundle
cd my-bundle
runc spec
```

runc spec generates a template config.json. It already has a "process" section that specifies which process to run inside the container, even with a couple of environment variables:

```json
{
	"ociVersion": "1.0.1-dev",
	"process": {
		"terminal": true,
		"user": {
			"uid": 0,
			"gid": 0
		},
		"args": [
			"sh"
		],
		"env": [
			"PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin",
			"TERM=xterm"
		],
...
```

It also defines where to look for the root filesystem...

```json
...
	"root": {
		"path": "rootfs",
		"readonly": true
	},
...
```

...and many other things, including default mounts inside the container, capabilities, hostname, etc. If you inspect this file, you will notice that many sections are platform-agnostic, while the ones specific to a concrete OS are nested inside the appropriate section. For example, there is a "linux" section with Linux-specific options.

If we try to run this bundle, we will get an error:

```
# runc run test
rootfs (/root/my-bundle/rootfs) does not exist
```

If we simply create the folder, we will get another error:

```
# mkdir rootfs
# runc run test
container_linux.go:345: starting container process caused "exec: \"sh\": executable file not found in $PATH"
```

This makes total sense: an empty folder is not a real root filesystem, so our container has no chance of doing anything useful. We need to create a real Linux root filesystem.
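The second error is easier to anticipate if you look inside the rootfs yourself. The sketch below is a hypothetical pre-flight check, not something runc provides: it searches the default PATH that runc spec writes into process.env for the command. It is a simplification, since absolute symlinks inside the rootfs would be resolved against the host filesystem rather than the rootfs.

```shell
# Hypothetical pre-flight check: look for CMD inside ROOTFS using the default
# PATH that `runc spec` puts into process.env. Simplification: absolute
# symlinks inside the rootfs are resolved against the host, not the rootfs.
find_in_rootfs() {
    local rootfs="$1" cmd="$2" dir
    for dir in /usr/local/sbin /usr/local/bin /usr/sbin /usr/bin /sbin /bin; do
        if [ -f "$rootfs$dir/$cmd" ] && [ -x "$rootfs$dir/$cmd" ]; then
            echo "$dir/$cmd"
            return 0
        fi
    done
    echo "find_in_rootfs: $cmd not found under $rootfs" >&2
    return 1
}
```

With the empty rootfs from above, find_in_rootfs rootfs sh fails for the same reason runc did.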

How to use skopeo and umoci to get an OCI application bundle

Creating a root filesystem from scratch is a rather cumbersome experience, so instead let's use one of the existing minimal images: busybox.

To pull the image, we first need to install skopeo. We could also use Buildah (see Dockerless, part 2: How to build container image for Rails application without Docker and Dockerfile for a gentle introduction to Buildah), but it has way too many features for our needs. Buildah is focused on building images and even has basic functionality to run containers. As we are going as low level as possible today, we will use skopeo:

skopeo is a command line utility that performs various operations on container images and image repositories.

skopeo can copy images between different sources and destinations, inspect images and even delete them. skopeo cannot build images; it won't know what to do with your Containerfile. It's perfect for CI/CD pipelines that automate container image promotion.

```shell
yum install skopeo -y
```

Then copy the busybox image:

```shell
skopeo copy docker://busybox:latest oci:busybox:latest
```

There is no "pull": we need to tell skopeo both the source and the destination of the image. skopeo supports almost a dozen different types of sources and destinations. Note that this command creates a new busybox folder, inside which you will find all of the OCI image files, including the image layers, the manifest, etc.

Do not mix up the image manifest and the application runtime bundle manifest; they are not the same.

What we copied is an OCI image, but as we already know, runc needs an OCI runtime bundle. We need a tool that converts an image to a bundle. This tool is umoci, an openSUSE utility whose sole purpose is manipulating OCI images. To install it, grab the latest release from GitHub Releases and make it available on your PATH. At the time of writing, the latest version is 0.4.5. umoci unpack takes an OCI image and makes a bundle out of it:

```shell
umoci unpack --image busybox:latest bundle
```

Let's see what's inside the bundle folder:

```
# ls bundle
config.json
rootfs
sha256_73c6c5e21d7d3467437633012becf19e632b2589234d7c6d0560083e1c70cd23.mtree
umoci.json
```

We already have a config.json, so let's just copy the rootfs directory into the previously created my-bundle directory. If you are curious, this is the content of rootfs:

```
bin dev etc home root tmp usr var
```

If it looks like a basic Linux root filesystem, then you are right.
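The image-to-bundle steps can be wrapped into a single helper. This is a sketch under a few assumptions: build_bundle is a hypothetical function, it runs as root like the rest of this article (unprivileged users need umoci unpack --rootless), and the image name doubles as the name of the local OCI layout directory, exactly as in the skopeo copy command above.

```shell
# Hypothetical helper wrapping the skopeo and umoci steps shown above.
# Usage: build_bundle NAME TAG DEST
# NAME is both the image reference used with docker:// and the local OCI
# layout directory, as in `skopeo copy docker://busybox:latest oci:busybox:latest`.
# Run as root; unprivileged users need `umoci unpack --rootless`.
build_bundle() {
    local name="$1" tag="$2" dest="$3" tool
    for tool in skopeo umoci; do
        if ! command -v "$tool" >/dev/null 2>&1; then
            echo "build_bundle: $tool is not installed or not on PATH" >&2
            return 1
        fi
    done
    # Copy the image from the registry into a local OCI image layout.
    skopeo copy "docker://$name:$tag" "oci:$name:$tag" || return 1
    # Turn the OCI image into a runtime bundle: config.json plus rootfs.
    umoci unpack --image "$name:$tag" "$dest"
}
```

build_bundle busybox latest bundle reproduces the two commands above; you would still copy bundle/rootfs into my-bundle afterwards.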

Running OCI Application bundle with runc

We are ready to run our application bundle as a container named test:

```shell
runc run test
```

What happens next is that we end up in a shell inside a newly created container!

```
# runc run test
/ # ls
bin dev etc home proc root sys tmp usr var
```

We ran the previous container in the default foreground mode. In this mode, the container process becomes a child of a long-running runc process:

```
6801 997 \_ sshd: root [priv]
6805 6801 \_ sshd: root@pts/1
6806 6805 \_ -bash
6825 6806 \_ zsh
7342 6825 \_ runc run test
7360 7342 | \_ runc run test
```

If I terminate my SSH session to this server, the runc process terminates as well, which in the end kills the container process.

Let's inspect this container a bit closer by replacing the command with sleep infinite and setting the terminal option to "false" inside config.json. runc does not provide a whole lot of command line arguments. It has commands like create, start, kill and run, but the configuration of a container always comes from the file, not from the command line:

```json
{
	"ociVersion": "1.0.1-dev",
	"process": {
		"terminal": false,
		"user": {
			"uid": 0,
			"gid": 0
		},
		"args": [
			"sleep",
			"infinite"
		],
...
```

This time, let's run the container in detached mode:

```shell
runc run --detach test
```

We can see running containers with runc list:

```
ID PID STATUS BUNDLE CREATED OWNER
test 4258 running /root/my-bundle 2020-04-23T20:29:39.371137097Z root
```

In the case of Docker, there is a central daemon that knows everything about containers. How is runc able to find our containers? It turns out it simply keeps the state on the filesystem, by default in /run/runc/CONTAINER_ID/state.json:

```
# cat /run/runc/test/state.json
{"id":"test","init_process_pid":4258,"init_process_start":9561183,"created":"2020-04-23T20:29:39.371137097Z","config":{"no_pivot_root":false,"parent_death_signal":0,"rootfs":"/root/my-bundle/rootfs","readonlyfs":true,"rootPropagation":0,"mounts"....
```
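Since the state is just a file, a script can read it directly. A rough sketch, assuming the default state root /run/runc (used when runc runs as root) and the compact single-line JSON shown above; container_pid is a hypothetical helper, and runc state test, which prints the container state as JSON, or a real JSON parser such as jq are sturdier choices.

```shell
# Print the host PID of a container's init process from runc's state file.
# Assumptions: the default state root /run/runc used when runc runs as root
# (pass another directory if you use `runc --root DIR`), and the compact
# single-line state.json shown above. For anything serious, prefer
# `runc state ID` or a real JSON parser such as jq.
container_pid() {
    local id="$1" root="${2:-/run/runc}" state
    state="$root/$id/state.json"
    if [ ! -r "$state" ]; then
        echo "container_pid: no state file for $id under $root" >&2
        return 1
    fi
    grep -o '"init_process_pid":[0-9]*' "$state" | cut -d: -f2
}
```

For the container above, container_pid test should print 4258.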

Because we ran in detached mode, there is no relation between the original runc run command (that process no longer exists) and the container process. If we check the process table, we will see that the parent of the container process is PID 1:

```
# ps axfo pid,ppid,command
4258 1 sleep infinite
```

Detached mode is used by Docker, containerd, CRI-O and others. Its purpose is to simplify the integration between runc and full-featured container management tools. It is worth mentioning that runc itself is not a library of some sort; it's a CLI. When other tools use runc, they invoke the same runc commands that we just saw in action.

Read more about differences between foreground and detached modes in runc documentation.

While the PID of the container process on the host is 4258, inside the container the PID is shown as 1:

```
# runc exec test ps
PID USER TIME COMMAND
1 root 0:00 sleep infinite
13 root 0:00 ps
```
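The host kernel keeps track of both numbers. As a small sketch (ns_pids is a hypothetical helper), the NSpid field of /proc/PID/status, available since Linux 4.1, lists a process's PID in each PID namespace it belongs to, starting with the namespace of the /proc being read. Run on the host against PID 4258, it should print the host PID followed by 1.

```shell
# Print the PID of process $1 in every PID namespace it belongs to, using the
# NSpid field of /proc/PID/status (Linux 4.1+). The first number is the PID in
# the namespace of the /proc being read, the last one is the PID the process
# sees for itself.
ns_pids() {
    awk '/^NSpid:/ { $1 = ""; sub(/^ +/, ""); print }' "/proc/$1/status"
}
```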

This is thanks to Linux namespaces, one of the fundamental technologies behind what containers really are. We can list all current namespaces by executing lsns on the host system:

```
NS         TYPE NPROCS PID  USER COMMAND
4026532219 mnt  1      4258 root sleep infinite
4026532220 uts  1      4258 root sleep infinite
4026532221 ipc  1      4258 root sleep infinite
4026532222 pid  1      4258 root sleep infinite
4026532224 net  1      4258 root sleep infinite
```

runc took care of process, network, mount and other namespaces for our container process. Let me know in the comments section if you would like an extra article about Linux namespaces.
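The same information is exposed per process under /proc. Each /proc/PID/ns/TYPE entry is a symlink such as pid:[4026532222], where the number matches the NS column of lsns, so comparing two links tells you whether two processes share a namespace. A minimal sketch with a hypothetical same_ns helper; reading another user's links needs privileges, which is fine here since everything runs as root.

```shell
# Compare one namespace type (pid, net, mnt, uts, ipc, ...) of two processes.
# /proc/PID/ns/TYPE is a symlink like "pid:[4026532222]"; the number in
# brackets is the namespace ID that lsns prints in its NS column.
# Returns 0 if shared, 1 if different, 2 if a link cannot be read.
same_ns() {
    local type="$1" a b
    a=$(readlink "/proc/$2/ns/$type") || return 2
    b=$(readlink "/proc/$3/ns/$type") || return 2
    [ "$a" = "$b" ]
}
```

On the host, same_ns pid 1 4258 || echo "own PID namespace" would report that the container process has its own PID namespace.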

runc and Podman

One last thing we should verify is whether existing container management tools really use runc. I will show you the proof for Podman; you can check the same with Docker on your Linux machine:

```shell
yum install podman -y
podman run --name test-podman -d busybox sleep infinite
ps axfo pid,ppid,command | grep runc
```

The result looks similar to this, with unimportant details left out:

```
10830 1 /usr/bin/conmon --api-version 1 -s < ... > -r /usr/bin/runc -b /var/lib/containers/storage/overlay-containers < ... >
```

conmon is told to use runc as the runtime via the -r argument, with the application bundle specified by the -b argument. If we inspect the directory that is passed as the bundle, we will see a familiar config.json file that points to the root filesystem and sets up a ton of different options. And if we check the /run/runc directory, we will see the state for this container, just as if we had executed runc directly:

```
# ls /run/runc
55975a011fe5e7aaf20d7d6fc5e41cde608ff51c3df3fed0db0d023149f9308d test
```
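To see which runtime and bundle a given conmon process was started with, you can read its command line from /proc, where arguments are separated by NUL bytes. A minimal sketch; the function name is hypothetical, it only handles the short -r and -b flags as they appear in the ps output above, and it takes a file path so it can be pointed at /proc/10830/cmdline or a saved copy.

```shell
# Print the values that follow -r (runtime) and -b (bundle) in a NUL-separated
# command line such as /proc/PID/cmdline of a conmon process.
# Only the short -r/-b spellings seen in the ps output above are handled.
conmon_runtime_and_bundle() {
    tr '\0' '\n' < "$1" | awk '
        prev == "-r" { print "runtime: " $0 }
        prev == "-b" { print "bundle: " $0 }
        { prev = $0 }'
}
```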

Finally, runc list shows us the container that Podman started, because, in fact, it is runc that starts our containers:

```
# runc list
ID PID STATUS BUNDLE CREATED OWNER
55975a011fe5e7aaf20d7d6fc5e41cde608ff51c3df3fed0db0d023149f9308d 10842 running /var/lib/containers/storage/overlay-containers/55975a011fe5e7aaf20d7d6fc5e41cde608ff51c3df3fed0db0d023149f9308d/userdata 2020-04-23T21:08:49.016077961Z root
test 4258 running /root/my-bundle 2020-04-23T20:29:39.371137097Z root
```
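Because Podman-managed and hand-made containers share the same state directory, the BUNDLE column is a handy way to tell them apart. A sketch with a hypothetical helper that reads the default table output on stdin; it assumes BUNDLE is the fourth column and that bundle paths contain no spaces, so runc list --format json is the sturdier choice for real scripts.

```shell
# Print IDs from `runc list` table output whose BUNDLE column starts with the
# given prefix, e.g. to separate Podman-managed containers from hand-made ones.
# Assumes the default table format (BUNDLE is the 4th column) and bundle paths
# without spaces; `runc list --format json` is sturdier for scripts.
ids_with_bundle_prefix() {
    awk -v prefix="$1" 'NR > 1 && index($4, prefix) == 1 { print $1 }'
}
```

For example, runc list | ids_with_bundle_prefix /var/lib/containers/ would print only the Podman container's ID from the listing above.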

The shadow ruler of the container world

Podman, Docker and all the other tools, including most of the Kubernetes clusters out there, come down to a runc binary that starts the container processes.

In real work, you will almost never do the things I just showed you, unless you are developing your own container tools or contributing to existing ones. You don't have to assemble application bundles out of container images, and you will be much better off using Podman instead of runc directly.

But it is very helpful to know what happens behind the scenes and what is really involved in running a container. There are still many layers between the end user and the final container process, but once you understand the very last layer, containers stop being something magical and, at times, weird. In the end, it's just runc spawning a process within namespaces.

Of course, the real last layer is the Linux kernel. There is an infinite number of layers in the universe.

The most important thing about runc is that it follows the OCI runtime-spec. Even though practically every container these days is spawned by runc, it doesn't have to be. You can swap runc for any other container runtime that follows the runtime-spec, and your container engine, such as CRI-O, is supposed to work the same way.

Higher-level tools do not depend on runc per se. They depend on some container runtime that follows the OCI specification. And this is the really beautiful part of the container world these days.
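In practice, swapping the runtime mostly means pointing the engine at a different executable; Podman, for example, has a global --runtime option. The helper below is a hypothetical sketch that picks the first candidate runtime found on PATH. crun, a separate OCI runtime implementation, appears in the usage line only as an example candidate, not as a recommendation.

```shell
# Hypothetical helper: print the full path of the first candidate OCI runtime
# found on PATH. The candidate list is entirely up to the caller.
pick_runtime() {
    local rt
    for rt in "$@"; do
        if command -v "$rt" >/dev/null 2>&1; then
            command -v "$rt"
            return 0
        fi
    done
    echo "pick_runtime: none of $* found on PATH" >&2
    return 1
}
```

Usage might look like podman --runtime "$(pick_runtime crun runc)" run -d busybox sleep infinite; whichever binary is chosen, Podman hands it the same kind of bundle.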

Additional resources

For an even more detailed dive into the internals of runc and how you can develop your own container engine, check out the fantastic Implementing container runtime shim articles.

Use promo code MKDOCKERLESS for a 10% discount on the new Dockerless course by Kirill Shirinkin.

Article Series "Dockerless"

- Dockerless, part 1: Which tools to replace Docker with and why

- Dockerless, part 2: How to build container image for Rails application without Docker and Dockerfile

- Dockerless, part 3: Moving development environment to containers with Podman

- The tool that really runs your containers: deep dive into runc and OCI specifications