AI Strategy Guide: How to Scale AI Across Your Business

Artificial intelligence and its potential to generate business value date back to the 1950s, yet the level of excitement since the release and viral spread of ChatGPT in late 2022 gives the impression that the translation of AI capabilities into profitable business will happen to a degree previously only dreamed of.

For example, in a 2022 survey, 94% of enterprise respondents say AI is critical to success, with 76% planning slight to significant increases in AI spending. Gartner claims in a 2023 report that by 2026 more than 80% of enterprise businesses will have used generative AI APIs or deployed generative AI-enabled applications.

Among startups and smaller companies, the sense that businesses need AI to compete and profit is similarly rosy, with tech incubator Y-Combinator's president Gary Tan proclaiming "There has never been a better time to start an AI company than now."

Given the promise, why then does the 2023 Gartner Hype Cycle put generative AI (GenAI) as about to enter their "Trough of Disillusionment"?

What about non-generative AI fields like autonomous driving where businesses are closing shop?

Why---anecdotally, at least---are only top tier technology companies able to scale AI across their organization, and only a handful able to claim the same about generative AI?

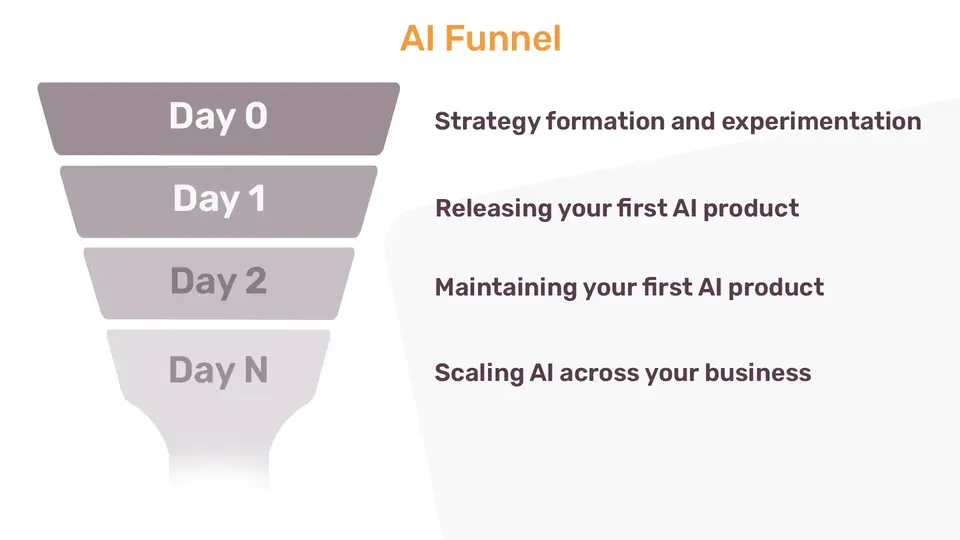

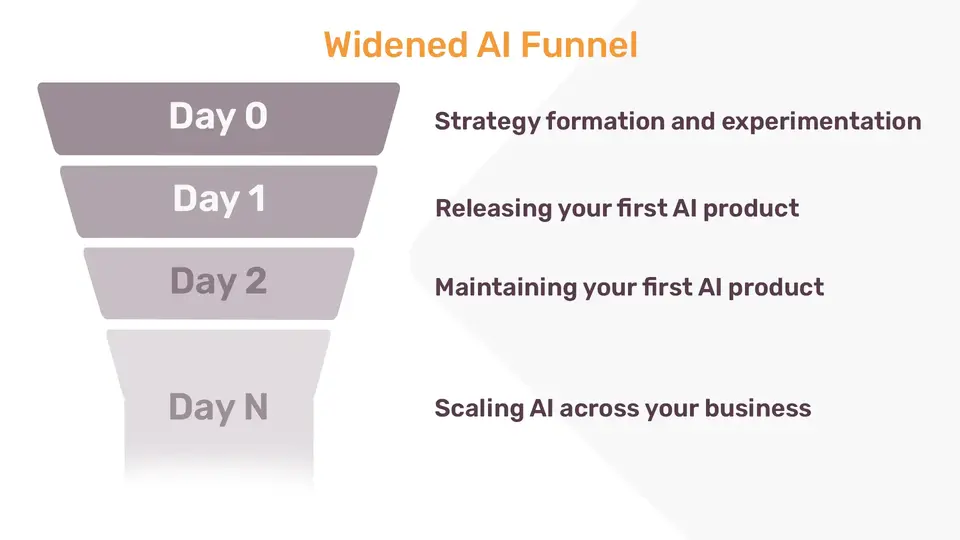

The AI value funnel

All innovation in business faces a funnel-effect in which the number of strategies proposed and initiatives begun dwarfs the number that make it into production in one business domain, with even fewer initiatives being scaled across the business.

Strategy formation and experimentation ("Day 0")

Releasing your first AI product ("Day 1")

Maintaining your first AI product ("Day 2")

Scaling AI across your business ("Day N")

The funnel itself is not AI specific, but to manage the funnel better it is important to keep in mind what makes AI different from other software and innovation topics. Specifically, AI features

a strong dependency on historical data,

a reliance on complicated, often inscrutable ("black box") algorithms (e.g. machine learning models)

There is no universal recipe for ensuring your business profits robustly and sustainably from AI, but there are principles and practices that will help you open up the AI funnel. Following these principles and practices mean more of your strategic goals are met by more projects progressing faster into production in one or more business domains.

Day 0: Formulate an AI strategy with targeted experimentation

If you have been around IT long enough, you've likely come across the opinion that planning is old-school, as "waterfall" methodologies are out, while rapid iterations and pivots are the preferred, agile way to deliver innovative software. In short, don't plan, just try stuff out and adapt.

On the other extreme, you've probably also seen projects (global data warehouse anyone?), that take so long gathering requirements that by the time the first release rolls around, the businesss needs have changed or the planning team misunderstood key needs, making the release obsolete and the effort largely wasted.

On the level of theory, agile requires planning (see e.g. around minute 35 of this Bob Martin keynote) and the original waterfall recognizes the value of feedback and iterations (see Figure 3 of the original paper).

In practice, what's needed is a balance of exploiting what you already know---your market and business offering, top-management-level priorities and others---against exploring unknowns with targeted experimentation.

- finding the balance between management level, top-down and boots-on-the-ground, bottom-up innovation

- hiring the right AI talent at the right time

These two points belong together, and in the order listed, though there should be iterations through the two. At the risk of killing suspense, unless you have top management support for AI, you may be able to hire AI talent, but you won't be able to retain it. Your business needs to have a solid sense of where AI should help and why your business needs at least one management sponsor. Many of us like the scrappy underdog stories about some low-level worker with a great idea who, through perseverance and some genius, overcomes management resistance and brings her innovation to life (and profit). But if it's my job or company on the line, I'd save these stories for weekend streaming and instead go with a solid AI management sponsor or three.

Once there is a recognized need and sponsor, then the first spend on AI should be on the right AI talent. Note that we don't say "top talent" (even if that phrase could me made precise). If there isn't a fit between your business, your AI management sponsors' ambitions, your current IT stack and your first hire, then having a Kaggle master (i.e. someone who is really good at getting top performance on machine learning tasks) may not be the right talent for you. Whether acquiring your first AI talent is better done with a permanent hire or via outsourcing (e.g. from an AI consultancy) depends on your business. I have seen both approaches work well and also go badly.

Before moving on, the main reason your business needs both a management sponsor and initial AI talent comes down to information asymmetry. The explosion of technology and techniques within AI makes separating wheat from chaff a real challenge. To use Gregor Hohpe's metaphor, you need the penthouse and the engine room to work together to obtain value from AI.

As humans we are especially prone to make bad choices in the face of information assymetry due to what is called the substitution or conjunction fallacy. This fallacy involves subconsciously substituting a hard problem for an easier one, leading to overconfidence in finding a solution. Vendors of AI and other services use this fallacy frequently (knowingly or not) to their advantage, and usually to the customer's disadvantage. Unless you can assess how hard your AI problem is from the business, data and AI standpoints, you are headed for expensive trial-and-error.

With these first two ingredients covered, your business is ready for next steps in formulating an AI strategy, such as

- enabling your business to test the value of AI rapidly and responsibly on use cases

- deciding on buy vs build

- deciding not to decide (yet) on certain key topics

There's been talk about democratizing AI for some time now, with many low-code and auto-ML offerings (if unfamiliar, "auto-ML" is roughly giving a tool your business data, and having the tool figure out the best AI model for your task). A novelty of ChatGPT and its successors is that the barrier to entry for AI experimentation is even lower. At a startup I worked for, I ran biweekly "what did you do with ChatGPT" sessions to both encourage and harness the creative potential of non-technical experts trying out AI.

The benefit of enabling AI experimentation across your business is that you increase the chance of finding novel use-cases without the bottleneck of your AI team's capacity. It's important to not forget by now standard AI use cases such as recommendation engines, anomaly detection, cross- and up-sell, task automation and---especially with the explosion of GenAI interest---automated generation of text or images. I like even more the business specific uses of AI, like automating low-level website tasks for your business using GPT-4 Vision (text version here), or emergent AI capabilities like GenAI's ability to extract structured data from inhomogeneous unstructured documents. (By "emergent" I mean not something that the model was explicitly trained to do).

Governance and ethics come up more in Days 1 and beyond below, but suffice it to say you'll already need guardrails if you have your employees experimenting with AI that goes beyond Excel spreadsheets. A primary risk in Day 0 is data breach or leakage, such as the Samsung developer who sent ChatGPT proprietary code.

Any significant buy-vs-build decisions are another area where you both an AI management sponsor and your own AI talent. Without guidance from your AI sponsor, AI talent risks going for tech sophistication or massive DIY work that either isn't needed yet or isn't suited to the pressing business problems. And without input from AI talent, AI sponsors are at risk of signing off on expensive vendor offerings whose technical limitations mean not delivering on your AI business goals, or whose features outstrip actual technical needs. Anyone for streaming, real-time analytics when weekly reports are good enough?

Lastly, your business needs the AI sponsor and talent to together have the courage to decide not to decide on some key issues. Principal among these are big platform decisions. A close second is big hiring decisions. In both cases, it's usually best to go with the minimal viable platform and team until you have reached at least Day 1 of deploying your first AI-powered product.

Day 1: Move quickly and robustly from scoping to production for your first AI product

Sure, there is an element of luck to successfully moving your AI product from scoping and initial prototype into production, but as the baseball manager Branch Rickey said, "Luck is the residue of design".

Having an initial success means you can showcase it to the rest of your business, which increases your AI team's next-round budget potential, not to mention your AI team's motivation and job satisfaction.

Day 1 ends with launching your AI product. But what are the stages that get you to launch? There are various presentations of the AI (or ML or data science) lifecycle. We'll use one from a previous team of mine that has the charm of being conceptually clean, respecting separation of concerns ("who is best at doing what") and being readily translatable into system architecture and implementation.

After initial scoping is completed, the lifecycle stages are

data ingestion

model creation

model execution

logging and monitoring

Before moving on, let's spill some ink on scoping. It's tempting for techies to rush past the scoping to get on to the implementation fun, but the maxim that finding product-market fit is key for startups applies on the micro, project level as well. Solving the wrong problem is always a waste, no matter how "agile" your team may be.

There is a whole literature on scoping, both macro and micro. For now, I'd like to point out two aspects that have delivered significant benefits in my teams.

- Make relevant domain knowledge explicit: Powerpoint with boxes and arrows are never enough. Actual (AWS-style) prose and diagrams (e.g. activity diagrams) pay dividends.

- Match technical metrics to the key business metric: This may seem a no-brainer, yet achieving 99% accuracy will only impress business colleagues for so long unless your technical metric is (positively) moving a recognized business KPI.

I have yet to see an AI project that ignored one of these two and delivered on target.

Data ingestion is about getting business data from its source to the model creation team (and its infrastructure) in the best form for them. Note: I do not include business, domain-logic related data "cleansing" in this phase, as this work belongs to feature engineering of the next phase ...

Model creation is the phase that is most distinctive for AI compared to other software development. It's also what most of us think of when we hear about AI or data science development work. This stage includes exploratory data analysis, feature engineering, and model selection via your chosen model evaluation methodology. The outcome of this phase is a model artifact that can be passed on to ...

Model execution is about making your AI model available to the end user. Great results only your laptop or Google Colab notebook won't cut it in a business. The AI model has to integrate with your business IT if it's going to deliver value.

Logging and monitoring is the other particularly AI-distinctive stage, as both deployed model performance and the incoming input data its evaluating need to be considered statistically, not only case-by-case. Recall what makes AI distinct from other software engineering. The distribution of data you used in creating your model might have drifted over time in the deployed setting (point 1). Even if you don't experience data drift, in production your model might be reacting to inputs it hasn't seen before. Point 2 means that understanding your AI model's behavior requires special care. This stage is arguable a stage for Day 2, but if you don't get started in your initial dev work, pain is awaiting you.

Beyond the order of the AI lifecycle: human and machine interfaces

On the human side, benefits of thinking in terms of a Day 1 lifecycle are obvious: team-members can focus on their main area of expertise, without having to become too much of an expert in adjacent areas. This is the human flavor of "separation of concerns" in software engineering.

Data ingestion is primarily for data engineers, model creation primarily data scientists with support of ML engineers, model execution and logging / monitoring will be carried out mostly by ML or even non-ML engineers. Each uses the work of the others to do their job, while being spared the others' heavy lifting.

For this human benefit to come to fruition, however, the interfaces ("handovers") from one stage to the next must be well-designed.

Iterations among the lifecycle stages

Of course there is a feedback loop from logging and monitoring of deployed model results back to the early phase(s), as e.g. with CRISP-DM and it's machine learning adaptations. A significant benefit of well-designed interfaces between your lifecycle stages means having tight feedback loops of adjacent stages without tight coupling of the implementation.

Some Day 1 subtopics not to forget

Additional subtopics in Day 1 work include

- The importance of making domain knowledge explicit in scoping

- Moving along the AI / data science lifecycle

- Ensuring your workflows and product comply with regulatory and ethical mandates

- Minimizing painful data surprises with data contracts

- Maximizing developer momentum by with pragmatic testing and monitoring

Day 2: Maintain and improve your AI products over time

Your business has at least one AI product running production. Now how do you manage the two sources of AI volatility in the wild, namely, the strong dependency on historical data and often black-box model under the hood?

Making it to and through Day 2 involves among other things

- Taming AI's inherent volatility with monitoring and observability

- MLOps: finding the right level of automation for your AI models and source data

- DataOps: The many flavors of data changes, and how to handle them

As far as I can tell, "observability" is just the desired outcome of good old-fashioned monitoring, with the added benefit of novelty to help with marketing for its vendors. You want to know in sufficient detail and with sufficient timeliness what your deployed AI is doing with its live input data streams.

At a bare minimum, the technical and business metrics that you used to decide your AI was good enough to deploy ("Day 1") need to be regularly tracked. The next thing to monitor is the data being fed into your deployed AI. How does it differ from the data used to train, fine-tune or at the very least choose your AI variant over other options (e.g. model families or architectures) you could have used to solve your business problem? How one dataset differs from another can be answered in hundreds of different ways, so it's important to know which data surprises are most expensive, and how to track them. If you've already set up data contracts in the development work from Day 1, you'll already be off to a good start.

As with finding the right talent, finding the right level of automation for your AI work is more important than focusing on the best and shiniest. I like Google's MLOps levels as a taxonomy for figuring out how much automation is right for your business.

MLOps level 0 is the mainly manual approach. You may already have well designed interfaces between the stages of the AI lifecycle, yet the transition from one to the next is performed by humans, not machines. Even within a lifecycle stage it might make sense to have manual checks or steps while you are figuring out what to automate and what not.

Level 1 of MLOps is when you've put each lifecycle stage and their intefaces in an automated pipeline. The pipeline could be a python or bash script, or it could be a directed acyclic graph run by some orchestration framework like Airflow, dagster or one of the cloud-provider offerings. AI- or data-specific platforms like MLflow, ClearML and dvc also feature pipeline capabilities.

Here we can already see the benefit of viewing these stages in terms of fit for your business and not merit badges to be earned or summits to be climbed. Each of the above pipelining tools is easy to start using---that's part of their sales pitch and why they all have really good 'Getting Started' guides. Knowing which framework is best for your business and its AI ambitions requires hands-on experience, e.g. the manual work of level 0. As a side benefit, having enough experience at level 0 before moving to pipeline automation tends to result in code and architecture that is less bound to one particular pipeline tool, meaning less vendor lock-in.

The final level in Google's taxonomy is CI / CD ("continuous integration, continuous delivery or deployment") at level 2. At this stage, all aspects of developing and deploying your AI product that could and should be automated are. Code changes trigger new versions of your AI product which are evaluated according to your technical and business metrics. If they pass, a new version is ready to be released into production. Data updates and model retraining can also be automated, leading again to a new release candidate.

Note the word "should" above. Depending on your business and specific application domain, there will likely be steps that you would choose not to automate even if technically feasible. As I mentioned in this mkdev podcast, the more business logic your AI product has, as compared to business logic light tasks like counting cars in a parking lot using computer vision or speech transcription, the more likely you'll want some releases to be manually reviewed.

DataOps is like MLOps in that it's taken DevOps from normal software development and adapted it to not-so-standard (abnormal?) software development, in this case data engineering. As we mentioned above, one factor that makes AI different from regular software development is the strong dependency on historical datasets. Unlike with transactional software where enumerated test cases usually suffice to know your application is working as intended, AI applications are trained and the re-trained on large datasets that cannot be inspected and checked data record by record. Again, it will be up to your business and its AI team to decide what level of data automation is right.

Day N: Scale AI across your business

Donald Knuth famously said in the context of algorithm performance, "Premature optimization is the root of all evil." The same warning tends to apply to horizontal scaling, by which we mean making an offering (in this case, the development, deployment and maintenance of AI products) available across your business domains. (Paul Graham also warns about premature scaling attempts in his essay Do Things That Don't Scale.)

Hence the question of "when" is the right term to invest in scaling AI across your business will vary from business to business, though it's likely safe to put a lower bound using the rule of 3: until you have at least 3 AI products in production that are consistently delivering value, it is too early to invest in the platforms and teams required to scale.

Once your business has achieved enough success and experience with deploying and maintining AI products, then you are likely ready to double down and invest in the infrastructure and people-power to extend AI across your remaining business domains.

Scaling AI presents not only technical but also social challenges. Getting a cross functional product team to work together well is hard; solving the human engineering challenges of differing incentives, experience levels and even personality across an organization is much harder. It should come as no surprise that the analytical data-scaling paradigm Data Mesh is defined in as a "sociotechnical approach" (Chapter 1 of Data Mesh: Delivering data-driven value at scale).

Here are three topics that you will need to face in order to succeed on Day N.

- Respecting the main ingredient of AI, data: data warehousing, governance and products

- Automating regulatory and ethical compliance

- Enabling your AI teams to play to their strengths

Rapid AI experimentation from Day 0 (e.g. with GenAI) meant avoiding the human bottleneck of needing your AI team for everything. Likewise, scaling AI across your organization will require solving the capacity bottleneck of your AI team through good design and automation as well as organization structure and dynamics.

As you may have already noticed, the above topics can be seen as AI flavored platform engineering, i.e. the follow-up from DevOps that focuses on (AI) developer experience via shared assets and interfaces.

A corollary of the AI funnel is that relatively few businesses make it to Day N with AI. Scaling is easy to say yet hard to do (see Trick 6 of Sarah Cooper's 10 Tricks to Appear Smart During Meetings). The challenge is to know the when and the how. Scaling work will be more of a "hockey stick" payoff than the previous stages, with some work yielding no business-observable benefit (the shaft of the hockey stick) before you start to reap the benefits (the blade of the hockey stick). The experience your business will have gained already in days 0, 1 and 2 means that the management, business and technical teams will be working together to ensure your AI platform work delivers.

Widen your AI funnel for sustainable innovation and value

Solutions and technology choices arise and fade quickly, especially in AI. Robust design principles and clear mental models, on the other hand, have a staying power and utility that tends to long outlast tech fads. Make sure your business is equipped to widen the AI funnel so you can harness the excitement around AI to deliver sustainable value.